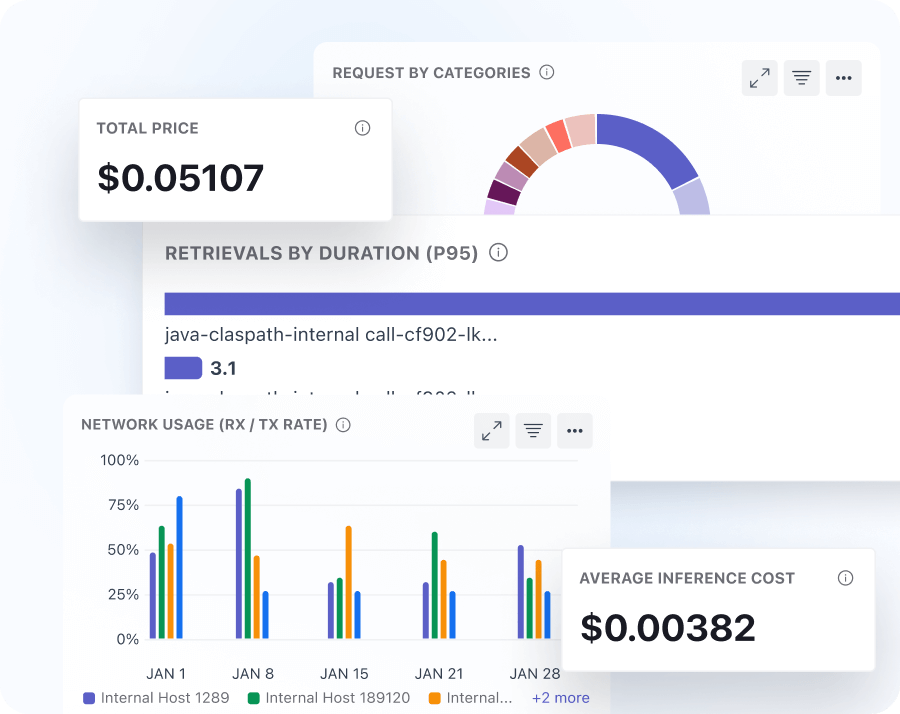

Enhanced Decision-Making

- Base strategy and optimization decisions on data-driven insights.

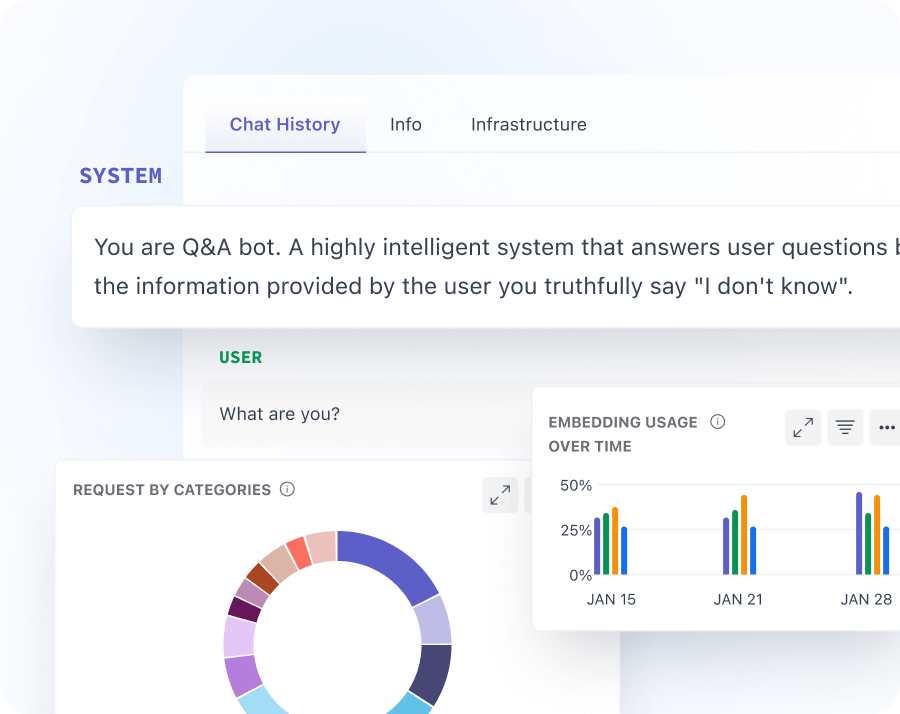

- Visualize token performance, cost, and request metrics to find optimization opportunities.

- Identify trends and patterns with advanced visualization tools.

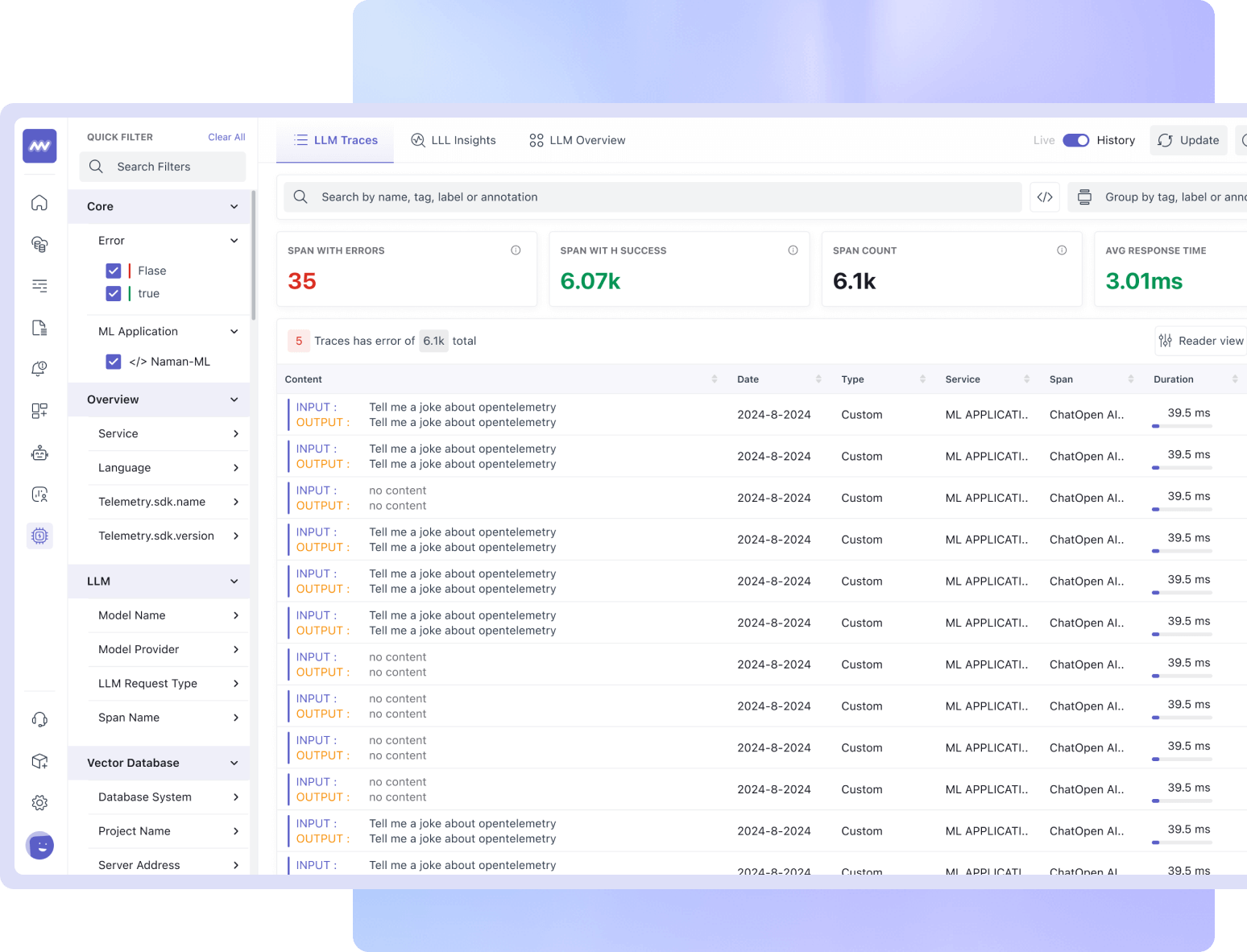

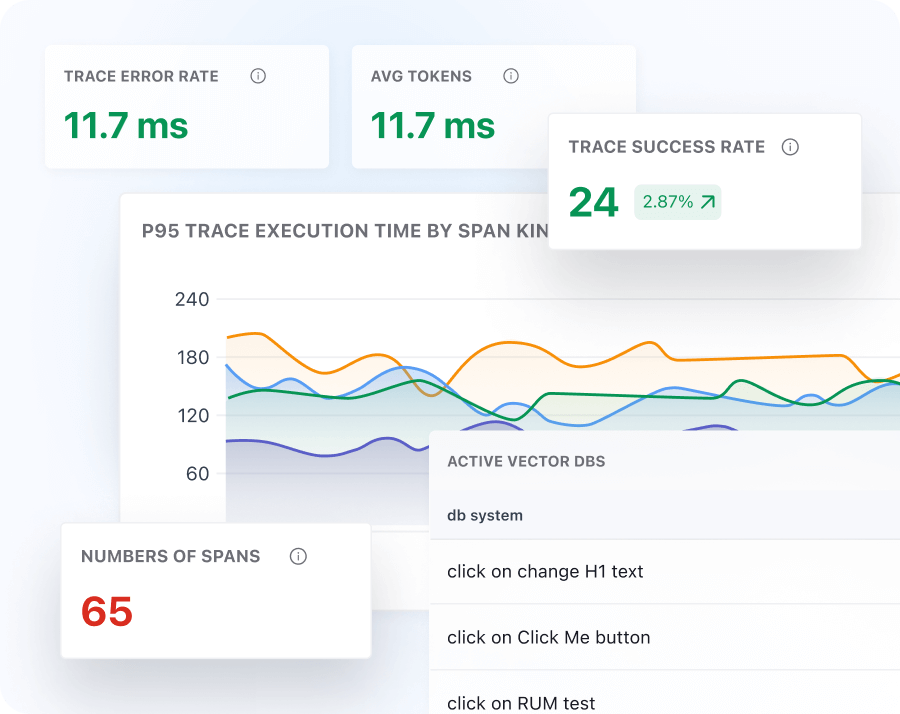

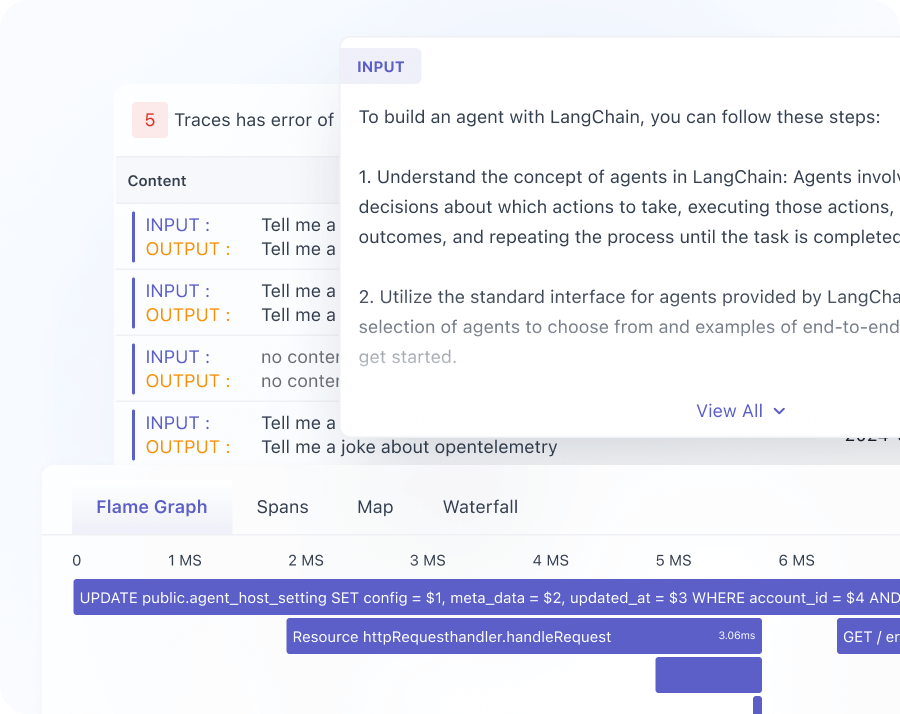
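As a rough illustration of the token, cost, and request metrics mentioned above, the sketch below rolls per-request records up into per-model totals. The `Request` record, the `PRICE_PER_1K` table, and the model name `model-a` are hypothetical, and the prices are placeholders rather than real provider rates.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens (placeholders, not real rates).
PRICE_PER_1K = {"model-a": {"input": 0.50, "output": 1.50}}


@dataclass
class Request:
    """One LLM request as a monitoring pipeline might record it (assumed shape)."""
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def request_cost(req: Request) -> float:
    """Cost of a single request in USD, based on the assumed price table."""
    price = PRICE_PER_1K[req.model]
    return (req.input_tokens * price["input"]
            + req.output_tokens * price["output"]) / 1000


def summarize(requests: list[Request]) -> dict[str, dict[str, float]]:
    """Aggregate request count, total tokens, total cost, and mean latency per model."""
    totals = defaultdict(lambda: {"requests": 0, "tokens": 0,
                                  "cost_usd": 0.0, "latency_ms": 0.0})
    for req in requests:
        row = totals[req.model]
        row["requests"] += 1
        row["tokens"] += req.input_tokens + req.output_tokens
        row["cost_usd"] += request_cost(req)
        row["latency_ms"] += req.latency_ms
    summary = {}
    for model, row in totals.items():
        summary[model] = {
            "requests": row["requests"],
            "tokens": row["tokens"],
            "cost_usd": row["cost_usd"],
            "avg_latency_ms": row["latency_ms"] / row["requests"],
        }
    return summary
```

A dashboard would typically plot these per-model totals over time; the aggregation itself is the same.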

Improved Performance and Troubleshooting

- Detect issues early, before they affect business operations and performance.

- Proactively address slowdowns and latency to keep operations running smoothly.

- Investigate root causes with span-level details.
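To make span-level investigation concrete, here is a minimal sketch that finds the span where a trace spends the most time of its own. The `Span` record and the span names in the usage note are hypothetical, and the calculation assumes a span's direct children run one after another without overlapping.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """A simplified trace span (assumed shape, not a specific SDK's type)."""
    span_id: str
    parent_id: Optional[str]
    name: str
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def self_times(spans: list[Span]) -> dict[str, float]:
    """Map span_id to its duration minus the time spent in direct children.

    Assumes children of the same parent do not overlap in time.
    """
    child_time = {s.span_id: 0.0 for s in spans}
    for s in spans:
        if s.parent_id in child_time:
            child_time[s.parent_id] += s.duration_ms
    return {s.span_id: s.duration_ms - child_time[s.span_id] for s in spans}


def slowest_span(spans: list[Span]) -> Span:
    """Return the span with the largest self time, a first root-cause candidate."""
    times = self_times(spans)
    return max(spans, key=lambda s: times[s.span_id])
```

For a trace with a root `handle_request` span wrapping a short `retrieve_docs` span and a long `llm_call` span, `slowest_span` would point at `llm_call` rather than at the root, even though the root has the longest total duration.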

Increased Efficiency

- Automate monitoring and troubleshooting workflows.

- Integrate seamlessly with leading LLM providers and frameworks.

- Standardize data formats to ensure consistency across all sources.
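One way to picture standardized data formats is a small adapter that maps each provider's token-usage fields onto a single schema. The sketch below is illustrative only: the provider keys, the `FIELD_MAP` table, and the output schema are assumptions, with field names modeled on the usage blocks that OpenAI-style and Anthropic-style responses commonly carry.

```python
# Assumed mapping from provider to (input-token field, output-token field).
FIELD_MAP = {
    "openai": ("prompt_tokens", "completion_tokens"),
    "anthropic": ("input_tokens", "output_tokens"),
}


def normalize_usage(provider: str, response: dict) -> dict:
    """Convert a provider response into one common usage record.

    The common schema (input_tokens, output_tokens, total_tokens) is a
    hypothetical choice for this example.
    """
    if provider not in FIELD_MAP:
        raise ValueError(f"unsupported provider: {provider}")
    input_key, output_key = FIELD_MAP[provider]
    usage = response["usage"]
    input_tokens = usage[input_key]
    output_tokens = usage[output_key]
    return {
        "provider": provider,
        "model": response.get("model", "unknown"),
        "input_tokens": input_tokens,
        "output_tokens": output_tokens,
        "total_tokens": input_tokens + output_tokens,
    }
```

Once every source passes through an adapter like this, downstream dashboards and alerts can rely on one set of field names regardless of where a record came from.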

Customized Monitoring

- Create customized metrics to meet specific monitoring requirements.

- Use pre-built dashboards for rapid insights and data analysis.

- Use SDKs compatible with OpenTelemetry for enhanced observability.