Autoscaling is a critical capability for any organization planning to scale its websites, applications, and digital platforms while handling sudden traffic growth.

Every application obtains the processing power it requires from a server or server farm on which it is hosted. Each server has a limited amount of processing power. So, what happens when the app requires more processing power than is currently available? You autoscale.

Modern cloud-native applications rarely experience steady traffic. From flash sales and viral campaigns to unexpected outages, demand can spike or drop within minutes. Autoscaling ensures your application infrastructure responds instantly without manual intervention by automatically adding or removing compute resources based on real-time demand.

According to Gartner, over 85% of organizations will adopt a cloud-first principle by 2025, making autoscaling a foundational capability rather than an optimization. Without autoscaling, teams either overprovision resources, wasting money, or underprovision, risking downtime.

Autoscaling saves you the time and effort required to manually scale a server or system to meet all potential levels of server load, whether high or low.

What is Autoscaling?

Autoscaling (also called auto scaling or automatic scaling) is a cloud computing capability that automatically increases or decreases computing resources such as virtual machines, containers, or pods, based on real-time demand, performance metrics, or predefined schedules.

The primary goal of autoscaling is to maintain application performance and availability during traffic spikes while minimizing infrastructure costs during low-usage periods.

Automatic scaling is widely accepted for its versatility, flexibility, and cost-effectiveness. Some of the world’s most popular websites, such as Netflix, have opted for autoscaling to meet the growing and ever-changing needs of consumers.

Amazon Web Services (AWS), Microsoft Azure, and Oracle Cloud are some of the most popular cloud computing vendors offering autoscaling services.

Horizontal vs Vertical Autoscaling

Autoscaling can be implemented in two primary ways: horizontal and vertical.

Horizontal autoscaling adds or removes instances from a resource pool based on demand. When traffic increases, new instances are launched; when demand drops, excess instances are terminated. This approach is highly scalable, fault-tolerant, and ideal for cloud-native applications.

Vertical autoscaling increases or decreases the capacity of an existing instance by scaling up or down the instance’s CPU, memory, or storage. While useful for certain workloads, vertical autoscaling has physical limits and often requires restarts, making it less suitable for high-traffic web applications.

Most modern cloud architectures rely on horizontal autoscaling to achieve high availability and resilience.

Why is Autoscaling Important?

Autoscaling is especially relevant today as the world commits to reducing carbon emissions and its environmental footprint. The process helps conserve energy by putting the idle servers to sleep when the load is low.

Autoscaling is most beneficial for applications with unpredictable loads because it improves server uptime and utilization. Based on conditions specified by the system administrator, autoscaling can automatically scale up or down on a computing matrix to adjust to load. This reduces electricity consumption and usage costs, since many cloud service providers charge based on server usage.

E.g.

– Netflix scales thousands of instances during peak streaming hours

– E-commerce sites auto-scale during flash sales

AWS reports that customers using autoscaling reduce infrastructure costs by up to 30–50% compared to static provisioning. Autoscaling reduces idle compute time, lowering energy consumption and cloud carbon footprint.

Some of the other key benefits of autoscaling are:

- Better load management: It enables effective server load management by scaling servers during low-traffic periods to handle non-time-sensitive computing tasks. This is possible because scaling frees up significant server capacity with lower traffic.

- Dependability: When server load is highly erratic and unpredictable, as in e-commerce websites or video streaming services, this approach prepares the server to handle fluctuating demand, making it a dependable option.

- Fewer failures: Scaling mechanisms ensure that failed instances are immediately replaced with healthy ones, reducing application downtime.

- Lower energy consumption: By automatically scaling resources during periods of low traffic, servers can enter idle states, significantly reducing electricity usage for organizations running their own infrastructure.

- Business continuity during peak events: High-traffic events such as flash sales, streaming premieres, or festive sales can overwhelm static infrastructure. Dynamic resource scaling ensures applications remain available during unpredictable demand surges.

How Autoscaling Works

The scaling system continuously monitors system performance and automatically adjusts resources to match demand.

Autoscaling workflow in cloud computing: User → Load Balancer → Auto Scaling Group → Instances

This mechanism relies on performance metrics and scaling policies to make scaling decisions. Common metrics include CPU utilization, memory usage, request rate (requests per second), queue length, and application-specific custom metrics.

When a metric crosses a predefined threshold, such as CPU usage exceeding 70% for five consecutive minutes, the scaling system triggers a scale-out event.

New instances are launched, registered with the load balancer, and automatically begin serving traffic. When demand decreases, scale-in policies remove unnecessary instances after a cooldown period.

The system tightly integrates load balancing, health checks, and monitoring tools to ensure that only healthy instances receive traffic.

A server cluster comprises the main servers and replicated servers made available when traffic spikes. When a user initiates a request, it traverses the internet to a load balancer, which determines whether to scale up or scale out its supplementary units.

In fact, the entire process of autoscaling banks on load balancing – it defines the server pool’s efficiency in handling traffic.

Types of Autoscaling

We classify autoscaling into three major types based on how the system invokes servers from the circuit. Dynamic autoscaling continuously adjusts resources in real time based on live performance metrics without relying on fixed schedules or manual input.

Reactive Autoscaling

Reactive autoscaling operates on preset “triggers” or thresholds specified by the administrator, which trigger the addition of additional servers when crossed. Administrators set thresholds for key server performance metrics, such as the percentage of CPU capacity used.

For example, reactive autoscaling triggers additional servers to scale in when the primary server reaches 80% capacity for a full minute.

Essentially, this type of autoscaling “reacts” to incoming traffic.

Proactive or Predictive Autoscaling

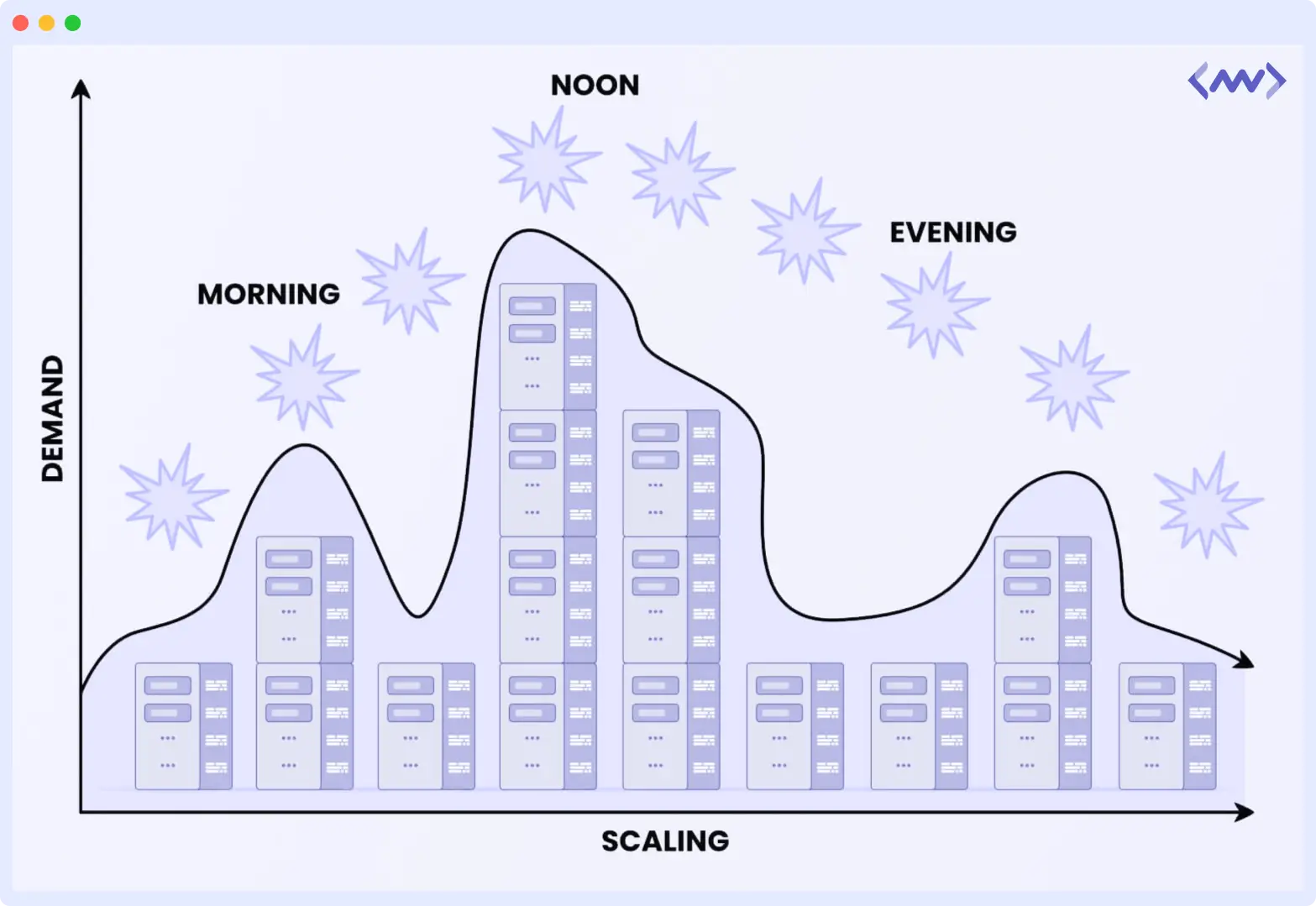

Suitable for applications where server loads are more or less predictable. Predictive or proactive autoscaling schedules additional servers to kick in automatically during peak traffic times based on the time of day.

This type of autoscaling uses artificial intelligence (AI) to “predict” when traffic will be high and to schedule server augmentations in advance.

Scheduled Autoscaling

Scheduled autoscaling is similar to predictive autoscaling; the only difference is that it schedules additional servers for peak periods. While predictive autoscaling does this autonomously, scheduled autoscaling relies more on human input to plan the servers.

Autoscaling in Practice

Various cloud service providers deploy autoscaling using in-house processes and software to optimize server performance.

Let’s look at some of these examples in detail.

AWS Autoscaling

Amazon Web Services (AWS) offers multiple autoscaling services, including AWS Auto Scaling and Amazon EC2 Auto Scaling. Amazon EC2 relies on launch templates to retrieve information about launching instances (such as the VPC subnet). Users can manually set the instance count or let EC2 do it automatically.

💬 Discover how Middleware simplifies AWS monitoring and gives you complete visibility into your cloud resources.

In containerized environments, Kubernetes provides autoscaling through the Horizontal Pod Autoscaler (HPA), Vertical Pod Autoscaler (VPA), and Cluster Autoscaler. These components automatically adjust pods and nodes based on resource usage and custom metrics.

Google Compute Engine (GCE)

GCE enables autoscaling via Managed Instance Groups (MIGs). Its console lets users define MIGs, organize them by the desired performance metric (e.g., CPU utilization), adjust them to the required autoscaling cap, and enable autoscaling with a single click.

IBM Cloud

IBM’s services run on virtual servers autoscaled by cluster-autoscaler. The system adds or removes nodes based on instance load when the preset threshold is exceeded. This autoscaling mechanism works with workload policies that users define as per sizing needs.

Microsoft Azure

Azure provides its users with a console to set autoscale programs. They can navigate to the autoscale option in their console, add new settings and rules for scaling across various server parameters, and define the autoscaling conditions.

Oracle Cloud Infrastructure

Oracle Cloud provides full-scale control over autoscaling. It allows users to configure it for metric-based or schedule-based autoscaling. Users can edit and configure autoscaling policies.

Oracle offers multiple autoscaling services to balance network load across servers elastically.

Autoscaling Best Practices

To get the most value from autoscaling, organizations should follow these best practices:

- Use multiple metrics instead of relying solely on CPU utilization.

- Configure cooldown periods to prevent rapid scaling fluctuations.

- Regularly test autoscaling policies under peak-traffic scenarios.

- Monitor scaling events using observability tools to identify bottlenecks.

- Avoid aggressive scale-down policies that can impact performance.

Autoscaling works best when combined with strong monitoring, alerting, and observability practices.

Autoscaling is not as Easy As It Sounds

Today, autoscaling is a powerful, sophisticated, and useful compute feature that helps millions of websites and apps manage server load. However, as with traditional scaling, you still face many hurdles to enable autoscaling.

Here are four overarching reasons why autoscaling can be difficult to optimize and apply, especially on large servers with massive amounts of information.

1. Searching for Information Becomes Difficult

Imagine an e-commerce website with a database of over a million names and customer contacts. Regardless of the site’s measures to organize this massive dataset, scouring it for information is not easy.

With autoscaling, however, the system must make this information available at all times across additional servers, which presents a significant challenge.

2. Consistency is Hard to Achieve

When an e-commerce website opts for autoscaling services, another major hurdle is achieving consistency.

For example, during flash sales, the system constantly updates product availability data and makes these changes available to all users, ensuring no one places an order for an unavailable product.

Ensuring information and data consistency in such situations, especially when server load is high, isn’t straightforward.

3. Concurrent Use Increases Server Demands

Using the same example above, suppose millions of users are trying to log into the e-commerce website to purchase the same product. Although unlikely, this is the kind of situation a server should be ready for.

Each of these users requires simultaneous access to the data and information on the servers. This is a major challenge that any autoscaling attempt must overcome.

4. Speed maintenance becomes complex

When it comes to large datasets, scaling up or adding a server inevitably affects how quickly these resources can be deployed to serve information to application or website users.

Native Autoscaling Support Issues

Beyond the computing resources and expertise required to tackle autoscaling and deliver a satisfying customer experience, most cloud service providers don’t offer native autoscaling support because the associated costs are very high.

Physical Server Costs

Cloud-based hosting services that offer autoscaling almost always use horizontal autoscaling to achieve the desired result. This entails deploying additional servers or machines to the existing resource pool versus vertical autoscaling, which involves upgrading the existing servers and machines.

A good example of vertical autoscaling is increasing RAM capacity in an existing machine. Regardless of the approach used to enable autoscaling, a cloud service provider’s costs are high.

Investment in your workforce

To ensure efficient autoscaling, organizations need a dedicated team of experts to oversee and monitor the process, especially for high-traffic websites.

For example, in the United States, e-commerce platforms such as Shopify and Amazon see higher sales during the festive sales season than on Black Friday.

💬 Ensure your website performs flawlessly during the holiday rush. Know More

Therefore, not all cloud service providers are willing to make the substantial investments required to build a team capable of supporting autoscaling natively on their platforms.

Autoscaling is Here to Stay

Autoscaling is an increasingly popular web hosting feature that’s undoubtedly here to stay. With significant investment in technology and strategy, many tech giants have ensured that consumers now have access to reliable autoscaling features and are continually improving their customer experience.

Organizations interested in maximizing autoscaling can choose either vertical or horizontal scaling. Right off the bat, vertical autoscaling isn’t ideal for web applications or resources serving thousands of users, because several architectural limitations in upgrading existing servers affect availability.

On the other hand, horizontal autoscaling ensures continued availability by distributing user sessions across a scalable server pool rather than restricting them to a single server.

Despite the investment required and the challenges involved, autoscaling offers organizations both short- and long-term benefits. Therefore, if an organization wants to scale its operations and web resources, autoscaling is often the best option available.

Achieving high availability for your application can be a chore. Read more about high-availability options to spot errors and traffic patterns in your application and take appropriate action.

⚡ Facing downtime or performance issues? Middleware helps you identify, diagnose, and fix cloud infrastructure problems before they impact users. Get Demo

What are the types of Autoscaling?

Autoscaling is classified into four types: manual, scheduled, dynamic, and predictive.

What is Autoscaling in Devops?

Auto-scaling ensures your application always has the compute capacity it requires and eliminates the need to monitor server capacity manually. You can autoscale based on incoming requests (front-end) or the number of jobs in the queue as well as the length of time jobs have been in the queue (back-end).

What exactly is the distinction between Automatic scaling and load balancing?

While load balancing will re-route connections from unhealthy instances, new instances must be added to the network. As a result, auto scaling will start these new instances, and load balancing will connect to them.

What is microservices scalability?

Scalability is the most important feature of microservices. Statistically, monolithic programmes share the resources of the same machine. Microservices, in turn, scale their specifications as needed. Microservice architectures can then manage their resources and allocate them where and when required.

What metrics are commonly used for autoscaling?

CPU utilization, memory usage, request rate (RPS), queue length, and custom application metrics.

Is autoscaling suitable for Kubernetes?

Yes, Kubernetes supports autoscaling via HPA, VPA, and Cluster Autoscaler.

Does autoscaling reduce cloud costs?

Yes, autoscaling prevents overprovisioning and reduces idle resource usage.