Server-side web application development has changed drastically since the introduction of Docker. Docker makes it much easier to build scalable, manageable applications composed of microservices.

This article explains what microservices are and how to containerize them with Docker.

Microservices, Docker, and containerization have become closely associated in recent years.

Although they are different technical concepts, together they fit well into a distinct ecosystem, and this integrated approach is one the software industry continues to gravitate toward.

When working with different pieces of a software puzzle, you need to understand how each piece fits into the big picture. That goes for this trio (microservices, Docker, and containers) and their roles in packaging, deploying, and running microservice applications.

But first, let’s learn more about the heart of it all: microservices.

What are microservices?

In real life, when we face a complex problem, we often break it down into smaller tasks. Similarly, microservices are a software design paradigm that breaks a system down into smaller services, or sub-modules.

Together, these small services form a single application. Each one is designed to be decoupled from the rest of the system so it can work independently while serving the application's overall purpose.

Each service in a microservice architecture encapsulates a specific function that aligns with application features and is accessible to other services. For example, an e-commerce application usually consists of modules like order management, shipping, inventory, and payments, to name a few.

Each of these modules represents a specific business domain and translates into a microservice. From the outside, a microservice is treated as a black box that is accessible only through an abstraction layer implemented as an application programming interface (API).
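To make the black-box idea concrete, here is a minimal sketch of a to-do microservice in Node.js, the runtime used by the example application later in this article. It is illustrative only: the `/todos` route, the in-memory storage, and the `createTodoService` name are assumptions, not the code from the tutorial's repository. Other services can reach the data only through the HTTP API; the array holding it is private.

```javascript
// Hypothetical to-do microservice: callers interact only through the
// HTTP API; the in-memory array is a private detail they cannot reach.
const http = require("http");

function createTodoService() {
  const todos = []; // internal state, hidden behind the API

  return http.createServer((req, res) => {
    // Helper that sends a JSON response with the given status code.
    const send = (status, body) => {
      res.writeHead(status, { "Content-Type": "application/json" });
      res.end(JSON.stringify(body));
    };

    if (req.url === "/todos" && req.method === "GET") {
      send(200, todos);
      return;
    }

    if (req.url === "/todos" && req.method === "POST") {
      let raw = "";
      req.setEncoding("utf8");
      req.on("data", (chunk) => {
        raw += chunk;
      });
      req.on("end", () => {
        let title;
        try {
          title = JSON.parse(raw || "{}").title;
        } catch (err) {
          send(400, { error: "invalid JSON" });
          return;
        }
        if (typeof title !== "string" || title.trim() === "") {
          send(400, { error: "title is required" });
          return;
        }
        const todo = { id: todos.length + 1, title, done: false };
        todos.push(todo);
        send(201, todo);
      });
      return;
    }

    send(404, { error: "not found" });
  });
}

module.exports = { createTodoService };
```

Because the storage is private, it could later move to a database without any change visible to the services that call the API.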

The goal of this architecture is to create a network of accessible services with the following criteria:

- Organized around single-purpose functionality

- Used independently

- Owned by a small team

- Loosely coupled

- Highly maintainable and testable

What is containerization, and how does Docker facilitate it?

The libcontainer README defines a container as a self-contained execution environment that shares the host system’s kernel and is (optionally) isolated from other containers in the system.

Containerization is similar to using physical shipping containers to transport goods from one place to another. Software containers work the same way for applications: they aim to solve consistency and portability issues when deploying or shipping software.

At the end of the software development lifecycle, an application is deployed to a runtime environment (e.g., on-premises physical servers, virtual machines (VMs), or cloud VM instances). These target environments create portability and compatibility challenges: their operating systems and system configurations often differ from the development environment, so the application may not run as expected.

So how do you make sure your software runs the same way on different machines? Containerization, in effect, lets you ship your machine's environment along with the software: a container packages the application together with its runtime, libraries, and configuration. Software teams then only have to make sure the software works on their own machines before packaging it into containers using one of several technologies. One such technology is Docker.

Docker is both a containerization technology and an ecosystem. It’s made up of the following components:

- Dockerfile: A text configuration file that defines the steps to build a container image and the command that starts the container process

- Docker image: A read-only template, made up of layers, that contains a program along with all of the dependencies and configuration it needs to run

- Docker container: An instance of a container image that runs a specific program in an isolated environment, with its own filesystem, processes, and network interfaces, while sharing the host's kernel

- Docker Hub: A Docker-provided registry service for finding and sharing container images

- Docker client: Also known as the Docker CLI, it receives user commands and sends them to the Docker server, which does the actual heavy lifting

- Docker server: The Docker daemon (dockerd), which receives and handles API requests from the Docker CLI and builds, runs, and manages containers

Dockerizing microservices

Each microservice serves a single purpose and exposes an API, which makes it a natural fit for containerization: you can package each service in its own Docker container and build, deploy, and scale it independently.

Prerequisites

- Docker: Install Docker Engine, which includes the Docker client and Docker server (on macOS and Windows, it comes with Docker Desktop). You can then build images and run containers on your local machine, regardless of the underlying OS.

- Node.js: Version 10 or higher installed on your local machine.

Dockerize backend microservice

For the backend microservice, you can clone this repository, which contains a basic Node.js application that exposes a to-do list API.

To Dockerize this application, create a file named Dockerfile (no extension) in the application's root directory. It contains the instructions for building a Docker image:

FROM node:14-alpine

WORKDIR /usr/src/app

COPY ["package.json", "package-lock.json", "./"]

RUN npm install

COPY . .

EXPOSE 3001

RUN chown -R node /usr/src/app

USER node

CMD ["npm", "start"]

This is the finished Dockerfile. Let's explore what each line means.

FROM node:14-alpine

The FROM instruction specifies the base image to build on and must come first in the file (only parser directives, comments, and ARG instructions may precede it). The base image provides the initial layers of your image and is the starting point for what your final image will contain. Here, node:14-alpine is the official Node.js 14 image built on Alpine Linux. A base image can be an official Docker image like BusyBox or CentOS, or a custom image that includes additional software packages and dependencies you need.

WORKDIR /usr/src/app

The next instruction is WORKDIR. It sets the working directory for the RUN, CMD, ENTRYPOINT, COPY, and ADD instructions that follow it, and creates the directory if it doesn't exist. It's similar to running the “cd” command inside the container.

COPY ["package.json", "package-lock.json", "./"]

As the name suggests, the COPY instruction copies files. During the build, the listed files are copied from the build context (here, the application directory on your host) into the image. Because the destination ./ is a relative path, the files land in the working directory set by WORKDIR.

Here, the files being copied are the ones that list the main application's package dependencies (package.json and package-lock.json). A similar instruction is ADD, which performs the same function but can also fetch files from remote URLs and automatically extract local tar archives.

RUN npm install

The RUN instruction executes a command while the image is being built, typically to install system packages or application dependencies. The previous step copied the dependency files; this step runs npm install to install the dependencies they list.

COPY . .

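This COPY instruction copies the rest of the application's source code from the host into the container's working directory. Copying the dependency files and running npm install first lets Docker reuse the cached dependency layer on later builds, as long as package.json and package-lock.json haven't changed. At this point, the image has everything the application needs to run. If your host directory contains a node_modules folder, listing it in a .dockerignore file keeps this step from copying it over the dependencies installed in the image.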

EXPOSE 3001

The EXPOSE instruction doesn't publish the port. It documents which port the application listens on (3001 here); to reach the application from the host, you still need to publish the port when you run the container.
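Since EXPOSE only documents the port, the application itself has to listen on it, and on an interface Docker can forward traffic to. The sketch below is a hypothetical entry point, not the repository's actual code: the `PORT` and `HOST` environment variables, the `listenOptions` and `start` names, and the `{ status: "ok" }` response are all assumptions. It defaults to port 3001 to match EXPOSE 3001 and binds to 0.0.0.0, because an app bound to 127.0.0.1 inside a container only accepts connections from within that container.

```javascript
// Hypothetical entry point (for example, server.js) for the to-do backend.
// Assumptions: the port comes from a PORT environment variable (default
// 3001, matching EXPOSE 3001) and the host from HOST (default 0.0.0.0).
const http = require("http");

function listenOptions(env) {
  const port = Number.parseInt(env.PORT || "3001", 10);
  if (!Number.isInteger(port) || port < 0 || port > 65535) {
    throw new Error(`invalid PORT: ${env.PORT}`);
  }
  // Inside a container, 127.0.0.1 is the container's own loopback
  // interface, which published ports cannot reach; 0.0.0.0 listens on
  // every interface, including the one Docker forwards traffic to.
  const host = env.HOST || "0.0.0.0";
  return { port, host };
}

function start(env = process.env) {
  const { port, host } = listenOptions(env);
  const server = http.createServer((req, res) => {
    res.writeHead(200, { "Content-Type": "application/json" });
    res.end(JSON.stringify({ status: "ok" }));
  });
  server.listen(port, host, () => {
    console.log(`listening on ${host}:${server.address().port}`);
  });
  return server;
}

module.exports = { listenOptions, start };
```

In a real entry point, the file would end with a call to `start()`, and the start script in package.json would run that file.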

RUN chown -R node /usr/src/app

USER node

Without these instructions, the container's processes would run with root privileges. It's safer to run them as a limited user: the RUN instruction gives the node user (provided by the official Node.js images) ownership of the application's working directory, and USER makes node the user that runs the container's processes. Running your container as a non-root user adds a layer of security that limits what malicious code can do if it compromises the container, making it harder to reach your host machine and the other processes running on it.
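If you want the application to flag a missing USER instruction, a small startup check can help. This is an optional, hypothetical addition rather than part of the tutorial: the `isRunningAsRoot` name and the warning text are assumptions. It relies on Node's `process.getuid()`, which exists only on POSIX platforms, so it is guarded for Windows.

```javascript
// Hypothetical startup check (not part of the original tutorial): warn
// when the Node.js process is running as root, e.g. if USER node was
// left out of the Dockerfile.
function isRunningAsRoot(proc = process) {
  // process.getuid() exists only on POSIX platforms, so guard for Windows.
  return typeof proc.getuid === "function" && proc.getuid() === 0;
}

if (isRunningAsRoot()) {
  console.warn("Running as root: consider adding `USER node` to the Dockerfile.");
}

module.exports = { isRunningAsRoot };
```

Accepting the process object as a parameter keeps the check easy to test with a stand-in object.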

CMD ["npm", "start"]


Finally, CMD defines the command to execute when a container starts from the image. Here, npm start runs the start script defined in the application's package.json.

Save the finished Dockerfile in the root directory of the backend application you cloned. The next step is to build the container image, run the container, and test the application.

To build the container image, run the following command from the root of the backend application directory:

docker build -t nodejs-backend-application:0.1.0 .

After building the image, you can run a container from it. To access your application from your host machine, you need to set up port forwarding: Docker forwards traffic arriving on a port on the host to the port the application listens on inside the container. The -p flag takes the form host_port:container_port.

docker run -p 3001:3001 nodejs-backend-application:0.1.0

Finally, test access to your application by running a curl command, opening it in a browser, or sending a request from Postman.

curl http://127.0.0.1:3001
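If you'd rather script this check, for example in a CI step, the sketch below does roughly what the curl command does using Node's built-in `http` module. The `checkEndpoint` name and the 5-second timeout are assumptions for illustration; it assumes the container is running with port 3001 published on the host.

```javascript
// A scripted alternative to the curl check. Assumption: the container
// was started with port 3001 published on the host.
const http = require("http");

function checkEndpoint(url, timeoutMs = 5000) {
  return new Promise((resolve, reject) => {
    const req = http.get(url, (res) => {
      let body = "";
      res.setEncoding("utf8");
      res.on("data", (chunk) => {
        body += chunk;
      });
      res.on("end", () => resolve({ status: res.statusCode, body }));
    });
    // Fail instead of hanging if nothing answers on the port.
    req.setTimeout(timeoutMs, () => {
      req.destroy(new Error(`timed out after ${timeoutMs} ms`));
    });
    req.on("error", reject);
  });
}

module.exports = { checkEndpoint };
```

Usage: `checkEndpoint("http://127.0.0.1:3001").then(({ status, body }) => console.log(status, body)).catch((err) => console.error("not reachable:", err.message));`. A connection-refused error usually means the port wasn't published or the app isn't listening on it.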

Conclusion

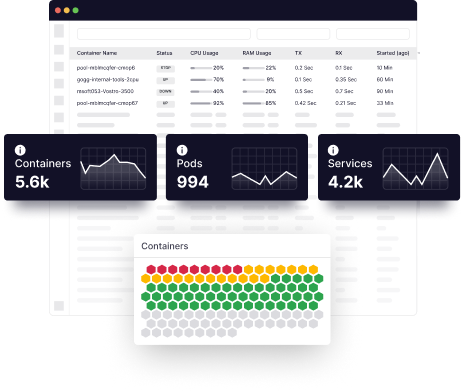

Containerizing microservices can be challenging and time-consuming, especially for a large application. Using Middleware, you can automate your workflow by simply connecting or integrating your application’s source code (such as a Git repository). It takes care of the containerization process and deployment to a VM.

Check out how Middleware can monitor and analyze your Docker containers. Request a demo today.


FAQs

What are the advantages of using Docker for microservices?

- Docker is available for Windows, macOS, and Linux distributions such as Debian.

- A Docker container can start in seconds because it is essentially an isolated process running on the host's operating system kernel. A virtual machine, by contrast, has to boot a complete OS, which can take minutes.

- Docker containers require fewer computing resources than virtual machines because they don't each run a full guest OS. This reduces performance overhead and makes it possible to run many microservices on the same server.

How do microservices communicate with each other in Docker?

Docker's built-in networking lets you attach the containers running different microservices to the same user-defined network (for example, one created with docker network create). On such a network, Docker's embedded DNS server resolves each container's name to its internal IP address, so services can discover each other dynamically and communicate by name instead of by hard-coded IP addresses. Containers on the default bridge network don't get this name resolution.
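In application code, name-based addressing usually means building the other service's URL from its container name. The helper below is a hypothetical sketch: the `serviceUrl` function, the `TODO_SERVICE_URL` environment variable, and the container name `todo-backend` are assumptions. Reading the base URL from the environment lets the same code point at `127.0.0.1` when running outside Docker.

```javascript
// Hypothetical helper for one microservice to locate another on a
// user-defined Docker network. "todo-backend" and TODO_SERVICE_URL are
// illustrative names, not part of the original tutorial.
function serviceUrl(path, env = process.env) {
  // On a user-defined network, Docker's embedded DNS resolves the
  // container name, so it can be used directly as a hostname.
  const base = env.TODO_SERVICE_URL || "http://todo-backend:3001";
  return new URL(path, base).toString();
}

module.exports = { serviceUrl };
```

For example, after `docker network create todo-net`, starting the backend with `docker run --network todo-net --name todo-backend nodejs-backend-application:0.1.0` would let another container attached to `todo-net` call `serviceUrl("/todos")`. The name only resolves inside that network; from the host, you'd still use a published port.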

How is Kubernetes different from Docker?

- Docker is a containerization platform that lets developers package an application and its dependencies together into a container and run it.

- Kubernetes is a container orchestration platform: it provides a set of tools and APIs for automating the deployment, scaling, and management of containers.

- In short, Docker packages and runs your applications in containers, while Kubernetes automates operating those containers at scale, typically across a cluster of machines.

How many Docker containers can I run?

- It's common to run tens or even hundreds of containers on a single host, but the practical limit depends on the resources available on the host and the requirements of the individual containers.

- You can use the command “docker stats” to monitor the resource usage of your running containers, and use that information to determine how many more containers you can run on your host.