Given the importance of Observability in today’s complex systems, having a set of Observability best practices becomes critical.

This is because best practices provide guidelines for sustained, efficient development that complies with standards and regulations while offering a path to continuous improvement.

Wait, what is observability?

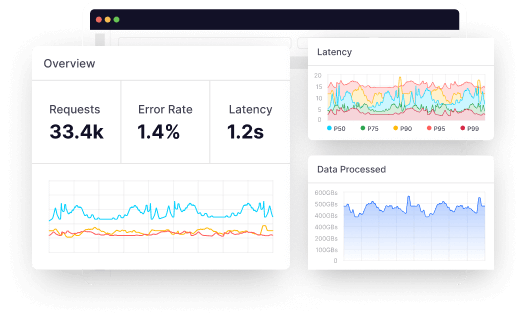

Observability is the extent to which you can understand a complex system’s internal state or condition, in real time, based only on knowledge of its external outputs. In practice, this means collecting and analyzing telemetry data such as metrics, traces, and logs to resolve issues, keep the system efficient and reliable, and turn raw data into actionable insights.

Recent studies report that companies using observability at some level have seen improvements in system uptime and reliability, real-user experience, and developer productivity, along with gains in operational efficiency and security management.

Having understood observability and its importance, let us look at 10 observability best practices every DevOps engineer should implement.

10 Observability best practices every DevOps engineer should implement

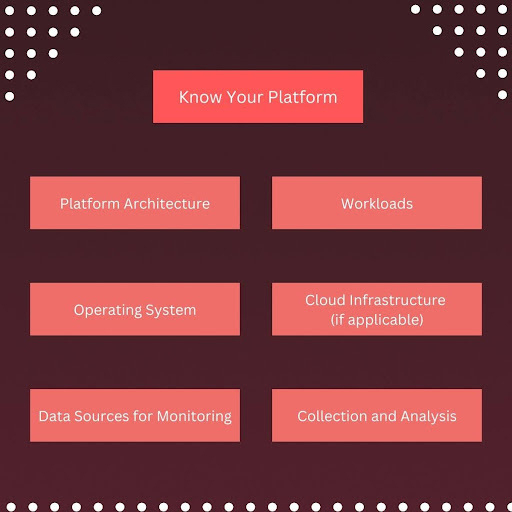

1. Know your platform

You need detailed knowledge of the underlying platform to identify all possible sources of telemetry data.

Different platforms and systems require different monitoring and observability approaches, and understanding the unique characteristics of your platform can help you optimize your observability practices.

Some factors to consider for understanding your platform are:

- Platform architecture, including the components, dependencies, and communication patterns between services.

- Workloads running on your platform, such as batch jobs, real-time services, and background tasks.

- The operating system running on your platform, including its performance characteristics, resource utilization, and limitations.

- In the case of a cloud platform, the cloud infrastructure’s monitoring and observability capabilities and limitations.

- Relevant data sources for monitoring and observability, such as logs, metrics, traces, and events, and how to collect and analyze them.
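One practical way to act on this checklist is to keep a small inventory of components, their dependencies, and the telemetry each one emits, then check it for gaps. The sketch below is a hypothetical illustration: the component names, the `Component` type, and the assumption that every component should expose logs, metrics, and traces are all placeholders to adapt to your platform.

```python
from dataclasses import dataclass, field

# Signals every component is expected to expose (an assumption for this
# sketch; adjust to what your platform actually needs).
REQUIRED_SIGNALS = {"logs", "metrics", "traces"}


@dataclass
class Component:
    """One entry in a hypothetical platform inventory."""
    name: str
    kind: str  # e.g. "service", "batch-job", "database"
    signals: set[str] = field(default_factory=set)
    depends_on: list[str] = field(default_factory=list)


def coverage_gaps(inventory: list[Component]) -> dict[str, set[str]]:
    """Return, per component, the required signals not yet being collected."""
    return {
        c.name: REQUIRED_SIGNALS - c.signals
        for c in inventory
        if REQUIRED_SIGNALS - c.signals
    }


def unknown_dependencies(inventory: list[Component]) -> set[str]:
    """Dependencies referenced by a component but missing from the inventory."""
    known = {c.name for c in inventory}
    return {d for c in inventory for d in c.depends_on if d not in known}


# Hypothetical inventory used for illustration only.
inventory = [
    Component("checkout-api", "service", {"logs", "metrics", "traces"}, ["orders-db"]),
    Component("nightly-report", "batch-job", {"logs"}, ["orders-db", "s3-export"]),
    Component("orders-db", "database", {"metrics"}),
]

print(coverage_gaps(inventory))         # which signals each component still lacks
print(unknown_dependencies(inventory))  # {'s3-export'}
```

Here the batch job and the database are flagged as missing signals, and `s3-export` shows up as a dependency nobody has inventoried yet, which is exactly the kind of blind spot this practice is meant to surface.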

In short, the better you understand your platform, the more effectively you can tailor your observability practices to it.

2. You don’t need to monitor everything, just monitor what’s important!

IT platforms generate a lot of data, and not all of it is useful. Observability systems should filter data as close to the source as possible, and at multiple levels, so they are not cluttered with excess data.

This enables faster analysis in real time.

That said, observability best practices also call for retaining data that may be unimportant from an operational perspective but valuable for business analysis; such data should not be deleted.

Monitoring selectively has the following benefits:

- Focusing only on critical metrics and events reduces the amount of noise in the monitoring data, making it easier to identify and address issues in critical areas faster, thus reducing downtime.

- DevOps teams can scale monitoring efforts more effectively by focusing resources on the most critical areas, thereby improving cost-effectiveness.
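Filtering at the source can be as simple as a filter attached to the logger that emits the data, so noise is dropped before any handler ships it. The following is a minimal sketch using Python’s standard `logging` module; the rules it applies (drop DEBUG records and health-check lines, but always keep records tagged `business_event=True`) are assumptions chosen for illustration, and `ListHandler` is a hypothetical stand-in for a real log shipper.

```python
import logging


class DropNoiseFilter(logging.Filter):
    """Drop low-value records at the source (rules are illustrative assumptions)."""

    NOISY_SUBSTRINGS = ("GET /healthz",)  # hypothetical noisy endpoint

    def filter(self, record: logging.LogRecord) -> bool:
        if getattr(record, "business_event", False):
            return True  # never drop data that matters for business analysis
        if record.levelno < logging.INFO:
            return False  # DEBUG chatter is treated as operational noise
        message = record.getMessage()
        return not any(s in message for s in self.NOISY_SUBSTRINGS)


class ListHandler(logging.Handler):
    """Collects messages in memory; stands in for a real log shipper."""

    def __init__(self) -> None:
        super().__init__()
        self.messages: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.messages.append(record.getMessage())


logger = logging.getLogger("checkout")
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addFilter(DropNoiseFilter())  # applied before any handler sees the record
shipper = ListHandler()
logger.addHandler(shipper)

logger.debug("cache miss for key=42")                           # dropped
logger.info("GET /healthz 200")                                 # dropped
logger.debug("coupon redeemed", extra={"business_event": True})  # kept
logger.error("payment gateway timeout")                         # kept
print(shipper.messages)  # ['coupon redeemed', 'payment gateway timeout']
```

Attaching the filter to the logger rather than to a downstream collector means discarded records never leave the process, which keeps both network traffic and storage costs down.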

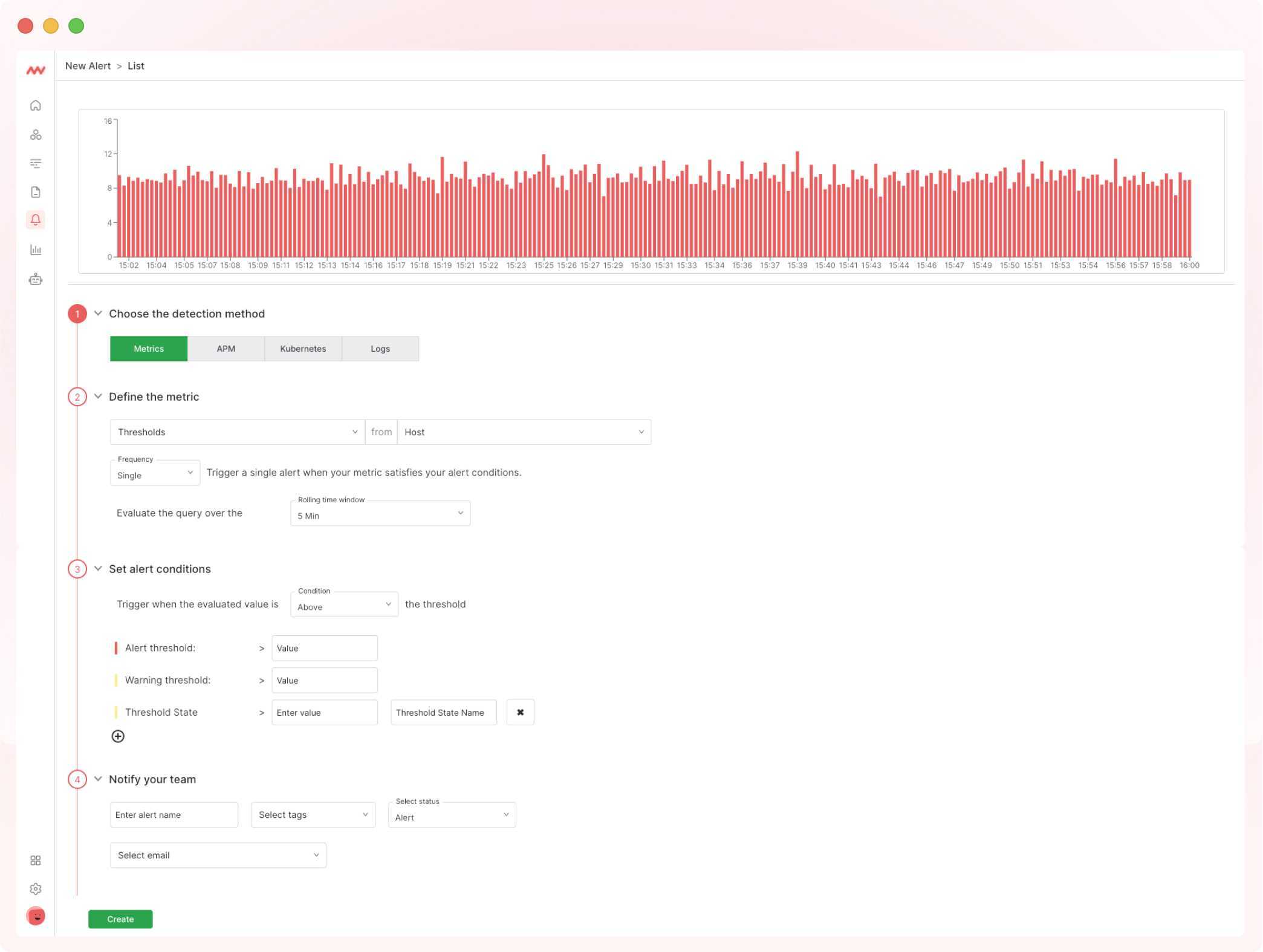

3. Set alerts only for critical events

Alerts can be configured to send notifications for a critical event, such as an application behaving outside predefined parameters.

An alerting system detects important events in the system and notifies the responsible party. It ensures developers know when something needs to be fixed, so the rest of the time they can stay focused on other tasks.

An effective observability tool like Middleware can pick up on critical early-stage problems or zero-day attacks on the platform.

Using pattern recognition, such tools help secure platforms against internal and external threats.

Developers can use self-healing infrastructure or automation to resolve non-critical issues. However, issues that are business-critical require developers to be more hands-on, tapping into data and analytics.
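The split between critical and non-critical events can be encoded directly in alert rules. Below is a hedged sketch: the metric names, thresholds, and the two destinations (`"page"` for humans, `"auto"` for self-healing automation) are hypothetical and would come from your own SLOs and tooling in practice.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    INFO = 1
    WARNING = 2
    CRITICAL = 3


@dataclass(frozen=True)
class AlertRule:
    """Fire when `metric` exceeds `threshold`."""
    metric: str
    threshold: float
    severity: Severity


# Hypothetical rules; real thresholds should come from your own SLOs.
RULES = [
    AlertRule("error_rate", 0.05, Severity.CRITICAL),
    AlertRule("p95_latency_ms", 800, Severity.WARNING),
    AlertRule("disk_used_ratio", 0.90, Severity.CRITICAL),
]


def route(sample: dict[str, float]) -> dict[str, list[str]]:
    """Page humans only for critical breaches; send the rest to automation."""
    routed: dict[str, list[str]] = {"page": [], "auto": []}
    for rule in RULES:
        value = sample.get(rule.metric)
        if value is None or value <= rule.threshold:
            continue  # metric missing or within limits: no alert
        target = "page" if rule.severity is Severity.CRITICAL else "auto"
        routed[target].append(f"{rule.metric}={value} > {rule.threshold}")
    return routed


print(route({"error_rate": 0.12, "p95_latency_ms": 950, "disk_used_ratio": 0.5}))
# {'page': ['error_rate=0.12 > 0.05'], 'auto': ['p95_latency_ms=950 > 800']}
```

Only the error-rate breach wakes someone up; the latency warning is handed to automation, and the healthy disk metric produces nothing at all.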

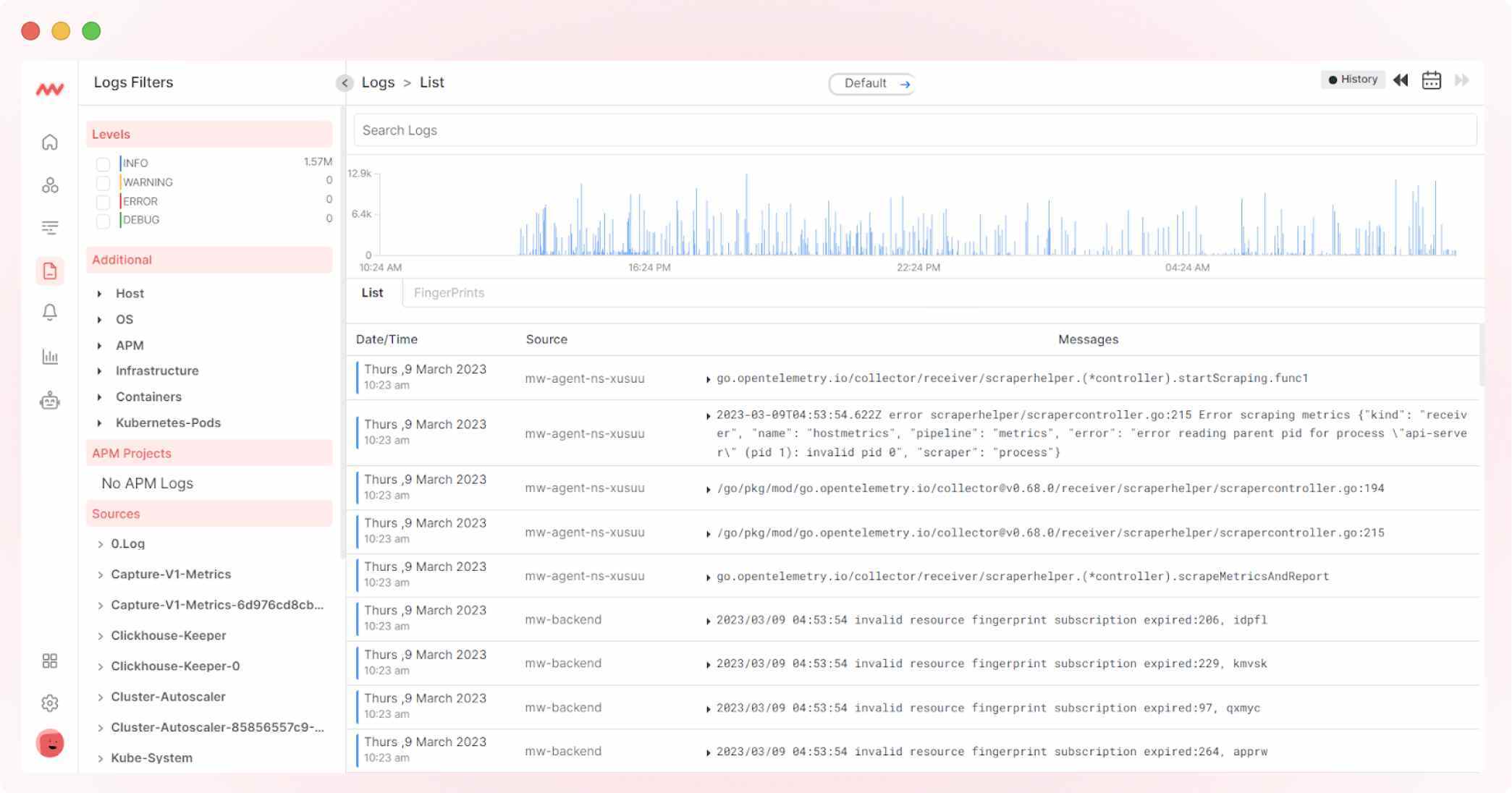

4. Create a standardized data logging format

Log data can help DevOps teams identify problems in a system and isolate their root cause.

To get the most out of this data, structure logs in a standardized format.

Structured logging represents all crucial log elements as attributes with associated values that can easily be ingested and parsed.

This allows teams to use the log management platform optimally with data visualization features that enhance the ability to recognize application or infrastructure problems and respond to them.

This becomes critical, especially when you are dealing with a large volume of log data.

We suggest making sure logging is enabled everywhere and using standard protocols (such as syslog, or SNMP for network devices) or other standardized logging mechanisms wherever possible.

Where a source cannot emit your standard format natively, you can use connectors that translate its data into that format.
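As an illustration of structured logging, here is a minimal sketch of a JSON formatter for Python’s standard `logging` module. The field names (`timestamp`, `level`, `logger`, `message`) and the convention of passing extra attributes through `extra={"context": {...}}` are assumptions for this example; the important part is choosing one schema and applying it everywhere.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON object (assumed schema)."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Promote key/value context passed via extra={"context": {...}}.
        entry.update(getattr(record, "context", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("orders")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

# Emits one JSON line with order_id and amount as top-level attributes.
logger.info("order placed", extra={"context": {"order_id": "A-1001", "amount": 42.5}})
```

Because every attribute is a named key, a log management platform can filter on `order_id` or aggregate on `level` without fragile text parsing.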

5. Store only logs that give insights into critical events

Storing only logs that provide insights about critical events is an observability best practice.

Certain logs must be managed and monitored:

- Failed login attempts can be red flags that something is wrong. Multiple login failures in a short time period could indicate an attempt to break into the system. Compliance requirements also often make it mandatory to manage and monitor them.

- Firewalls and other intrusion detection devices are an important first line of security. Although advanced attacks can circumvent many firewalls, monitoring their logs is still a must.

- When control policies are not being followed or unauthorized changes are occurring, the cause may be careless or unprofessional behavior, but it could also be something sinister. Such changes can be catastrophic and can even bring down a network.

Applications also generate a lot of logs that need to be monitored.
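The "multiple login failures in a short time period" signal can be detected with a per-user sliding window. This is a hedged sketch: the 60-second window and the threshold of five failures are assumed values, and in a real system the events would come from your authentication logs rather than direct function calls.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60  # assumed detection window
MAX_FAILURES = 5     # assumed number of failures tolerated inside the window


class FailedLoginDetector:
    """Flag a user whose failed logins exceed MAX_FAILURES within WINDOW_SECONDS."""

    def __init__(self) -> None:
        self._failures: dict[str, deque[float]] = defaultdict(deque)

    def record_failure(self, user: str, timestamp: float) -> bool:
        """Record one failure; return True if this user should raise an alert."""
        window = self._failures[user]
        window.append(timestamp)
        # Evict failures that have fallen out of the sliding window.
        while window and timestamp - window[0] > WINDOW_SECONDS:
            window.popleft()
        return len(window) > MAX_FAILURES


detector = FailedLoginDetector()
# Six failures within six seconds: only the sixth one trips the alert.
print([detector.record_failure("alice", t) for t in range(6)])
```

Spacing failures out (for example, one every 30 seconds) never fills the window, so an occasional mistyped password does not raise an alert.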

6. Ensure data can be aggregated and centralized

DevOps culture is based on collaborative, consistent, and continuous delivery, and centralized logging plays an important role in it.

Without centralized data, log management cannot be efficient in the complex, large-scale environments in which DevOps teams work.

Managing logs individually increases the workload and compromises the team’s ability to integrate and correlate data from multiple logs when troubleshooting a problem.

This goes against the grain of DevOps culture.

Centralized logging, which aggregates logs from all stages of the software delivery pipeline into a single place, gives developers and IT engineers the end-to-end visibility they need to deliver software continuously and consistently.

With centralization, logs from development and testing environments are collected in the same place as production logs, making it easier for all to view and correlate data.
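To show why centralization helps with correlation, here is a minimal sketch that merges several time-ordered log streams into one timeline and then groups events by a shared request ID. The `LogEvent` shape and the presence of a `request_id` correlation key in every source are assumptions; real pipelines usually do this inside a log management platform rather than in application code.

```python
import heapq
from collections import defaultdict
from collections.abc import Iterable
from typing import NamedTuple


class LogEvent(NamedTuple):
    timestamp: float  # seconds since epoch
    source: str       # service or environment that produced the line
    request_id: str   # correlation key shared across sources (assumed)
    message: str


def aggregate(*streams: Iterable[LogEvent]) -> list[LogEvent]:
    """Merge per-source streams (each already time-ordered) into one timeline."""
    return list(heapq.merge(*streams, key=lambda e: e.timestamp))


def correlate(events: Iterable[LogEvent]) -> dict[str, list[LogEvent]]:
    """Group the central timeline by request ID for end-to-end troubleshooting."""
    by_request: dict[str, list[LogEvent]] = defaultdict(list)
    for event in events:
        by_request[event.request_id].append(event)
    return dict(by_request)


gateway = [LogEvent(1.0, "gateway", "r1", "POST /pay"), LogEvent(3.0, "gateway", "r2", "GET /cart")]
payments = [LogEvent(2.0, "payments", "r1", "card declined")]

timeline = aggregate(gateway, payments)
for e in correlate(timeline)["r1"]:
    print(e.timestamp, e.source, e.message)
# 1.0 gateway POST /pay
# 2.0 payments card declined
```

With the logs kept in separate places, reconstructing the story of request `r1` would mean searching each source by hand; with one merged timeline it becomes a single lookup.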

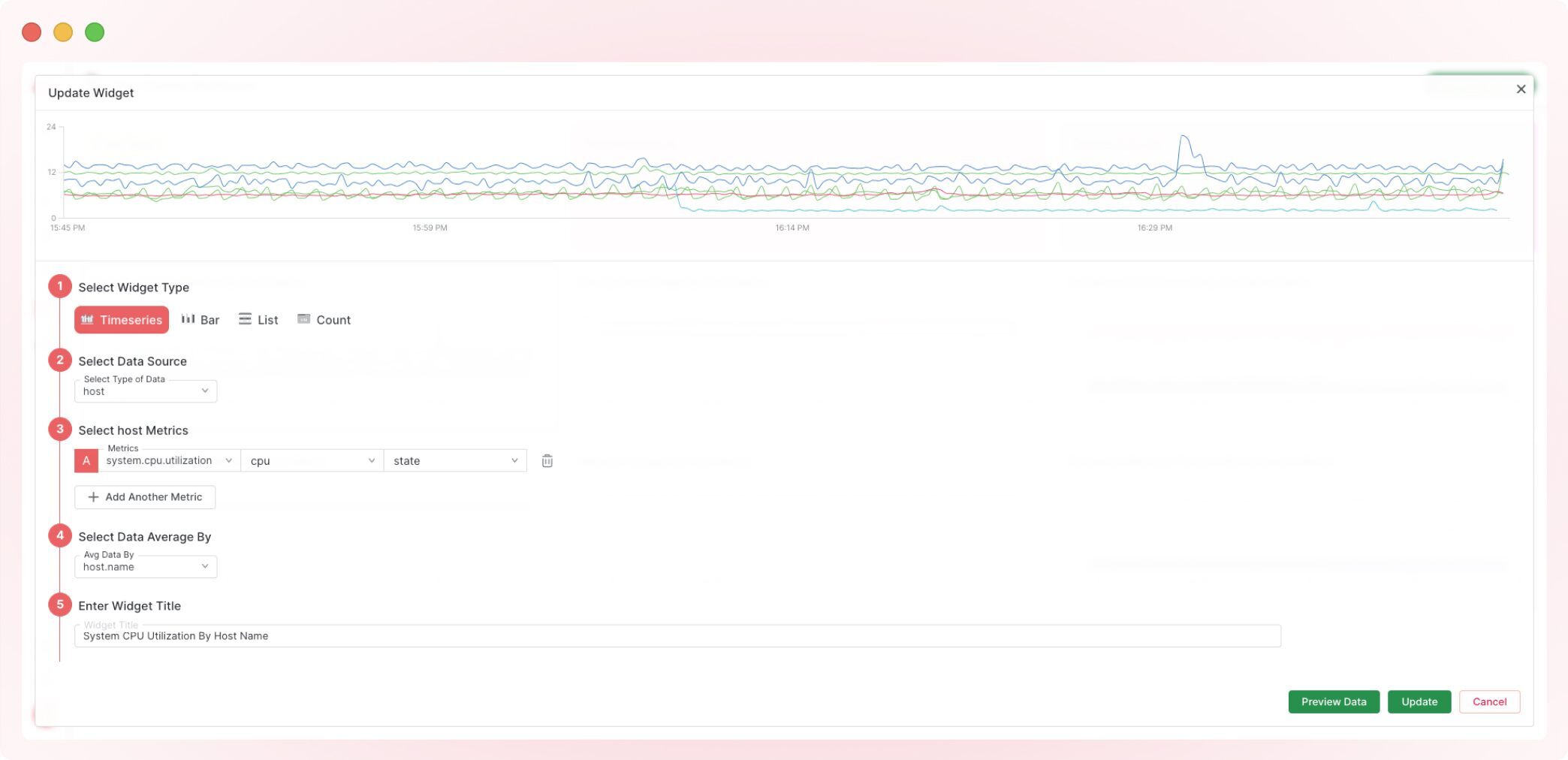

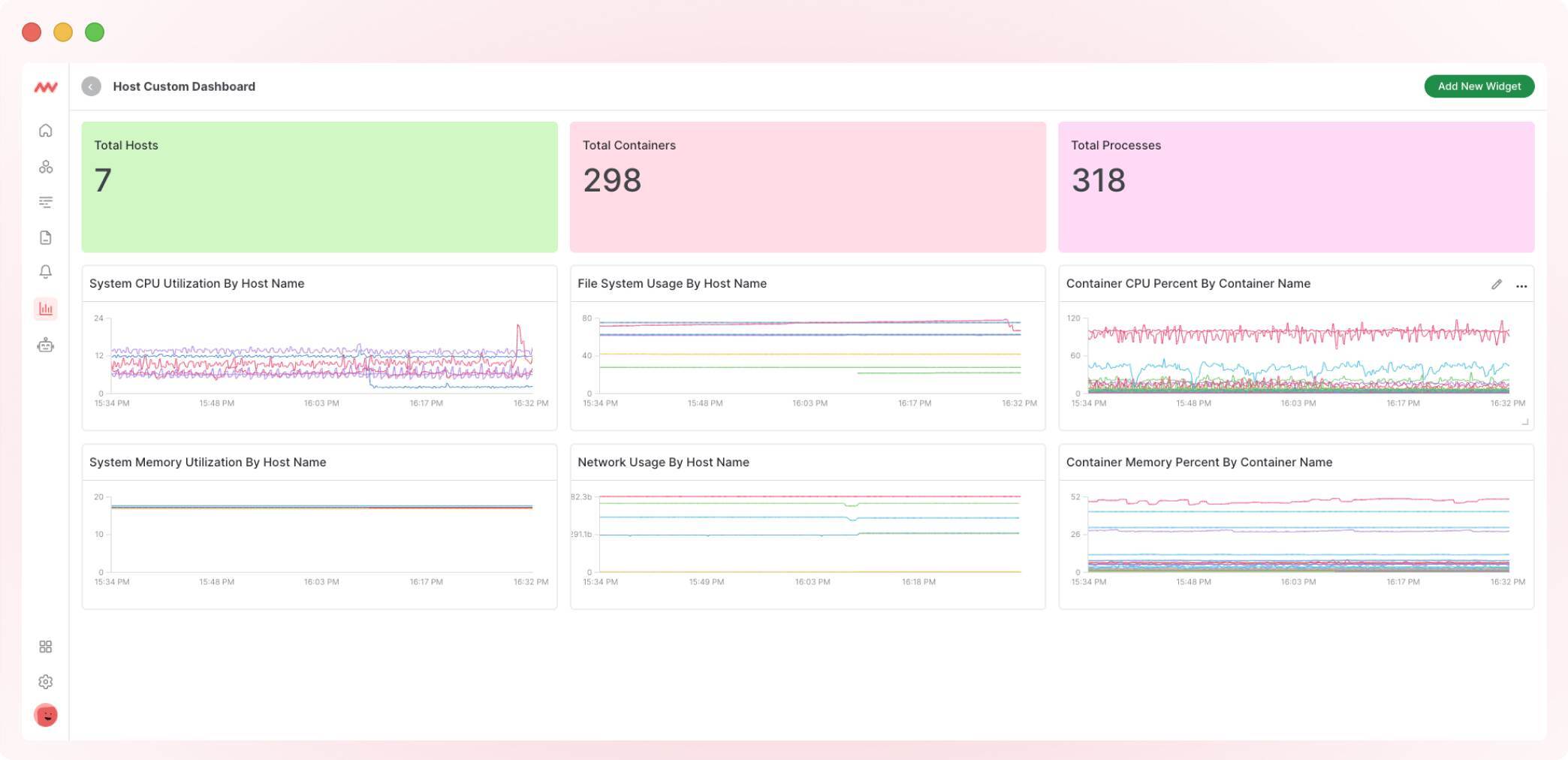

7. Don’t rely on default dashboards

Default dashboards may provide a starting point, but they are not designed to capture the unique characteristics of each system.

Custom dashboards can highlight the metrics that matter most for your specific system, provide insights into the performance of critical components, and help identify potential issues before they impact the system, making it easier for IT teams to analyze and interpret data.

With Middleware, you can create custom dashboards in just a few easy steps and under a minute.

Dashboards also have a wider audience than system administrators and IT teams: senior IT managers and business clients rely on them too.

For IT professionals, dashboards should show the root cause of problems along with trend analysis; for management, they should show the resulting business impact.

8. Leverage integrations

We recommend that DevOps teams automate their observability systems so the ecosystem is continuously monitored for issues.

Tools like the Middleware platform use AI-powered algorithms to collect and analyze data from across the entire infrastructure and spot early signs of potential problems before they escalate.

Artificial intelligence and machine learning algorithms can help incorporate automation into observability.

They let you store and process vast amounts of data and recognize patterns or insights that help you improve application efficiency.

Automation also makes it easier to scale up while reducing the risk of human error.
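Middleware’s own algorithms are not described here, so the following is only a deliberately simple stand-in for automated pattern detection: a rolling z-score that flags points far from the recent baseline. It is a statistical heuristic, not machine learning, and the window size, threshold, and latency values are assumptions for illustration.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=10, threshold=3.0):
    """Yield (index, value) for points more than `threshold` std devs from the rolling mean.

    Each point is compared only against the `window` points before it.
    """
    history = deque(maxlen=window)
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma == 0:
                is_anomaly = value != mu  # flat baseline: any change stands out
            else:
                is_anomaly = abs(value - mu) / sigma > threshold
            if is_anomaly:
                yield i, value
        history.append(value)


# Hypothetical request latencies in milliseconds with one spike.
latencies = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 450, 100]
print(list(detect_anomalies(latencies)))  # [(10, 450)]
```

A baseline like this is cheap to run continuously and makes a useful sanity check next to more sophisticated AI-driven detection.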

9. Integrate with automated remediation systems wherever possible

Observability often surfaces relatively low-level issues at the kernel or operating-system level.

System administrators routinely address such issues with tooling that fixes them automatically, for example by applying patches or allocating extra resources to a workload.

Observability software like Middleware can be integrated with this existing ecosystem to maintain an optimized environment.

Routing routine issues to automated remediation also acts as a filter: even where automation is not possible, IT teams can focus on the critical issues and fix them as a priority.
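The filtering effect of automated remediation can be sketched as a playbook lookup: known, non-critical issue types are fixed automatically, and everything else is escalated to people. The issue kinds and the `add_capacity` and `apply_patch` actions below are hypothetical placeholders for your own patching or autoscaling tooling.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Issue:
    kind: str  # e.g. "cpu_saturation", "kernel_patch_missing"
    host: str
    critical: bool = False


# Hypothetical remediation actions; real ones would call your own tooling.
def add_capacity(issue: Issue) -> str:
    return f"scaled out workload on {issue.host}"


def apply_patch(issue: Issue) -> str:
    return f"queued kernel patch for {issue.host}"


PLAYBOOK: dict[str, Callable[[Issue], str]] = {
    "cpu_saturation": add_capacity,
    "kernel_patch_missing": apply_patch,
}


def triage(issues: list[Issue]) -> tuple[list[str], list[Issue]]:
    """Auto-remediate known, non-critical issues; escalate everything else."""
    remediated: list[str] = []
    escalated: list[Issue] = []
    for issue in issues:
        action = PLAYBOOK.get(issue.kind)
        if action is not None and not issue.critical:
            remediated.append(action(issue))
        else:
            escalated.append(issue)
    return remediated, escalated


done, for_humans = triage([
    Issue("cpu_saturation", "web-1"),
    Issue("kernel_patch_missing", "db-2"),
    Issue("data_corruption", "db-2", critical=True),
])
print(done)                          # ['scaled out workload on web-1', 'queued kernel patch for db-2']
print([i.kind for i in for_humans])  # ['data_corruption']
```

Anything without a playbook entry, or flagged as critical, lands in front of the team, which is the prioritization the paragraph above describes.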

10. Feedback loops should be present and effective

Feedback loops are essentially an internal review of how teams, systems, and users function, not only in the context of observability but also in the larger DevOps context.

They are critical because they help improve development quality while keeping deliverables on time. The objective of a feedback loop is to connect the two ends of the DevOps process: development and users.

When a change happens in one, it causes a change in the other, which eventually leads to a further change in the first.

This keeps the organization agile enough to make the required corrections continually. Using a feedback loop to collect data and create a constant flow of information translates into better observability in the DevOps context.

Of course, feedback is only valuable if you act on it. That is where you close the loop: solve the problem, then tighten the loop so that fixes arrive faster.

Otherwise, with an open loop, things start failing and teams are left lost because the root cause was never found and communicated.

Bonus: Have proper instrumentation

Check whether your systems are properly instrumented; if they are not, address that first.

Instrumentation is the foundation of observability in systems. It’s like installing instruments (sensors) in your car to monitor its health. In software, instrumentation involves adding code to your application that gathers data about its internal workings. This data, called telemetry, is then sent to a collection system for analysis.

Here’s a breakdown of how instrumentation works in observability:

- Adding instrumentation: You strategically place code snippets within your application to capture specific data points. This data can include:

  - Metrics: Measurements that represent the state of your system (e.g., number of requests processed, database connection pool size).

  - Logs: Textual messages containing events and details about what’s happening in your application.

  - Traces: Records that track the flow of a request through your system, showing how different parts interact.

- Telemetry collection: The instrumented code sends the telemetry data (metrics, logs, traces) to a central collection point. This can be a dedicated observability tool or a cloud service.

- Data analysis: The collected data is then stored and analyzed. This allows you to:

  - Monitor the health and performance of your application.

  - Identify and diagnose issues quickly.

  - Gain insights into how your application is behaving under different loads.

By having the right instrumentation in place, you gain valuable visibility into your system’s inner workings. This lets you proactively identify problems and ensure your application is running smoothly.
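To make the three signal types concrete, here is a minimal, dependency-free sketch of instrumentation as a Python decorator that records a metric, a log line, and a simplified trace span for every call. In production you would more likely use an OpenTelemetry SDK and exporter; the in-memory `REQUEST_COUNT`, `LATENCIES_MS`, and `SPANS` stores here are hypothetical stand-ins for a real backend, and the spans omit trace IDs and parent links that real tracing requires.

```python
import functools
import logging
import time
import uuid
from collections import Counter, defaultdict

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("instrumentation")

# In-memory stand-ins for a metrics backend and a trace exporter.
REQUEST_COUNT: Counter = Counter()
LATENCIES_MS: dict[str, list[float]] = defaultdict(list)
SPANS: list[dict] = []


def instrumented(func):
    """Record a metric, a log line, and a simplified span for every call to `func`."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        span = {"span_id": uuid.uuid4().hex[:16], "name": func.__name__, "status": "ok"}
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        except Exception:
            span["status"] = "error"
            log.exception("%s failed", func.__name__)
            raise
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            REQUEST_COUNT[func.__name__] += 1               # metric: calls handled
            LATENCIES_MS[func.__name__].append(elapsed_ms)  # metric: latency
            span["duration_ms"] = elapsed_ms
            SPANS.append(span)                              # trace: one span per call
            log.info("%s took %.2f ms", func.__name__, elapsed_ms)  # log line
    return wrapper


@instrumented
def process_order(order_id: str) -> str:
    time.sleep(0.01)  # simulated work
    return f"processed {order_id}"


process_order("A-1001")
print(REQUEST_COUNT["process_order"], SPANS[-1]["status"])  # 1 ok
```

Keeping the instrumentation in a decorator keeps business code clean, and the work done per call (a counter increment, a list append, one log line) is small, in line with the goal of minimizing overhead.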

Here are some additional points to consider:

- OpenTelemetry: This is a vendor-neutral standard for instrumentation that allows you to write code once and work with various observability tools.

- Minimizing Overhead: Instrumentation should be implemented thoughtfully to avoid impacting application performance significantly.

Overall, instrumentation is a crucial practice in achieving observability, allowing you to see what’s happening inside your system and make data-driven decisions for optimal performance.

Conclusion

Observability has become a necessary infrastructure enabler as organizations migrate to decentralized IT platforms.

Without the capability to aggregate and analyze data from all areas of the IT platform, organizations expose themselves to problems ranging from inadequate application performance through a poor user experience to major security issues. Most importantly, they are constantly at risk of outages, which by some estimates can cost roughly $500K per hour of downtime.

Implementing the 10 observability best practices mentioned above can help organizations survive and thrive in today’s complex and dynamic environment.