Summary: Observability is a hot topic for organizations managing complex IT environments, but there’s a lot of confusion about what it really means, why it’s necessary, and what it can actually deliver. This article breaks down what observability is, what it promises, and how it can help organizations achieve their goals.

Did you know? Developers still spend approximately 50% of their time debugging. This statistic raises a critical question: why do developers continue to lose so much time to debugging?

The answer lies in the outdated debugging systems that dominate the industry. These legacy tools fail to provide developers with a clear understanding of the complex interactions between microservices, distributed systems, and other components in their IT ecosystem.

Without the ability to aggregate, correlate, and analyze performance data from applications, hardware, and networks, developers are left to navigate a sea of complexity, making maintenance and troubleshooting a daunting task.

This is where observability, a concept borrowed from control theory, comes into the picture.

So, let’s dive into what observability is, how it works, and how it benefits businesses.

What is Observability?

In simple words, observability is the ability to assess a system’s current state based on the data it produces. It provides a comprehensive understanding of a distributed system by examining its outputs, such as logs, metrics, and traces.

It is a set of practices that helps developers understand the details of distributed systems throughout their developmental and operational lifecycle.

“The proliferation of microservices and distributed systems has made it more difficult to understand real-time system behavior, which is critical for troubleshooting problems. Recently, more businesses have solved this problem with automations to monitor distributed architecture, deep dive tracking and real-time observability,”

Laduram Vishnoi, Founder and CEO of Middleware.

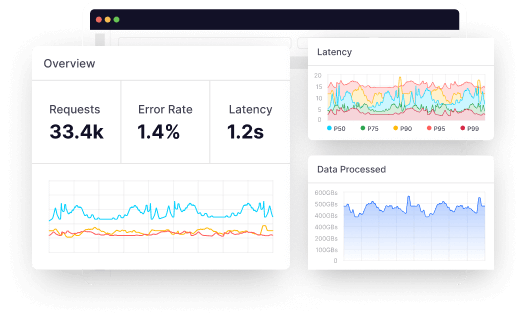

Typically, observability helps developers gain end-to-end real-time visibility of their distributed infrastructure. It enables them to monitor key performance indicators and metrics, troubleshoot and debug applications and networks, detect anomalies, identify patterns or trends, and address issues before they impact the bottom line.

This way, developers can create a resilient and scalable IT infrastructure that works in tandem with continuous integration and continuous delivery (CI/CD) pipelines while ensuring optimal health and performance.

It’s safe to say that observability has evolved from being a buzzword to an essential requirement for data-driven companies.

History of Observability

Though observability became a business catalyst in the last decade, it boasts a surprisingly long history that dates back to the 17th century, originating from an idea that had nothing to do with software development. Back in the day, engineers like Christiaan Huygens applied early control principles to understand complex systems like windmills. By taking note of external outputs, such as blade speed, they could infer the internal state and grinding efficiency.

It was not until the 1960s that observability carved out its niche in computer science. Even though computers at the time were relatively basic compared to today’s systems, they were considered complex. As computers became more intricate, the need to monitor their health and performance became abundantly clear. The rise of the Internet two decades later added to the increasing complexity, paving the way for the development of siloed monitoring tools that focused primarily on servers, network traffic, and basic application health.

In the early 2000s, application performance monitoring gained steam, with vendors like AppDynamics and Dynatrace leading the way with tools that provided deep insights into application behavior and helped developers identify performance bottlenecks.

The cloud revolution of the 2010s further transformed observability. While cloud providers like IBM, AWS, and Microsoft offered built-in tools that allowed developers to monitor various aspects of the cloud, companies like Datadog delivered unified platforms to monitor the holy trinity of observability: metrics, logs, and traces. In an effort to expedite adoption and ensure accessibility, open-source solutions like Prometheus also entered the market.

“Each decade has brought a sea change in how observability is expected to function. The last three decades have seen transformation after transformation — from on-premise to cloud to cloud-native. With each generation has come new problems to solve, opening the door for new companies to form.”

Laduram Vishnoi, Founder and CEO of Middleware.

The best observability examples

Today, observability is no longer a niche practice. Here are six companies known for their cutting-edge observability practices:

- Netflix

Apart from being a global leader in streaming services, Netflix is a champion of microservices architecture. They built Chaos Monkey, a tool that intentionally injects failures to identify weaknesses and ensure service resilience. Additionally, they use open-source tools like Prometheus for metrics collection and Grafana for data visualization.

- Facebook by Meta

With its massive user base and ever-evolving applications, Facebook prioritizes performance and observability. The social networking platform uses a combination of custom-built tools and open-source solutions like Prometheus and Zipkin for distributed tracing.

- Uber

Uber relies heavily on microservices and real-time data analysis. Their observability approach focuses on tools like Datadog and Jaeger for tracing requests across microservices, pinpointing issues within individual services without impacting the entire system. Additionally, they monitor data pipelines using tools like Prometheus to ensure data quality for real-time decision-making.

- Airbnb

Much like Uber, Airbnb utilizes observability for managing bookings and guest experiences. They use tools like Datadog and Honeycomb for comprehensive log aggregation and analysis, allowing them to identify issues and optimize guest experiences.

- Spotify

With a vast music library and millions of users, Spotify utilizes observability for performance optimization and a seamless streaming experience. They use a combination of open-source tools like Prometheus and Grafana for infrastructure monitoring, alongside custom-built solutions for application-specific requirements.

- Slack

Slack uses observability to identify and resolve issues quickly, minimizing downtime for real-time communication. They use tools like Datadog for real-time monitoring of their infrastructure and applications, allowing them to detect and address issues proactively.

How does observability work?

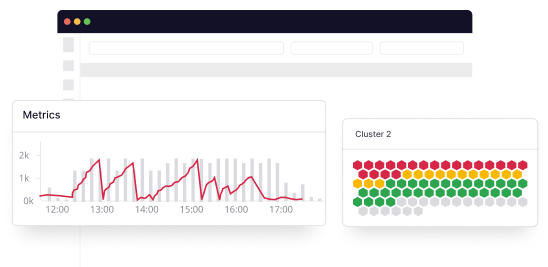

Observability operates on three pillars: logs, metrics, and traces. By collecting and analyzing these elements, you can bridge the gap between understanding ‘what’ is happening within your cloud infrastructure or applications and ‘why’ it’s happening.

With this insight, engineers can quickly spot and resolve problems in real-time. While methods may differ across platforms, these telemetry data points remain constant.

Logs

Logs are records of each individual event that happens within an application during a particular period, with a timestamp to indicate when the event occurred. They help reveal unusual behaviors of components in a microservices architecture.

- Plain text: Common and unstructured.

- Structured: Formatted in JSON.

- Binary: Used for replication, recovery, and system journaling.

Cloud-native components emit these log types, leading to potential noise. Observability transforms this data into actionable information.
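To make the difference between plain-text and structured logs concrete, here is a minimal Python sketch that uses only the standard `logging` module to emit one JSON object per event. The `JsonFormatter` class, the `checkout` service name, and the `order_id` field are illustrative assumptions, not part of any particular observability platform.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "service": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via `extra=` become attributes on the record.
        # "order_id" is a hypothetical field used only for this example.
        order_id = getattr(record, "order_id", None)
        if order_id is not None:
            payload["order_id"] = order_id
        return json.dumps(payload)


def build_logger(name: str) -> logging.Logger:
    """Create a logger that writes structured JSON lines to stderr."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


logger = build_logger("checkout")
logger.info("payment declined", extra={"order_id": "A-1001"})
```

Because every event carries the same keys, a log pipeline can filter on `level` or `order_id` without parsing free text.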

Metrics

Metrics are numerical values describing service or component behavior over time. Each data point includes a timestamp, a name, and a value, which makes metrics easy to query and efficient to store.

Metrics offer a comprehensive overview of system health and performance across your infrastructure.

However, metrics have limitations. Though they indicate when a threshold has been breached, they do not shed light on the underlying causes.
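As a rough illustration of what a metric looks like in practice, the sketch below models a data point as a name, a timestamp, and a value, then flags the points that cross a threshold. The `MetricPoint` type, the metric name, and the 500 ms threshold are assumptions chosen for the example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class MetricPoint:
    name: str         # e.g. "http.request.duration_ms"
    timestamp: float  # Unix time in seconds
    value: float


def breaches(points: list[MetricPoint], threshold: float) -> list[MetricPoint]:
    """Return the points whose value exceeds the threshold."""
    return [p for p in points if p.value > threshold]


points = [
    MetricPoint("http.request.duration_ms", 1_700_000_000, 120.0),
    MetricPoint("http.request.duration_ms", 1_700_000_060, 135.0),
    MetricPoint("http.request.duration_ms", 1_700_000_120, 910.0),
]

slow = breaches(points, threshold=500.0)
average = mean(p.value for p in points)
print(f"average={average:.1f} ms, breaches={len(slow)}")
```

The check tells you that the third sample was slow, but not why. That gap is what logs and traces are meant to fill.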

Traces

Traces complement logs and metrics by tracing a request’s lifecycle in a distributed system.

They help analyze request flows and operations encoded with microservices data, identify services causing issues, ensure quick resolutions, and suggest areas for improvement.
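Here is a small sketch of how trace data can point to the service causing a delay. It assumes a simplified span model (the `Span` fields and service names are hypothetical) and assumes child spans run one after another inside their parent, so a span’s own time is its duration minus that of its direct children.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    service: str
    start_ms: int
    end_ms: int

    @property
    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


def self_time_by_service(spans: list[Span]) -> dict[str, int]:
    """Sum the time each service spent in its own spans, excluding direct children."""
    child_time: dict[str, int] = {}
    for span in spans:
        if span.parent_id is not None:
            child_time[span.parent_id] = (
                child_time.get(span.parent_id, 0) + span.duration_ms
            )
    totals: dict[str, int] = {}
    for span in spans:
        own = span.duration_ms - child_time.get(span.span_id, 0)
        totals[span.service] = totals.get(span.service, 0) + own
    return totals


# One request passing through three services.
spans = [
    Span("t1", "a", None, "api-gateway", 0, 480),
    Span("t1", "b", "a", "checkout", 20, 460),
    Span("t1", "c", "b", "payments", 40, 440),
]

totals = self_time_by_service(spans)
slowest = max(totals, key=totals.get)
print(totals, slowest)
```

In this made-up request, the gateway and checkout service each add 40 ms of their own, while `payments` accounts for 400 ms, so that is where an engineer would look first.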

Unified observability

Successful observability stems from integrating logs, metrics, and traces into a holistic solution. Rather than employing separate tools, unifying these pillars helps developers gain a better understanding of issues and their root causes.

According to recent studies, companies with unified telemetry data can expect a shorter mean time to detect (MTTD) and mean time to resolve (MTTR), along with fewer high-business-impact outages, than those with siloed data.
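For readers who want to see how these two numbers are derived, the following sketch computes them from incident timestamps. It takes MTTD as the average time from the start of an incident to its detection, and MTTR as the average time from start to resolution, which is one of several definitions in use. The `Incident` type and the sample times are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Incident:
    started: datetime
    detected: datetime
    resolved: datetime


def mean_delta(deltas: list[timedelta]) -> timedelta:
    """Average a non-empty list of durations."""
    return sum(deltas, timedelta()) / len(deltas)


def mttd(incidents: list[Incident]) -> timedelta:
    """Mean time from incident start to detection."""
    return mean_delta([i.detected - i.started for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time from incident start to resolution (assumed definition)."""
    return mean_delta([i.resolved - i.started for i in incidents])


t0 = datetime(2025, 1, 6, 9, 0)
incidents = [
    Incident(t0, t0 + timedelta(minutes=4), t0 + timedelta(minutes=30)),
    Incident(t0, t0 + timedelta(minutes=10), t0 + timedelta(minutes=50)),
]

print("MTTD:", mttd(incidents))
print("MTTR:", mttr(incidents))
```

Tracking both values before and after consolidating telemetry is a simple way to check whether a unified setup is actually paying off.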

How is observability different from monitoring?

Cloud monitoring solutions employ dashboards to exhibit performance indicators for IT teams to identify and resolve issues. However, they merely point out performance issues or help developers gain visibility into what’s happening without any solid explanation as to why it’s happening.

As a result, traditional monitoring tools struggle to oversee complex cloud-native applications and containerized setups, which are also prone to security threats.

In contrast, observability uses telemetry data such as logs, traces, and metrics across your infrastructure. Such platforms provide useful information about the system’s health at the first signs of an error, alerting DevOps engineers about potential problems before they become serious.

Observability grants access to data encompassing system speed, connectivity, downtime, bottlenecks, and more. This equips teams to curtail response times and ensure optimal system performance.

As per recent reports, nearly 64% of organizations using observability tools have experienced mean time to resolve (MTTR) improvements of 25% or more.

Why is observability important for business?

In the last decade, the emergence of cloud computing and microservices has made applications more complex, distributed, and dynamic. In fact, over 90% of large enterprises have adopted a multi-cloud infrastructure.

While the shift to scalable systems has benefited businesses, monitoring and managing them has become very challenging. The main challenges are:

- Companies are finding that the tools they previously relied on no longer fit the job.

- Legacy monitoring systems lack visibility, create siloed environments, and hinder process management and automation efforts.

- Vendor lock-in: Switching from one vendor to another has traditionally been tedious. With the vendor-agnostic data formats used in observability, importing data into a new platform is much easier.

- Legacy platforms are expensive; monitoring sometimes costs more than the cloud hosting itself.

It is no surprise that DevOps and SRE teams are turning to observability to understand system behavior better and improve troubleshooting and overall performance. In fact, the growing dependence on observability platforms is expected to push the market to USD 4.1 billion by 2028.

The benefits of observability

According to the Observability Forecast 2023, organizations are reaping a wide range of benefits from observability practices:

Improved system uptime and reliability

Observability tools offer developers real-time insights into system health and behavior, empowering them to pinpoint and resolve issues before they can cause an outage. This leads to higher uptime and a more robust system overall. As of 2025, 50% of surveyed respondents had reported increased usage of OpenTelemetry and Prometheus for comprehensive monitoring and troubleshooting. Unified observability platforms help maintain uninterrupted system performance by addressing potential faults before they escalate, resulting in improved mean time between failures (MTBF) and mean time to recovery (MTTR).

See how Middleware helped Generation Esports slash observability costs & improve MTTR by 75%!

Increases operational efficiency

With real-time insights into system performance and behavior, better operational efficiency is an absolute given. If done right, developers can automate repetitive tasks, optimize resource consumption and operations, and streamline incident management processes. This leads to reduced operational overhead, improved resource utilization, and enhanced collaboration between DevOps teams.

Improves security vulnerability management

Beyond DevOps, observability tools are extremely beneficial for security and DevSecOps teams as they allow them to track and analyze security breaches or vulnerabilities in real time and resolve them. This ensures a secure application environment, reduces the risk of data breaches, and enables proactive compliance with regulatory requirements. Observability tools also facilitate the identification of potential security threats, enabling teams to take corrective action before attacks occur.

Enhanced Fault Detection and Prevention

Through preventive observability, AI-based platforms can predict potential issues in real time. For instance, a 400-millisecond span in system traces, combined with profiling data, can reveal the precise code causing the bottleneck and its resource consumption, enabling “surgical” optimization of system performance. This approach has been shown to significantly reduce operational bottlenecks in critical real-time systems, improve fault detection accuracy, and minimize false positives.

Unified Insights Across Data Sources

Consolidating logs, metrics, and traces through platforms reduces the MTTR by up to 50%, significantly boosting operational speed and team efficiency. Approximately 13% of organizations are already using profiling tools in production, with growing demand for unified, streamlined observability systems. This unified approach enables teams to gain a comprehensive understanding of system behavior, identify causal relationships between different data sources, and make data-driven decisions.

Operational Cost Optimization

Continuous monitoring using observability tools facilitates targeted cost optimization in cloud operations. For cloud-heavy organizations, reducing metric cardinality through tools such as Middleware can lead to as much as a 10X reduction in monitoring costs. Observability also enables teams to monitor infrastructure economics effectively, implementing FinOps strategies across hybrid and multi-cloud environments, and optimizing resource utilization to reduce waste and minimize costs.

Performance Optimization for AI Systems

Observability tools track AI resource usage and performance intricately, ensuring efficiency. Around 18% of surveyed users consider AI/ML capabilities crucial for observability solutions. These capabilities support AI-driven models by accelerating root cause analysis and providing deeper operational insights. This ensures that AI performance is consistently aligned with business goals while reducing costs, improving model accuracy, and enhancing overall AI efficiency.

To address the growing demand for AI observability, Middleware introduced LLM Observability, a solution that provides real-time monitoring, troubleshooting, and optimization for LLM-powered applications. This enables organizations to proactively address performance issues, detect biases, and improve decision-making. With comprehensive tracing, customizable metrics, and pre-built dashboards, LLM Observability offers detailed insights into LLM performance. Additionally, its integration with popular LLM providers and frameworks streamlines monitoring and troubleshooting, ensuring optimal performance and responsiveness for AI systems.

Proactive Compliance and Security Measures

Observability platforms help organizations adhere to regulations like the EU’s Digital Operational Resilience Act (DORA) and the U.S. Federal Reserve Regulation HH. Observability-integrated systems automate compliance tracking, reducing audit workloads by replacing periodic checks with continuous monitoring, while maintaining a proactive defense against security threats. This enables organizations to demonstrate compliance, reduce regulatory risks, and improve their overall security posture.

Enhanced ROI on IT Investments

Organizations opting for unified observability platforms experience optimized resource utilization and increased return on investment. Consolidation strategies were seen in 2024 with large acquisitions – for example, Cisco acquiring Splunk for $28 billion – pointing to a trend of fewer, more capable platforms to meet demands effectively. This enables organizations to maximize their IT investments, reduce costs, and improve overall business outcomes.

Scalability in Cloud-Native Environments

Cloud-native infrastructure monitoring is simplified through tools like eBPF and OpenTelemetry. Nearly 25% of surveyed engineers identified the operational role of platform engineering within scalability as vital, with eBPF becoming foundational to modern observability frameworks. This enables organizations to efficiently monitor and manage cloud-native applications, ensure scalable infrastructure, and support business growth.

Improved Sustainability in IT

Observability tools monitor energy efficiencies better, particularly for cloud and AI-heavy operations. Leveraging “green coding” approaches, organizations optimize energy usage significantly, saving on operational costs while meeting global sustainability directives like the EU’s Green Deal. Observability has become essential for organizations striving to reduce inefficiencies in energy-intensive workloads and support environmental goals.

Reduced Data Overload

Streamlining telemetry data in observability pipelines allows a focused analysis of only the most relevant metrics. Observability enables up to 30–50% reductions in metric cardinality, eliminating unnecessary complexity in system monitoring while maintaining precision. This leads to improved signal-to-noise ratios, reduced data noise, and enhanced analytics capabilities.
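To show what reducing metric cardinality means, the sketch below counts the distinct label combinations (each one is a separate time series) before and after dropping a high-cardinality label. The label names and the sample data are assumptions made up for this example, and real pipelines usually do this in a collector or agent rather than in application code.

```python
def series_count(samples: list[dict[str, str]]) -> int:
    """Count distinct label combinations, i.e. distinct time series."""
    return len({tuple(sorted(labels.items())) for labels in samples})


def drop_labels(
    samples: list[dict[str, str]], to_drop: set[str]
) -> list[dict[str, str]]:
    """Return copies of the samples without the given label keys."""
    return [
        {key: value for key, value in labels.items() if key not in to_drop}
        for labels in samples
    ]


# Label sets attached to a hypothetical request counter.
samples = [
    {"service": "checkout", "status": "200", "user_id": "u1"},
    {"service": "checkout", "status": "200", "user_id": "u2"},
    {"service": "checkout", "status": "500", "user_id": "u3"},
    {"service": "search", "status": "200", "user_id": "u1"},
]

before = series_count(samples)                           # 4 series
after = series_count(drop_labels(samples, {"user_id"}))  # 3 series
print(f"series before={before}, after={after}")
```

With only four samples the saving looks small, but a per-user label on a service with millions of users multiplies the series count by millions, which is where the cost comes from.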

Security Resilience

Observability in cybersecurity enhances the detection of sophisticated threats while streamlining threat mitigation workflows. A human-in-the-loop approach ensures accountability, with observability tools recommending actionable measures in alignment with compliance and ethical standards. This approach supports robust organizational defense mechanisms, reduces the risk of data breaches, and enables proactive compliance with regulatory requirements.

Enhances Real-User Experience

Observability tools play a vital role in enhancing customer experiences by ensuring seamless interactions with web and mobile applications. With capabilities like real user monitoring (RUM), developers can gain comprehensive user journey visibility, identifying and troubleshooting issues concerning front-end performance and user actions. This enables them to correlate issues, make data-driven decisions, and optimize user experiences, resulting in improved user engagement, retention, and overall business success.

Proactive observability takes this a step further by enabling organizations to anticipate and prepare for high-traffic events, such as holiday surges. By scaling up resources and optimizing load management, organizations can reduce the risk of downtimes, ensuring smoother end-user interactions, higher customer satisfaction rates, and improved brand loyalty.

For instance, Hotplate, a popular food delivery platform, leveraged Middleware’s observability solution to ensure seamless customer experiences during peak hours. By gaining real-time visibility into their application’s performance, Hotplate’s team was able to identify and resolve issues quickly, resulting in a 90% reduction in errors and a significant improvement in customer satisfaction. With Middleware’s observability solution, Hotplate was able to deliver fast, reliable, and secure food delivery experiences to its customers, even during the busiest times.

Improves Developer Productivity and Satisfaction

“Developers spend nearly 50% of their time and effort on debugging. Observability tools have the potential to bring that down to 10%, allowing developers to focus on more critical areas.”

Laduram Vishnoi, Founder and CEO of Middleware.

Observability tools don’t just offer comprehensive visibility into distributed applications; they render actionable insights that developers can actually use to identify and fix bugs, optimize code, and enhance overall productivity. By providing developers with the right insights at the right time, observability tools enable them to work more efficiently, reduce manual effort, and focus on higher-value tasks that drive business innovation and growth.

“I don’t want my developers to stay up all night to try to fix issues. That’s a waste of everyone’s time. Middleware helped us become faster. It saves at least one hour of my time every day, which I can dedicate to something else.”

Akshat Gupta, Trademarkia.

Open standards such as OpenTelemetry reduce overhead and increase programmer focus, with the adoption rate of tools like these growing significantly. Standardized instrumentation eliminates the need for custom solutions, allowing more than 50% of users to benefit from faster development cycles and enhanced system monitoring capabilities. This leads to improved code quality, reduced technical debt, and increased developer satisfaction.

The real advantage of using observability?

These were just the tip of the iceberg. Companies using full-stack observability have seen several other advantages:

- Nearly 35.7% experienced MTTR and MTTD improvements.

- Almost half the companies using full-stack observability were able to lower their downtime costs to less than $250k per hour.

- More than 50% of companies were able to address outages in 30 minutes or less.

Additionally, companies with full-stack observability or mature observability practices have gained high ROIs. In fact, 71% of organizations see observability as a key enabler to achieving core business objectives. As of 2025, the median annual ROI for observability stands at 100%, with an average return of $500,000.

How can Observability benefit DevOps and engineers?

Observability is so much more than data collection. Access to logs, metrics, and traces marks just the beginning. True observability comes alive when telemetry data improves end-user experience and business outcomes.

Open-source solutions like OpenTelemetry set standards for cloud-native application observability, providing a holistic understanding of application health across diverse environments.

Real-user monitoring offers real-time insight into user experiences by detailing request journeys, including interactions with various services. Whether it relies on synthetic checks or recorded sessions, this monitoring helps keep an eye on APIs, third-party services, browser errors, user demographics, and application performance.

With the ability to visualize system health and request journeys, IT, DevSecOps, and SRE teams can quickly troubleshoot potential issues and recover from failures.

Can AI make Observability better?

Artificial intelligence is neither a silver bullet nor snake oil! Though AI has the power to take things up a notch, its promise is often overshadowed by exaggerated claims and misconceptions. However, when applied to observability, AI can truly transform the way organizations approach monitoring, troubleshooting, and optimizing their systems.

One pressing pain point for many organizations is the time-consuming process of debugging issues. Sifting through vast amounts of data to identify root causes can be costly and detrimental to business operations. This is where AI-enhanced observability comes in – automating and streamlining the debugging process, saving developers nearly half of their time.

By blending AIOps and Observability, organizations can optimize real user monitoring and automate the analysis of vast data streams. This allows teams to maximize their overall efficiency and automate critical tasks like anomaly detection, log grouping, and root cause analysis. AI-driven anomaly detection capabilities enable the identification of unknown unknowns, detecting unusual patterns of behavior not seen before, and allowing for timely investigation and remediation.
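Anomaly detection does not have to start with a large model. As a deliberately simple statistical stand-in for what AI-driven platforms do, the sketch below flags a value when it deviates from the recent rolling window by more than a set number of standard deviations. The function name, window size, threshold, and latency figures are all assumptions for illustration.

```python
from statistics import mean, stdev


def zscore_anomalies(
    values: list[float], window: int = 5, threshold: float = 3.0
) -> list[int]:
    """Return indexes whose value is far from the mean of the preceding window."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat window gives no scale to compare against; skip it.
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Request latencies in milliseconds, with one obvious spike.
latencies = [100, 102, 98, 101, 99, 100, 103, 450, 101, 99]
flagged = zscore_anomalies(latencies)
print("anomalous indexes:", flagged)
```

Note that no fixed latency limit was configured here. The baseline is learned from recent data, which is the basic idea behind catching "unknown unknowns"; production systems layer seasonality handling and more robust models on top.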

The integration of Generative AI into observability simplifies the complexities of accessing critical insights. AI models can pinpoint specific telemetry data that may indicate issues, enabling proactive remediation. This level of automation and foresight is redefining the future of observability and reliability.

Top 6 observability best practices

There is no doubt that observability offers immense value. However, it’s important to understand that most available tools lack business context.

On top of that, several organizations look at technology and business as two separate disciplines, hindering their overall ability to maximize their use of observability. The situation highlights the need for a defined set of best practices.

- Unified telemetry data: Consolidate logs, metrics, and traces into centralized hubs for a comprehensive overview of system performance.

- Metrics relevance: Identify and monitor important metrics that are aligned with organizational goals.

- Alert configuration: Set benchmarks for those metrics and automate alerts to ensure quick issue identification and resolution.

- AI and machine learning: Leverage machine learning algorithms to detect anomalies and predict potential problems.

- Cross-functional collaboration: Foster collaboration among development, operational, and other business units to ensure transparency and overall performance.

- Continuous enhancement: Regularly assess and improve observability strategies to align with evolving business needs and emerging technologies.

Finding the right observability tool

Selecting the right observability platform can be difficult. You must consider capabilities, data volume, transparency, corporate goals, and cost.

Here are some points worth considering:

User-friendly interface

Dashboards present system health and errors, aiding comprehension at various system levels. A user-friendly solution is crucial for engaging stakeholders and integrating smoothly into existing workflows.

Real-time data

Accessing real-time data is vital for effective decision-making, as outdated data complicates actions. Utilizing current event-handling methods and APIs ensures accurate insights.

Open-source compatibility

Prioritize observability tools using open-source agents like OpenTelemetry. These agents reduce resource consumption, enhance security, and simplify configuration compared to in-house solutions.

Easy deployment

Choose an observability platform that can quickly be deployed without stopping daily activities.

Integration-ready across tech stacks

The tools must be compatible with your technology stack, including frameworks, languages, containers, and messaging systems.

Clear business value

Benchmark observability tools against key performance indicators (KPIs) such as deployment time, system stability, and customer satisfaction.

AI-powered capabilities

AI-driven observability helps reduce routine tasks, allowing engineers to focus on analysis and prediction.

Top 5 observability platforms

You can easily employ these observability platforms once you have a clear idea of your organizational goals and use cases. Here are the leading five options:

Middleware

Middleware is a full-stack cloud observability platform that empowers developers and organizations to monitor, optimize, and streamline their applications and infrastructure in real-time. By consolidating metrics, logs, traces, and events into a single platform, users can effortlessly resolve issues, enhance operational efficiency, minimize downtime, and reduce observability costs.

Middleware provides comprehensive observability capabilities, including infrastructure, log, application performance, database, synthetic, serverless, container, and real user monitoring.

With its scalable architecture and extensive integrations, it helps organizations optimize their technology stack and improve efficiency. Many businesses have seen significant benefits, including a 10x reduction in observability costs and a nearly 75% improvement in operational efficiency.

“Middleware has proven to be a more cost-effective and user-friendly alternative to New Relic, enabling us to capture comprehensive telemetry across our platform. This improved our operational efficiency, service delivery, and accelerated incident root cause analysis.”

John D’Emic, Co-Founder and CTO at Revenium.

Splunk

Splunk is an advanced analytics platform powered by machine learning for predictive real-time performance monitoring and IT management. It excels in event detection, response, and resolution.

Datadog

Datadog is designed to help IT, development, and operations teams gain insights from a variety of applications, tools, and services. This cloud monitoring solution provides useful information to companies of all sizes and sectors.

Dynatrace

Dynatrace provides both cloud-based and on-premises solutions with AI-assisted predictive alerts and self-learning APM. It is easy to use and offers various products that render monthly reports about application performance and service-level agreements.

Observe, Inc.

Observe is a SaaS tool that provides visibility into system performance. Its dashboard displays the most important application issues and overall system health. It is highly scalable and uses open-source agents to gather and process data, which simplifies and speeds up setup.

Observability challenges in 2025

Here’s an interesting question: if observability provides so many advantages, then what’s stopping organizations from going all in?

Cost: In 2023, nearly 80% of companies experienced pricing or billing issues with an observability vendor.

Data overload: The sheer volume, speed, and diversity of data and alerts can lead to valuable information surrounded by noise. This fosters alert fatigue and can increase costs.

“Excessive data collection has led to inflated costs without real value. Customizable observability platforms now enable companies to flag and filter unnecessary data, reducing expenses without sacrificing insights. By implementing comprehensive data processing with compression and indexing, companies can significantly reduce data size, leading to cost savings. This approach is expected to save companies 60-80% on observability costs, shifting from exhaustive data collection to efficient, targeted monitoring.”

Sam Suthar, Founding Director at Middleware.

Team segregation: Teams in infrastructure, development, operations, and business often work in silos. This can lead to communication gaps and prevent the flow of information within the organization.

Causation clarity: Pinpointing actions, features, applications, and experiences that drive business impact is hard. Companies need to connect correlations to causations regardless of how great the observability platform is.

The future of observability

As 2025 unfolds, the future of observability holds exciting possibilities.

In the days to come, the industry will see a major shift, moving away from legacy monitoring to practices that are built for digital environments. Full-stack observability tops this list, with nearly 82% of companies gearing up to adopt 17 capabilities through 2026.

The idea of tapping natural language and Large Language Models (LLMs) to build more user-friendly interfaces is also gaining steam. Furthermore, industry players are upping the ante by tapping into AI to offer unified systems of records, end-to-end visibility, and high scalability. They promise to democratize observability, deliver real-time insights into operations, reduce downtime, improve user experiences, and ensure customer satisfaction.

On the other hand, the proliferation of AI-generated code will drive the need for disaggregated observability approaches, allowing organizations to manage the complexities of AI-driven systems.

Additionally, the commoditization of observability stacks will give customers more choice, as companies demand greater control over their data and prioritize cost-efficient, customizable observability solutions. Middleware is leading this change with its AI-powered observability solutions that can unify telemetry data into a single location and deliver actionable insights in real time, while giving you complete control over your data!

Schedule a free demo with one of our experts today!

FAQs

What is observability?

Observability entails gauging a system’s current condition through the data it generates, including logs, metrics, and traces. Observability involves deducing internal states by examining output over a defined period. This is achieved by leveraging telemetry data from instrumented endpoints and services within distributed systems.

Why is observability important?

Observability is essential because it provides greater control and complete visibility over complex distributed systems. Simple systems are easier to manage because they have fewer moving parts.

However, in complex distributed systems, monitoring is necessary for CPU, logs, traces, memory, databases, and networking conditions. This monitoring helps in understanding these systems and applying appropriate solutions to problems.

What are the three pillars of observability?

The three pillars of observability are logs, metrics, and traces.

- Logs: Logs provide essential insights into raw system information, helping to determine what occurred within your system. An event log is a time-stamped, immutable record of distinct events over a specific period.

- Metrics: Metrics are numerical representations of data that can reveal the overall behavior of a service or component over time. Metrics include properties such as name, value, label, and timestamp, which convey information about service level agreements (SLAs), service level objectives (SLOs), and service level indicators (SLIs).

- Traces: Traces illustrate the complete path of a request or action through a distributed system’s nodes. Traces aid in profiling and monitoring systems, particularly containerized applications, serverless setups, and microservices architectures.

How do I implement observability?

To achieve observability, your systems and applications need proper tools to gather the necessary telemetry data. You can build your own tools, use open-source software, or adopt a commercial observability solution to create an observable system. Typically, implementing observability involves four key components: logs, traces, metrics, and events.
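As a very rough idea of what instrumentation looks like, here is a standard-library-only Python sketch of a decorator that records an event for each call, with a trace ID, a status, and a duration. The `observed` decorator and the in-memory `TELEMETRY` list are hypothetical stand-ins; in a real setup an open-source agent or SDK such as OpenTelemetry would collect and export this data.

```python
import functools
import time
import uuid

# In-memory stand-in for an exporter; a real system would ship these events out.
TELEMETRY: list[dict] = []


def observed(operation: str):
    """Decorator that records one telemetry event per call of the wrapped function."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            trace_id = uuid.uuid4().hex
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                # Runs on success and on failure, so every call is recorded.
                TELEMETRY.append(
                    {
                        "operation": operation,
                        "trace_id": trace_id,
                        "status": status,
                        "duration_ms": (time.perf_counter() - start) * 1000,
                    }
                )

        return wrapper

    return decorator


@observed("lookup_price")
def lookup_price(sku: str) -> float:
    prices = {"apple": 0.5}
    return prices[sku]  # raises KeyError for an unknown SKU


lookup_price("apple")
print(TELEMETRY[-1]["operation"], TELEMETRY[-1]["status"])
```

The point of the design is that the business function stays untouched: the telemetry is added around it, and failed calls are recorded as faithfully as successful ones.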

What are the best observability platforms?

- Middleware

- Splunk

- Datadog

- Dynatrace

- Observe, Inc.