In recent years, DevOps has become a prominent approach to how teams deliver software. One of its goals is to optimize and automate the software release process.

Given the nature of the software development lifecycle, this process is iterative and places high emphasis on velocity to expedite the journey between software changes and the final product in the hands of an end-user or whichever client will be consuming the software. These software changes can be new features, refactors to an existing code base, or bug fixes.

This is accomplished through a CI/CD (Continuous Integration/Continuous Delivery) pipeline. A CI/CD pipeline is an automated translation of the traditional workflow that consists of several tasks between software development and IT operations teams.

Previously, these teams operated independently, which hampered the quality and speed with which software reached a production runtime environment.

The modern approach of using a CI/CD pipeline seeks to solve these issues by building a workflow designed through the collaboration of integrated or cross-functional teams (e.g., application developer, QA tester, DevOps engineer).

In this post, you will learn what a CI/CD pipeline is, the phases it consists of, the benefits it provides, some of the top CI/CD tools, and other key concepts on this topic.

What is the CI/CD pipeline?

In its simplest form, a CI/CD pipeline is a workflow designed to automate the process of building, testing, integrating, and shipping quality software. It is a sequential process that optimizes the software release cycles from the code changes committed to a source code repository until they reach a runtime environment.

Considering that modern software development entails the usage of multiple environments (e.g., dev, stage, prod), building a workflow that aims to achieve the same goals as a CI/CD pipeline, albeit manually, is tedious, time-consuming, and doesn’t scale well.

To understand CI/CD pipelines better, we have to consider the main concepts they consist of, such as continuous integration and continuous delivery.

What is continuous integration?

Continuous integration is the first main step in a CI/CD pipeline and focuses on building the application and running a series of automated tests. Each has a distinct goal, but the main objective is to ensure that the new source code being committed meets the relevant criteria in terms of quality.

Continuous integration aims to prevent the introduction of issues into the main branch of your source code repository, which typically houses the stable version of the application.

What is continuous delivery?

Continuous delivery picks up where continuous integration ends and can also be considered an extension of continuous integration. The main objective in continuous delivery is to release the software built and tested in the CI stage.

It also emphasizes the strategy around how the software is released. However, it does include human intervention. Teams can release the software through the click of a button daily, weekly or some other timeline that is agreed on.

Once triggered, the application will be automatically deployed to the respective environment.

Continuous delivery vs. Continuous deployment

Continuous deployment is a practice in which every committed change that passes all stages or phases of the pipeline is released to the runtime environment. Unlike continuous delivery, the process is completely automated and has no human intervention. In this approach, shipping software moves even faster and eliminates the idea of a dedicated day for releasing software.
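
The difference between the two practices can be sketched in a few lines of JavaScript. This is only an illustration: the `releaseDecision` function, its `mode` values, and the `approved` flag are hypothetical names, not part of any CI/CD tool.

```javascript
// Hypothetical helper: decides what happens to a build at the end of the pipeline.
// mode 'delivery' requires a human approval; mode 'deployment' is fully automated.
function releaseDecision({ testsPassed, mode, approved = false }) {
  if (!testsPassed) return 'blocked'; // a change that fails the pipeline never ships
  if (mode === 'deployment') return 'deploy'; // continuous deployment: no human step
  if (mode === 'delivery') return approved ? 'deploy' : 'awaiting-approval';
  throw new Error(`Unknown mode: ${mode}`);
}

console.log(releaseDecision({ testsPassed: true, mode: 'delivery' }));
console.log(releaseDecision({ testsPassed: true, mode: 'delivery', approved: true }));
console.log(releaseDecision({ testsPassed: true, mode: 'deployment' }));
```

With continuous delivery, the same passing build waits until someone approves it. With continuous deployment, it is released as soon as the checks pass.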


Phases of CI/CD pipeline

In practice, CI/CD pipelines may vary depending on a number of factors, such as the nature of the application, source code repository setup, software architecture, and other important aspects of the software project.

That said, a few phases are common to most CI/CD pipelines. They are detailed below:

1. Source stage

This is the first phase and involves pulling the source code that will be used in the pipeline. Typically, the source code is taken from a branch in a version control system like Git. This phase can be triggered through various events, such as pushing code directly to a specific repository branch, creating a pull request, or when a branch is merged.
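
As a rough sketch of how such triggers might be evaluated, the snippet below matches an incoming event against a list of rules. The rule format, the `triggers` list, and the `shouldTrigger` function are assumptions made for illustration. Real CI tools define their own trigger syntax, and a merge typically shows up as a push to the target branch.

```javascript
// Hypothetical trigger rules: which events on which branches start the pipeline.
const triggers = [
  { event: 'push', branches: ['main'] },
  { event: 'pull_request', branches: ['main', 'develop'] },
];

// Returns true when the incoming event matches at least one trigger rule.
function shouldTrigger(rules, { event, branch }) {
  return rules.some((rule) => rule.event === event && rule.branches.includes(branch));
}

console.log(shouldTrigger(triggers, { event: 'push', branch: 'main' }));
console.log(shouldTrigger(triggers, { event: 'push', branch: 'feature/login' }));
```

Here the first call matches the push rule for `main`, while the second does not match any rule, so the pipeline would not start.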

2. Compile or Build stage

In this phase, the code from the source stage is used to compile or build the application. This reflects the same exercises developers typically undergo to build or compile an application locally.

However, this also means that the application dependencies need to be installed just as they would on a developer’s workstation to ensure that the build process runs smoothly.

In addition to this, other important runtime dependencies need to be specified. For example, if you are building a Docker application, you must ensure that the CI tool’s build runtime has Docker installed.

In most cases, CI tools have build stage templates that can be used to ensure that the build phase has the relevant dependencies and software tools for your application’s build process.

Also, this is usually the phase where the code is packaged for deployment or delivery. However, in some pipelines, there’s an additional phase where the code is packaged into an artifact for delivery.
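
One common convention, assumed here rather than taken from this article, is to label each packaged artifact with the commit it was built from so that a deployment can be traced back to its source. The `imageTag` helper and the `example-user/express-test` repository name below are hypothetical.

```javascript
// Builds an image reference such as "example-user/express-test:a1b2c3d"
// from a repository name and a Git commit SHA (assumed convention).
function imageTag(repository, commitSha, length = 7) {
  if (!/^[0-9a-f]{7,40}$/i.test(commitSha)) {
    throw new Error(`Not a valid commit SHA: ${commitSha}`);
  }
  return `${repository}:${commitSha.slice(0, length).toLowerCase()}`;
}

console.log(imageTag('example-user/express-test', 'a1b2c3d4e5f60718293a4b5c6d7e8f9012345678'));
// prints "example-user/express-test:a1b2c3d"
```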

3. Test stage

As the name implies, this is the phase in which you run various automated tests. Examples of these tests include static code analysis, unit testing, functional testing, integration testing, acceptance testing, and API testing. As mentioned earlier, the goal is to ensure that the committed code is of a certain quality and doesn’t introduce software faults through bad code.

4. Deploy or Deliver stage

This is the last phase of the CI/CD pipeline. After your source code has successfully been built and passed all the relevant tests, it will either be automatically deployed to a runtime environment or released upon clicking a button.
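
The sequential nature of these phases can be sketched as a tiny runner that executes stages in order and stops at the first failure. This is a simplified model under stated assumptions, not how any particular CI tool is implemented. The `runPipeline` function and the stage objects are hypothetical.

```javascript
// Runs stages in order and stops at the first failure.
// A stage fails by returning false or by throwing an error.
function runPipeline(stages) {
  const results = [];
  for (const { name, run } of stages) {
    let ok;
    try {
      ok = run() !== false;
    } catch (err) {
      ok = false;
    }
    results.push({ name, ok });
    if (!ok) break; // later stages never run after a failure
  }
  return { success: results.every((r) => r.ok), results };
}

const outcome = runPipeline([
  { name: 'source', run: () => true },
  { name: 'build', run: () => true },
  { name: 'test', run: () => false }, // simulate a failing test suite
  { name: 'deploy', run: () => true },
]);

console.log(outcome.results.map((r) => `${r.name}:${r.ok ? 'ok' : 'failed'}`).join(' -> '));
// prints "source:ok -> build:ok -> test:failed"
```

Because the test stage fails, the deploy stage is never reached, which is the behavior that keeps faulty code out of the runtime environment.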

How to ship code faster

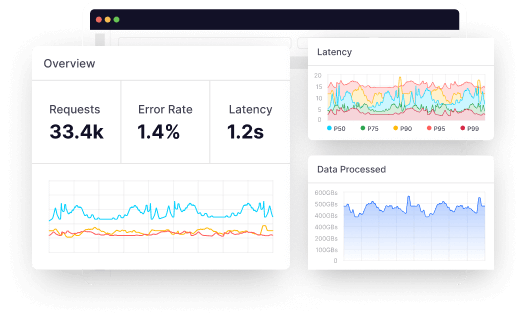

As modern software systems have increased in complexity and distribution, visibility of the infrastructure layer has become more pertinent to the success of software that is shipped to your runtime environments.

In the context of CI/CD pipelines, the software teams need to easily trace, detect and isolate particular areas of recently shipped code to remediate any issues quickly. This fits within the broader scope of monitoring infrastructure.

Infrastructure monitoring has become more critical for teams to keep their fingers on various components across the technical architecture. These components include containers, virtual machines, servers, and more.

By monitoring components of this nature, teams can respond faster, more accurately, and in some cases, proactively to infrastructure issues and incidents when they arise. Additionally, using a platform like Middleware can enhance your infrastructure monitoring strategy to better troubleshoot, optimize and forecast issues.

When it comes to shipping code, Middleware’s infrastructure monitoring zeroes in on poor-performing areas of your software for a more efficient approach to fixing bugs and issues in the development lifecycle. This complements the main goals of velocity and quality in CI/CD pipelines.

Benefits of the CI/CD pipeline

CI/CD pipelines have become the de facto approach to building and shipping software. This is the case across the board, from small startups to large enterprises. Let’s explore some of the main benefits that have contributed to the growth of this practice.

Faster software releases

Velocity is perhaps the primary issue that CI/CD pipelines aim to solve when it comes to delivering software. The flaws of the traditional approach are what contributed to the rise of DevOps as a whole.

The siloes between application developers and IT operations resulted in very poor turnaround times in the overall software shipping workflow.

This was due to the frequent communication and collaboration breakdowns that the siloes caused. In contrast, CI/CD pipelines are built on the integration and collaboration of these two teams, developers and operators, which automates the workflow and accelerates the entire process.

Improved software quality

To mitigate the risks of software faults and failures, you need quality gates that protect the main branch of your source code repository. That’s where automated tests come in. Alongside getting software into the hands of the customer faster, this is one of the most important characteristics of CI/CD pipelines. As a result, software teams and companies benefit from setting a quality standard and maintaining it efficiently.

More efficient workflows

CI/CD pipelines enable teams to ship better code faster and create a more efficient workflow. The automation and optimization that CI/CD pipelines provide means that software teams no longer have to engage in the same repetitive tasks or go back and forth with external teams to ship code.

Once the pipeline is built, any further changes are minor compared to the initial creation process. As a result, the whole workflow is more efficient, and teams can spend more time on application optimization.

Cost-effective workflows

Companies and software teams adopting CI/CD pipelines can also save on the costs of traditional approaches. This is because the manual way of doing things relies heavily on human intervention and has proven to be less effective.

When teams automate through pipelines, companies can save on the overhead of larger teams that don’t contribute towards the overall efficiency of the software shipping workflow.

Faster feedback loop for developers

Given the quick nature of CI/CD pipelines, software teams have a faster track to getting feedback on their recently committed changes. This allows teams to respond to issues that arise much faster.

Faster product delivery for customers

The quick feedback loop that CI/CD pipelines introduce benefits not only the development teams but also the end users. Because the relevant teams are made aware of issues in their system sooner, MTTT and MTTR are shortened.
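
As a worked example of one of these metrics, the sketch below computes MTTR, read here as mean time to repair, from a list of incidents. The incident records, their field names, and the `meanTimeToRepairMinutes` function are invented for illustration.

```javascript
// Mean time to repair in minutes: the average of (resolvedAt - detectedAt).
function meanTimeToRepairMinutes(incidents) {
  if (incidents.length === 0) return 0;
  const totalMs = incidents.reduce(
    (sum, { detectedAt, resolvedAt }) => sum + (new Date(resolvedAt) - new Date(detectedAt)),
    0
  );
  return totalMs / incidents.length / 60000; // 60,000 ms per minute
}

// Illustrative data only: one incident took 30 minutes to fix, the other 90.
const incidents = [
  { detectedAt: '2024-01-10T10:00:00Z', resolvedAt: '2024-01-10T10:30:00Z' },
  { detectedAt: '2024-01-12T14:00:00Z', resolvedAt: '2024-01-12T15:30:00Z' },
];

console.log(meanTimeToRepairMinutes(incidents)); // prints 60
```

The earlier an issue is detected and the smaller the change that caused it, the smaller each of these intervals tends to be.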

Logging systems in CI/CD tools

CI/CD tools generate logs during the different phases of the pipeline, which serve as a leading indicator of issues. DevOps and development teams can use these logs to debug issues faster.

Note: Instead of manually filtering logs by date, time, and machine, you can integrate Middleware’s log monitoring with your CI/CD tool to visualize logs with detailed timestamps in a unified dashboard.
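
To show what working with pipeline logs might look like in code, here is a minimal sketch that counts error lines per stage. The log line format, the sample lines, and the function names are all assumptions for illustration. Each CI/CD tool has its own log format.

```javascript
// Assumed line format: "<ISO timestamp> [<stage>] <LEVEL> <message>"
const LINE_PATTERN = /^(\S+) \[([^\]]+)\] (INFO|WARN|ERROR) (.*)$/;

// Parses one log line into its parts, or returns null if it does not match.
function parseLine(line) {
  const match = LINE_PATTERN.exec(line);
  if (!match) return null;
  const [, timestamp, stage, level, message] = match;
  return { timestamp, stage, level, message };
}

// Counts ERROR lines per pipeline stage, skipping lines that cannot be parsed.
function errorsByStage(lines) {
  const counts = {};
  for (const line of lines) {
    const entry = parseLine(line);
    if (entry && entry.level === 'ERROR') {
      counts[entry.stage] = (counts[entry.stage] || 0) + 1;
    }
  }
  return counts;
}

// Invented sample lines in the assumed format.
const sampleLog = [
  '2024-01-10T10:00:01Z [build] INFO npm install completed',
  '2024-01-10T10:00:09Z [test] ERROR 1 failing test in src/test/index.js',
  '2024-01-10T10:00:10Z [test] ERROR process exited with code 1',
  'not a structured line',
];

console.log(errorsByStage(sampleLog)); // prints { test: 2 }
```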

Example of a CI/CD pipeline in action

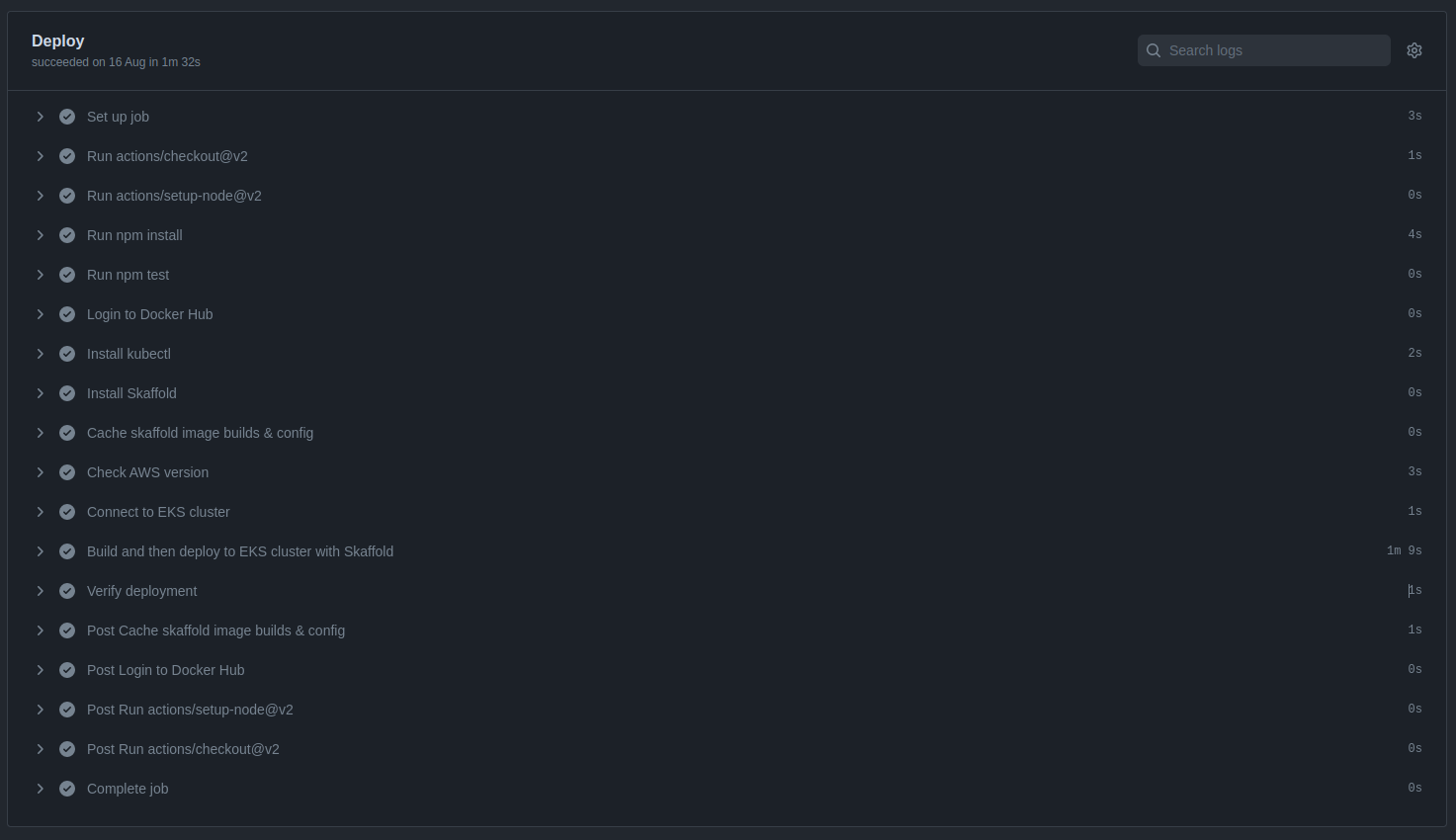

This section will cover an example of a CI/CD pipeline using GitHub Actions. The example application is a Node.js application that will be deployed to an Amazon EKS cluster. During the continuous integration phase, the application will be tested, built, and then pushed to a Docker Hub repository.

In the final phase, a connection will be established with the Amazon EKS cluster before deploying the Node.js application using a tool called Skaffold.

This is not a step-by-step tutorial. However, the project files have been detailed below for you to recreate on your own should you wish to. All the source code below can be found in this repository.

Project structure

├── .github/workflows/main.yml
├── Dockerfile
├── manifests.yaml
├── node_modules
├── package-lock.json
├── package.json
├── skaffold.yaml
└── src
    ├── app.js
    ├── index.js
    └── test/index.js

Prerequisites

- AWS Account

- GitHub Account

- Node.js

- Docker

- Terraform (to create K8s cluster with IaC)

Application source code

package.json

{
  "name": "nodejs-express-test",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "start": "node src/index.js",
    "dev": "nodemon src/index.js",
    "test": "mocha 'src/test/**/*.js'"
  },
  "repository": {
    "type": "git",
    "url": "git+https://github.com/YourGitHubUserName/gitrepo.git"
  },
  "author": "Your Name",
  "license": "ISC",
  "dependencies": {
    "body-parser": "^1.19.0",
    "cors": "^2.8.5",
    "express": "^4.17.1"
  },
  "devDependencies": {
    "chai": "^4.3.4",
    "mocha": "^9.0.2",
    "nodemon": "^2.0.12",
    "supertest": "^6.1.3"
  }
}

app.js

// Express App Setup
const express = require('express');
const http = require('http');
const bodyParser = require('body-parser');
const cors = require('cors');

// Initialization
const app = express();
app.use(cors());
app.use(bodyParser.json());

// Express route handlers
app.get('/test', (req, res) => {
  res.status(200).send({ text: 'The application is working!' });
});

module.exports = app;

index.js

const http = require('http');
const app = require('./app');

// Server
const port = process.env.PORT || 8080;
const server = http.createServer(app);

server.listen(port, () => console.log(`Server running on port ${port}`));

test/index.js

const { expect } = require('chai');
const { agent } = require('supertest');
const app = require('../app');

const request = agent;

describe('Some controller', () => {
  it('Get request to /test returns some text', async () => {
    const res = await request(app).get('/test');
    const textResponse = res.body;

    expect(res.status).to.equal(200);
    expect(textResponse.text).to.be.a('string');
    expect(textResponse.text).to.equal('The application is working!');
  });
});

manifests.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: express-test
spec:
  replicas: 3
  selector:
    matchLabels:
      app: express-test
  template:
    metadata:
      labels:
        app: express-test
    spec:
      containers:
        - name: express-test
          image: /express-test
          resources:
            limits:
              memory: 128Mi
              cpu: 500m
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: express-test-svc
spec:
  selector:
    app: express-test
  type: LoadBalancer
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080

Dockerfile

FROM node:14-alpine
WORKDIR /usr/src/app
COPY ["package.json", "package-lock.json*", "npm-shrinkwrap.json*", "./"]
RUN npm install
COPY . .
EXPOSE 8080
RUN chown -R node /usr/src/app
USER node
CMD ["npm", "start"]

Create Amazon EKS cluster with Terraform

You can create an Amazon EKS cluster in your AWS account using the Terraform source code available in the Amazon EKS Blueprints for Terraform repository.

GitHub Actions configuration file

(.github/workflows/main.yml)

name : 'Build & Deploy to EKS'

on :

push:

branches:

- main

env :

AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}

AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

EKS_CLUSTER: ${{ secrets.EKS_CLUSTER }}

EKS_REGION: ${{ secrets.EKS_REGION }}

DOCKER_ID: ${{ secrets.DOCKER_ID }}

DOCKER_PW: ${{ secrets.DOCKER_PW }}

# SKAFFOLD_DEFAULT_REPO :

jobs :

deploy :

name : Deploy

runs-on : ubuntu-latest

env :

ACTIONS_ALLOW_UNSECURE_COMMANDS: 'true'

steps :

# Install Node.js dependencies

- uses: actions/checkout@v3

- uses: actions/setup-node@v3

with:

node-version: '14'

- run: npm install

- run: npm test

# Login to Docker registry

- name: Login to Docker Hub

uses: docker/login-action@v1

with:

username: ${{ secrets.DOCKER_ID }}

password: ${{ secrets.DOCKER_PW }}

# Install kubectl

- name: Install kubectl

run: |

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/

release/stable.txt)/bin/linux/amd64/kubectl"

curl -LO "https://dl.k8s.io/$(curl -L -s https://dl.k8s.io/release/

stable.txt)/bin/linux/amd64/kubectl.sha256"

echo "$(kubectl.sha256) kubectl"="" |="" sha256sum="" --check=""

sudo="" install="" -o="" root="" -g="" -m="" 0755="" kubectl="" usr="" local=""

bin="" version="" --client="" # Install Skaffold

- name: Install Skaffold

run: |

curl -Lo skaffold https://storage.googleapis.com/skaffold/releases/

latest/skaffold-linux-amd64 && \

sudo install skaffold /usr/local/bin/

skaffold version

# Cache skaffold image builds & config

- name: Cache skaffold image builds & config

uses: actions/cache@v2

with:

path: ~/.skaffold/

key: fixed-${{ github.sha }}

# Check AWS version and configure profile

- name: Check AWS version

run: |

aws --version

aws configure set aws_access_key_id $AWS_ACCESS_KEY_ID

aws configure set aws_secret_access_key $AWS_SECRET_ACCESS_KEY

aws configure set region $EKS_REGION

aws sts get-caller-identity

# Connect to EKS cluster

- name: Connect to EKS cluster

run: aws eks --region $EKS_REGION update-kubeconfig --name $EKS_CLUSTER

# Build and deploy to EKS cluster

- name: Build and then deploy to EKS cluster with Skaffold

run: skaffold run

# Verify deployment

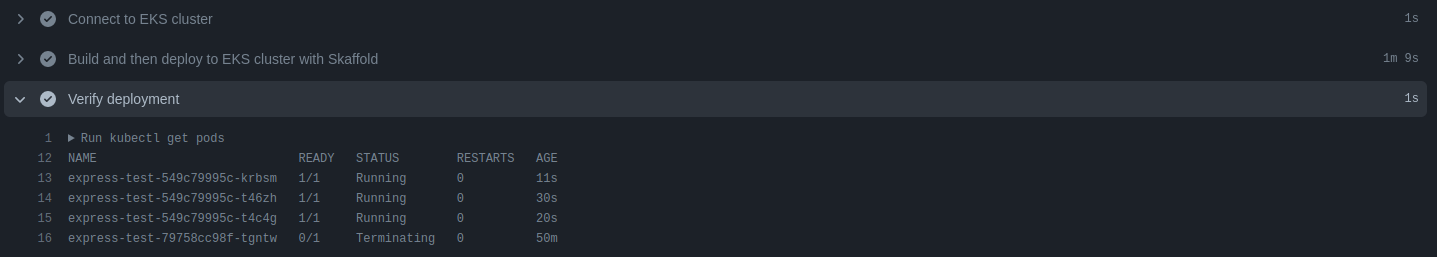

- name: Verify deployment

run: kubectl get pods

kubectl.sha256)

Successful pipeline example

After creating all the files in the project, be sure to commit them to your source code repository on GitHub. GitHub Actions will detect the push to the main branch and trigger a pipeline that follows the configuration in the main.yml file.

As shown in the screenshot, the pipeline passed successfully in each phase, and the application was deployed to Amazon EKS.

Successful pipeline build and deployment

Deployment verification in Amazon EKS cluster

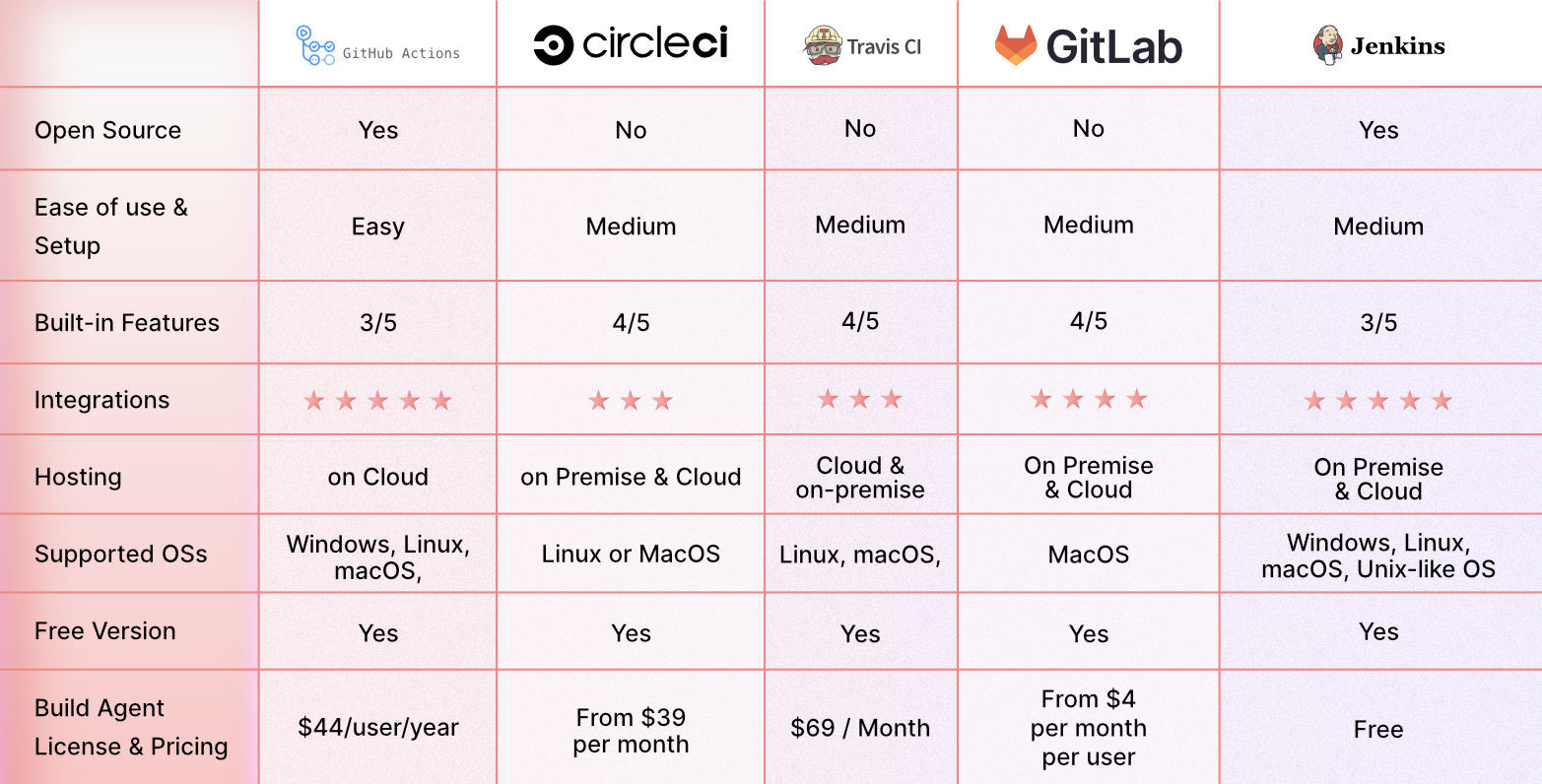

Top CI/CD tools

CI/CD tools help your team automate development, testing, and deployment. Some tools handle the integration (CI) side, some manage development and deployment (CD), and others specialize in continuous testing or related tasks.

Below are some of the top CI/CD tools:

1. GitHub Actions

GitHub Actions is a great CI/CD platform that allows you to automate your build, test, and deployment pipeline. You can create custom workflows that build and test every pull request to your repository or deploy merged pull requests to production.

2. CircleCI

CircleCI is a cloud-based CI/CD platform that helps development teams release their code rapidly by automating the build, test, and deployment steps.

3. Travis CI

Travis CI is a hosted continuous integration tool that allows development teams to test and deploy their projects with ease.

4. GitLab

GitLab is an all-in-one DevOps platform that helps developers automate their code’s builds, integration, and verification.

5. Jenkins

Jenkins is an open-source automation server that helps development teams automate the build, test, and deployment parts of the software development lifecycle.

Start scaling your CI/CD pipeline with Middleware

A CI/CD pipeline helps you automate the build, test, and deployment parts of the software development lifecycle. Integrating infrastructure monitoring tools like Middleware can help you scale up the process.

Similarly, leveraging log management tools can help your Dev and Ops teams increase productivity while reducing the time required to debug applications.

Now that you know how Middleware can help you accelerate your CI/CD pipeline, sign up on our platform to see it in action!