Netflix relies on AWS for almost all its storage and computing needs, including databases, analytics, recommendation engines, and video transcoding, using over 100,000 server instances. Now imagine malicious activity in one of those databases. If it is not identified and addressed quickly, the outcome can be disastrous. Ensuring security and compliance across such a vast and complex infrastructure is difficult, and AWS CloudTrail plays an important role in making it possible.

CloudTrail events log details about user activities, specifying which user executed which actions, on what resources, and at what time. This comprehensive logging is vital for security auditing, compliance, and operational troubleshooting in AWS environments.

In this blog, we will cover the whole kit and caboodle of monitoring best practices for CloudTrail logs. We will look at:

- Understanding and setting up CloudTrail

- Monitoring and analyzing CloudTrail logs

- Best practices for CloudTrail log management

- Advanced CloudTrail log monitoring

Understanding CloudTrail logs

CloudTrail lets AWS account owners monitor and record the API calls made in their AWS account, including actions taken through the AWS Management Console, the AWS CLI, and the AWS SDKs. These recorded events capture user activity and API calls across AWS accounts and can include the following information:

| Event information |
| --- |
| The identity of the API caller |
| The API caller's source IP address |
| The request parameters |
| The response elements returned by the AWS service |
| The time of the API call |

CloudTrail logs three primary types of events to facilitate monitoring:

1. Management events: These capture control plane actions on resources, like creating or deleting Amazon S3 buckets.

2. Data events: These record data plane actions within resources, such as reading or writing Amazon S3 objects.

3. Insights events: These assist AWS users in detecting and addressing unusual activity linked to API calls and error rates by analyzing CloudTrail management events continuously.

All event types use the same CloudTrail JSON log format, and each log file wraps its events in a Records array. Here's an example of a single CloudTrail record for a management event:

```json
{
  "eventVersion": "1.09",
  "userIdentity": {
    "type": "IAMUser",
    "principalId": "EXAMPLE6E4XEGITWATV6R",
    "arn": "arn:aws:iam::123456789012:user/Mary_Major",
    "accountId": "123456789012",
    "accessKeyId": "AKIAIOSFODNN7EXAMPLE",
    "userName": "Mary_Major",
    "sessionContext": {
      "attributes": {
        "creationDate": "2023-07-19T21:11:57Z",
        "mfaAuthenticated": "false"
      }
    }
  },
  "eventTime": "2023-07-19T21:33:41Z",
  "eventSource": "cloudtrail.amazonaws.com",
  "eventName": "StartLogging",
  "awsRegion": "us-east-1",
  "sourceIPAddress": "192.0.2.0",
  "userAgent": "aws-cli/2.13.5 Python/3.11.4 Linux/4.14.255-314-253.539.amzn2.x86_64 exec-env/CloudShell exe/x86_64.amzn.2 prompt/off command/cloudtrail.start-logging",
  "requestParameters": {
    "name": "myTrail"
  },
  "responseElements": null,
  "requestID": "9d478fc1-4f10-490f-a26b-EXAMPLE0e932",
  "eventID": "eae87c48-d421-4626-94f5-EXAMPLEac994",
  "readOnly": false,
  "eventType": "AwsApiCall",
  "managementEvent": true,
  "recipientAccountId": "123456789012",
  "eventCategory": "Management",
  "tlsDetails": {
    "tlsVersion": "TLSv1.2",
    "cipherSuite": "ECDHE-RSA-AES128-GCM-SHA256",
    "clientProvidedHostHeader": "cloudtrail.us-east-1.amazonaws.com"
  },
  "sessionCredentialFromConsole": "true"
}
```

This example shows a single log record of a management event. In it, an IAM user named Mary_Major ran the aws cloudtrail start-logging command, which invoked the CloudTrail StartLogging action to begin logging for a trail named myTrail.
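To make these fields concrete, here is a minimal Python sketch that pulls the who, what, where, and when out of a single record like the one above. The `summarize_event` function and its output keys are hypothetical names chosen for illustration. It uses only the standard library and assumes the record has already been parsed from JSON.

```python
import json

def summarize_event(record: dict) -> dict:
    """Extract the who/what/where/when fields from one CloudTrail record."""
    # `or {}` guards against keys that are present but null in some records.
    identity = record.get("userIdentity") or {}
    attributes = (identity.get("sessionContext") or {}).get("attributes") or {}
    return {
        # IAM users and assumed roles carry an ARN; fall back to the identity type.
        "who": identity.get("arn", identity.get("type", "unknown")),
        "action": f'{record["eventSource"]}:{record["eventName"]}',
        "request": record.get("requestParameters"),
        "source_ip": record.get("sourceIPAddress"),
        "when": record["eventTime"],
        "mfa": attributes.get("mfaAuthenticated"),
    }

# A trimmed copy of the StartLogging record shown above.
sample = json.loads("""
{
  "eventTime": "2023-07-19T21:33:41Z",
  "eventSource": "cloudtrail.amazonaws.com",
  "eventName": "StartLogging",
  "sourceIPAddress": "192.0.2.0",
  "requestParameters": {"name": "myTrail"},
  "userIdentity": {
    "type": "IAMUser",
    "arn": "arn:aws:iam::123456789012:user/Mary_Major",
    "sessionContext": {"attributes": {"mfaAuthenticated": "false"}}
  }
}
""")

print(summarize_event(sample))
```

Checking fields such as `mfa` here is a quick way to spot sensitive actions performed without multi-factor authentication.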

Let’s take a look at a hands-on example of CloudTrail for a better understanding.

Setting up CloudTrail logging

Let’s set up CloudTrail for our AWS account here.

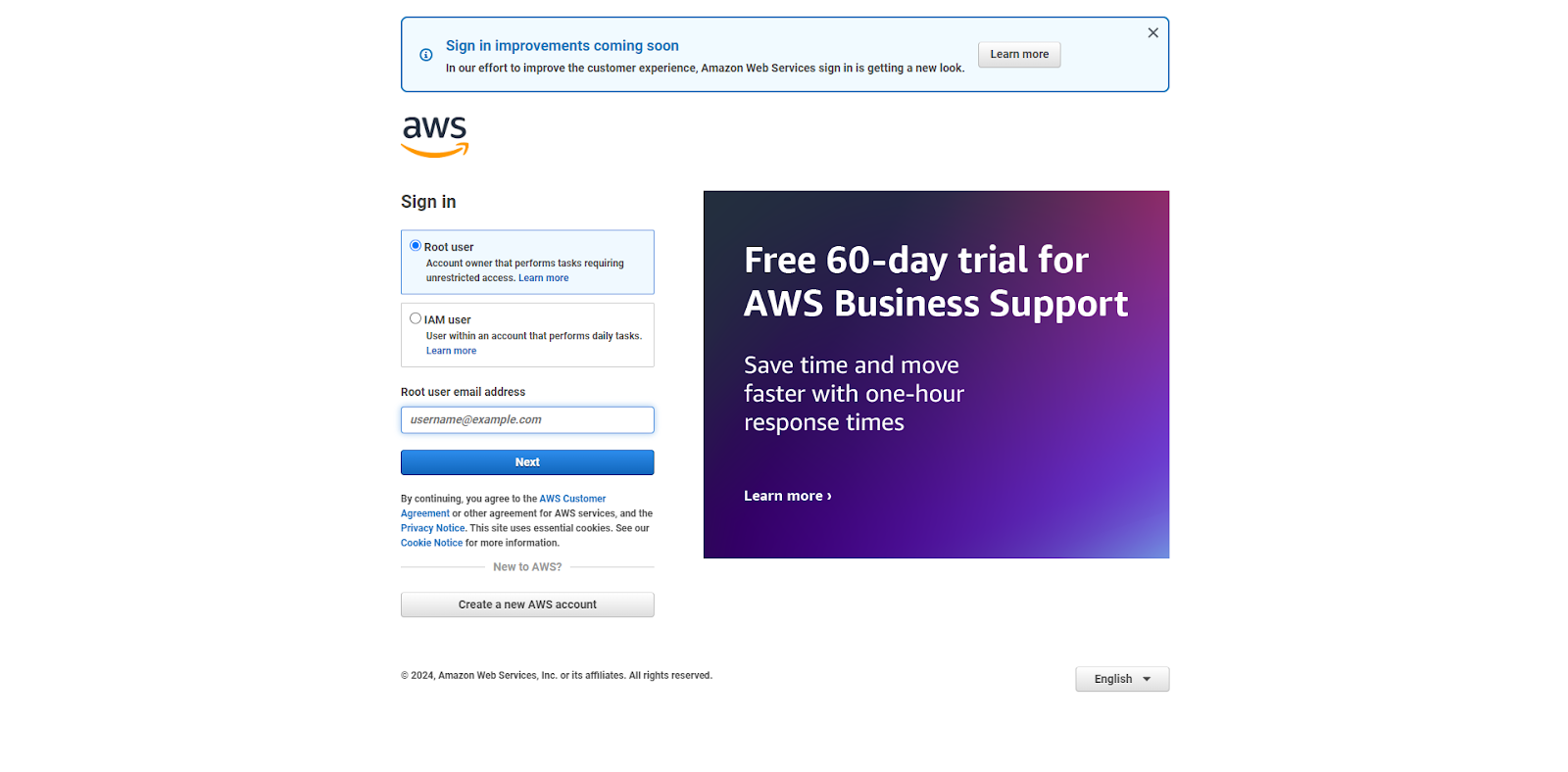

Step 1: Sign in to the AWS Management Console

- Open the AWS Management Console.

- Sign in with your credentials.

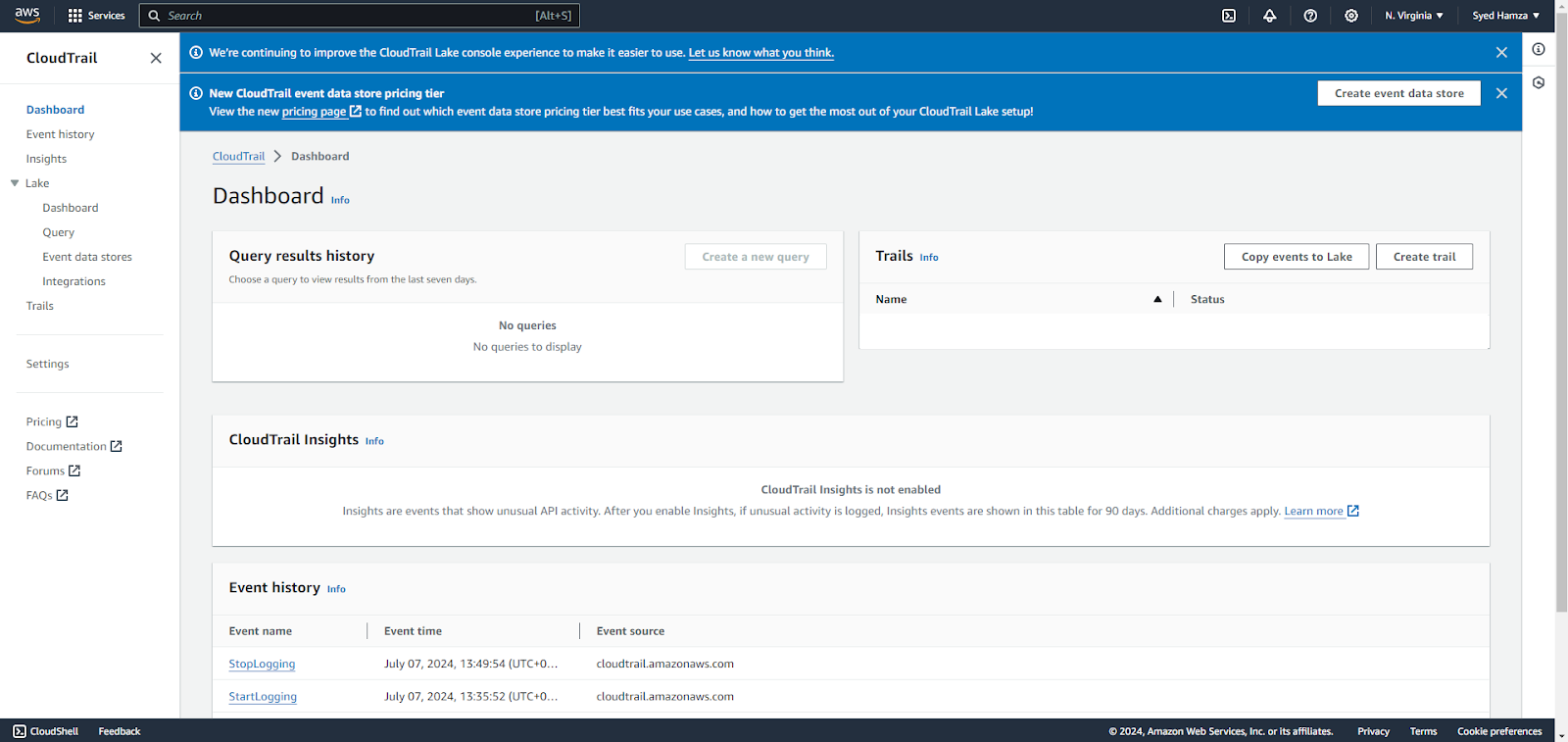

Step 2: Navigate to CloudTrail

- In the AWS Management Console, type “CloudTrail” in the search bar and select it from the results.

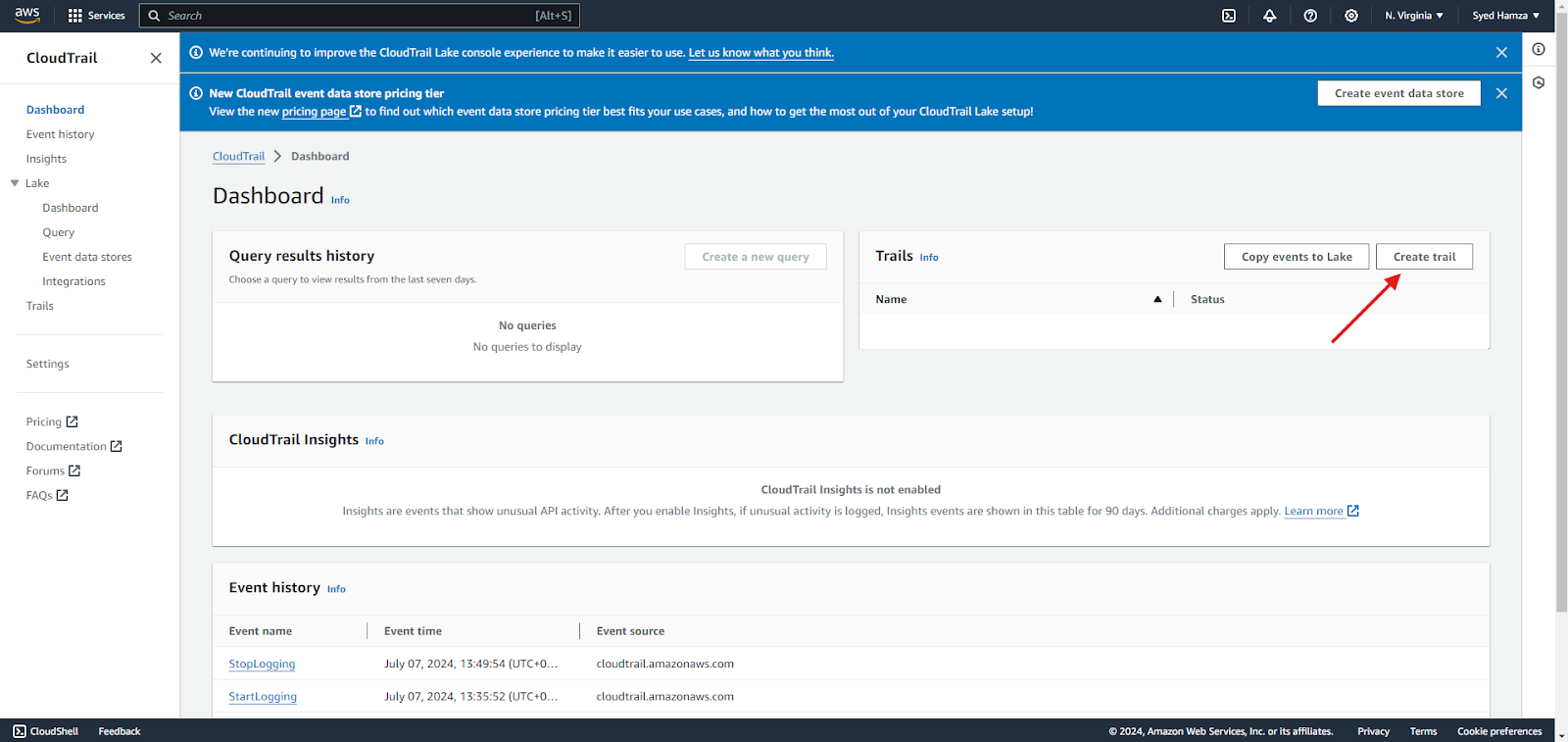

Step 3: Create a trail

- Click on Create Trail.

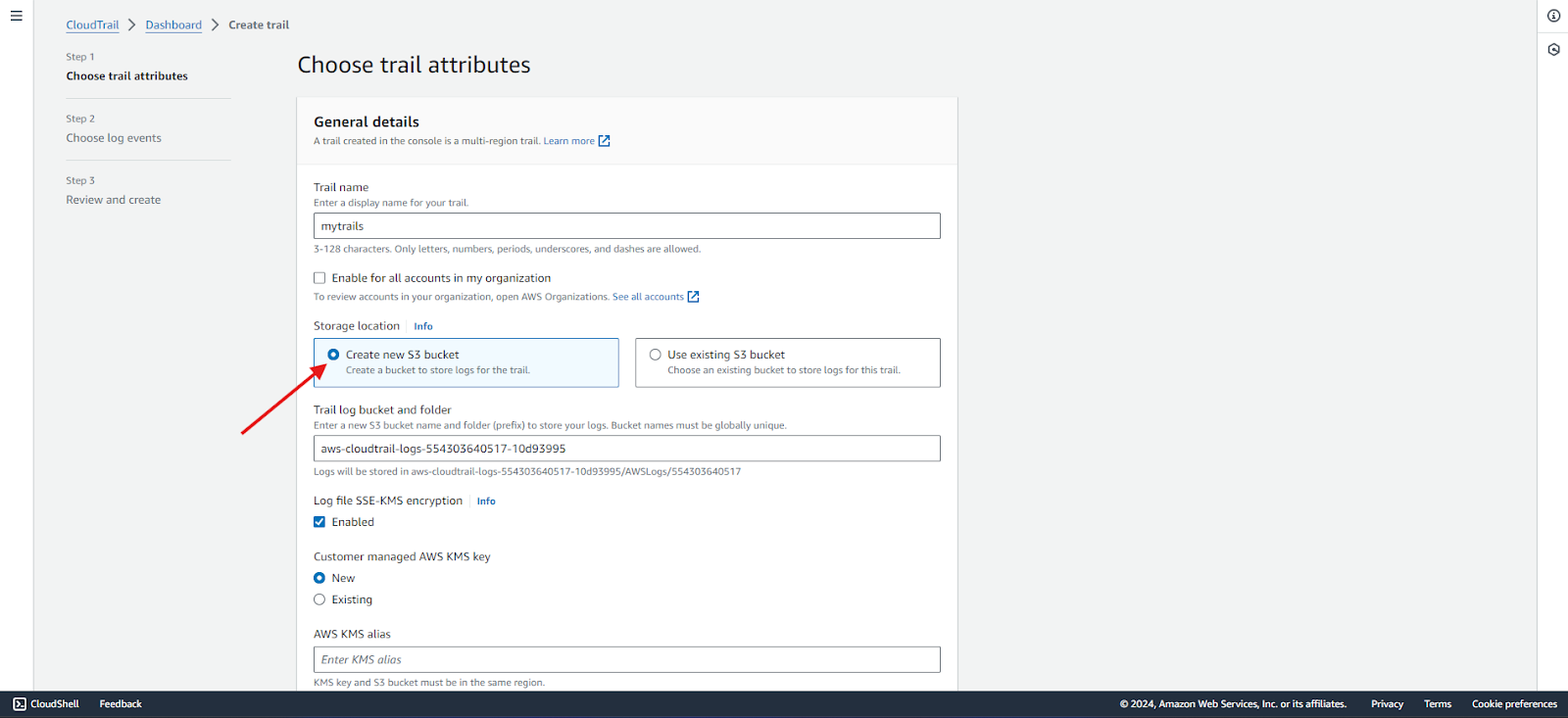

- Provide a name for your trail (e.g., myTrails).

Step 4: Specify storage location

- Choose an existing S3 bucket or create a new one to store your logs. For now, we will create a new one.

- If creating a new bucket, specify a unique name (e.g., my-cloudtrail-logs).

- If you choose an existing S3 bucket, ensure that its bucket policy allows CloudTrail to write logs to it.
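If you bring your own bucket, its policy must let the CloudTrail service principal check the bucket ACL and write objects. The Python sketch below builds a policy modeled on the one AWS documents for CloudTrail buckets. The helper name, account ID, Region, and trail name are placeholders, and you should compare the result against current AWS documentation before applying it (for example, with `aws s3api put-bucket-policy --bucket my-cloudtrail-logs --policy file://policy.json`).

```python
import json

def cloudtrail_bucket_policy(bucket: str, account_id: str, region: str, trail_name: str) -> dict:
    """Build the two statements CloudTrail needs to deliver logs to an S3 bucket."""
    trail_arn = f"arn:aws:cloudtrail:{region}:{account_id}:trail/{trail_name}"
    principal = {"Service": "cloudtrail.amazonaws.com"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets CloudTrail read the bucket ACL before delivering logs.
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringEquals": {"aws:SourceArn": trail_arn}},
            },
            {
                # Lets this trail write objects under AWSLogs/<account-id>/ only.
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/AWSLogs/{account_id}/*",
                "Condition": {
                    "StringEquals": {
                        "s3:x-amz-acl": "bucket-owner-full-control",
                        "aws:SourceArn": trail_arn,
                    }
                },
            },
        ],
    }

print(json.dumps(cloudtrail_bucket_policy("my-cloudtrail-logs", "123456789012", "us-east-1", "myTrails"), indent=2))
```

If the trail uses an S3 key prefix, the `PutObject` resource path must include that prefix in front of `AWSLogs/`.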

Step 5: Security

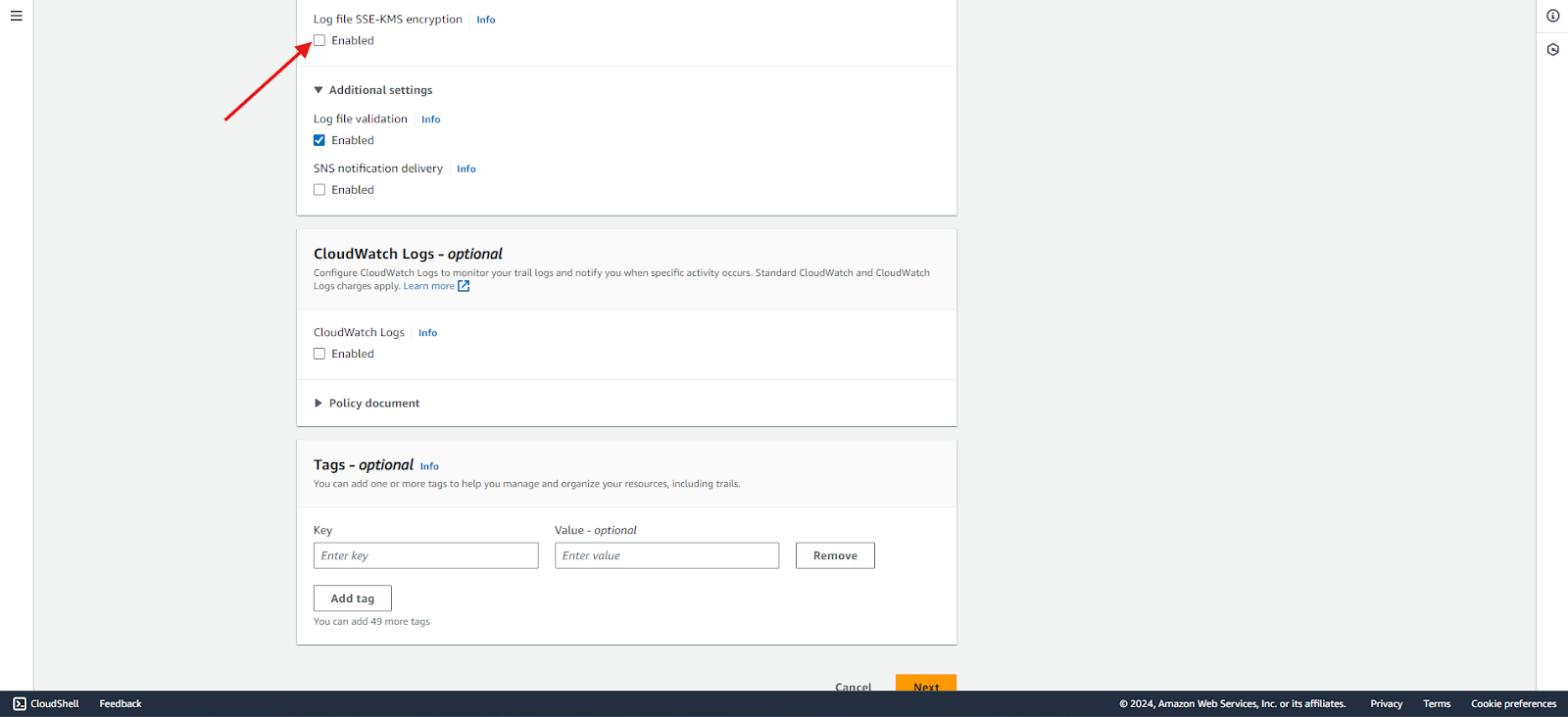

- All new objects uploaded to Amazon S3 buckets are automatically encrypted by default using server-side encryption with Amazon S3 managed keys (SSE-S3). However, you can choose to use server-side encryption with a KMS key (SSE-KMS). In this case, we will disable SSE-KMS.

Step 6: Configure log file validation

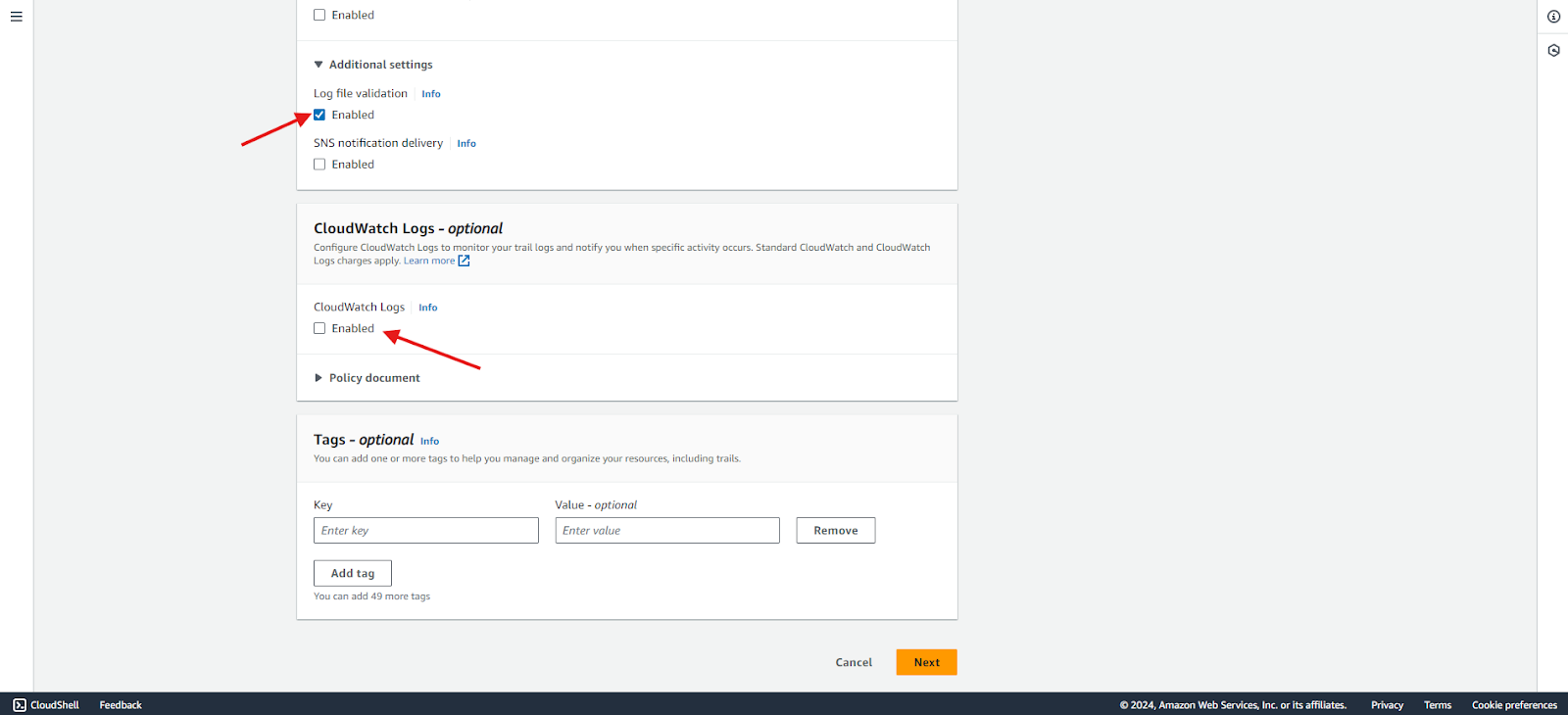

- Optionally, enable log file validation to ensure the integrity of the logs. CloudTrail log file integrity validation lets you determine whether a log file was modified, deleted, or left unchanged after CloudTrail delivered it. In this example, we will enable log file validation.

- You also have the option to send logs to CloudWatch Logs. We will disable this option for now.
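Log file validation works by having CloudTrail deliver hourly digest files that list a SHA-256 hash for each log file delivered. Each digest is signed (SHA-256 with RSA) and chained to the previous digest. The sketch below shows only the hash-comparison step. It assumes a digest already parsed into a dict whose `logFiles` entries carry `s3Object` and a hex `hashValue` computed over the compressed log file as stored in S3. It does not verify the digest signature or chain, so for real audits use `aws cloudtrail validate-logs`.

```python
import hashlib

def log_file_matches_digest(log_file_bytes: bytes, digest: dict, s3_object: str) -> bool:
    """Check a delivered (still gzipped) log file against its entry in a digest file.

    Simplified: this does not verify the digest's RSA signature or its link to
    the previous digest, which full validation also requires.
    """
    for entry in digest.get("logFiles", []):
        if entry["s3Object"] == s3_object:
            return hashlib.sha256(log_file_bytes).hexdigest() == entry["hashValue"]
    raise KeyError(f"{s3_object} is not listed in this digest")

# Hypothetical data: fake log bytes and a digest entry computed from them.
log_bytes = b"\x1f\x8b example gzip bytes"
digest = {"logFiles": [{
    "s3Object": "AWSLogs/example.json.gz",
    "hashValue": hashlib.sha256(log_bytes).hexdigest(),
    "hashAlgorithm": "SHA-256",
}]}

print(log_file_matches_digest(log_bytes, digest, "AWSLogs/example.json.gz"))         # True
print(log_file_matches_digest(log_bytes + b"!", digest, "AWSLogs/example.json.gz"))  # False: tampered
```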

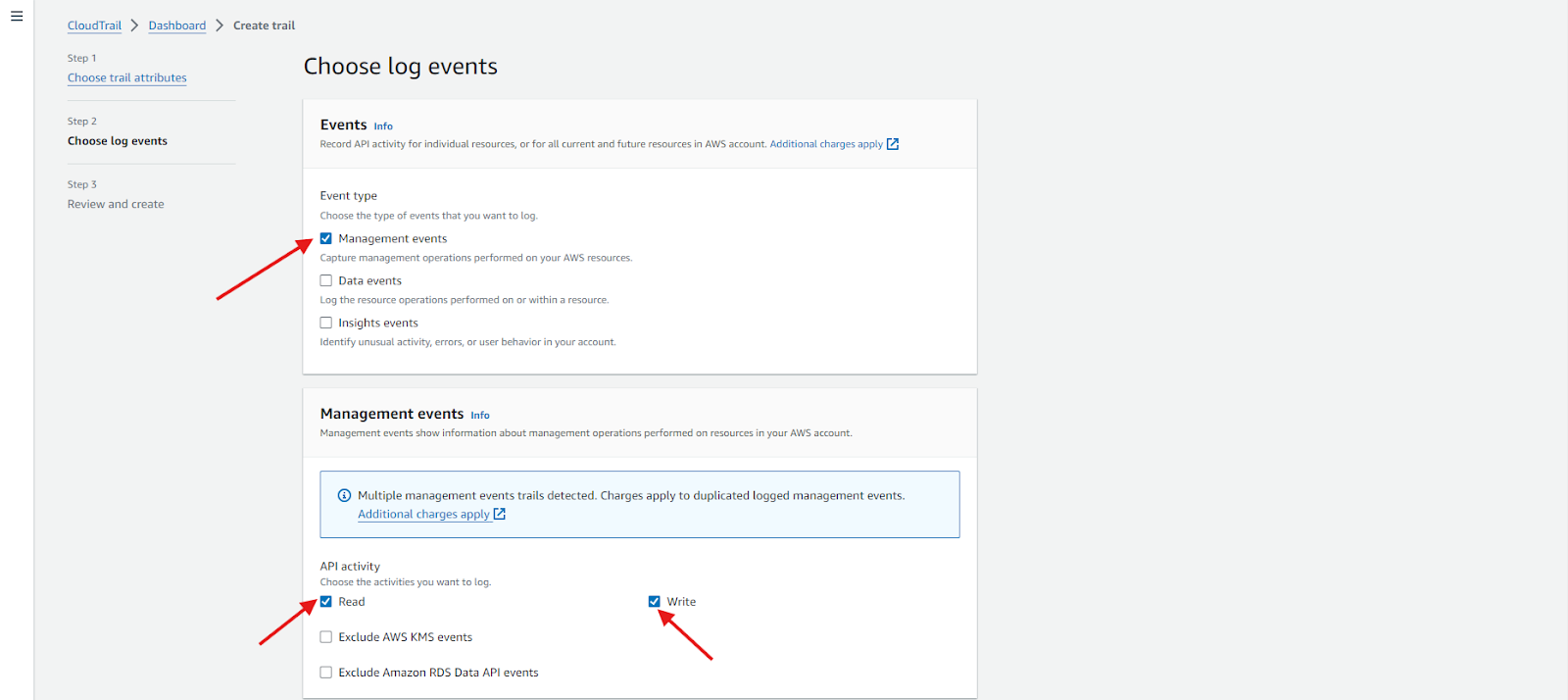

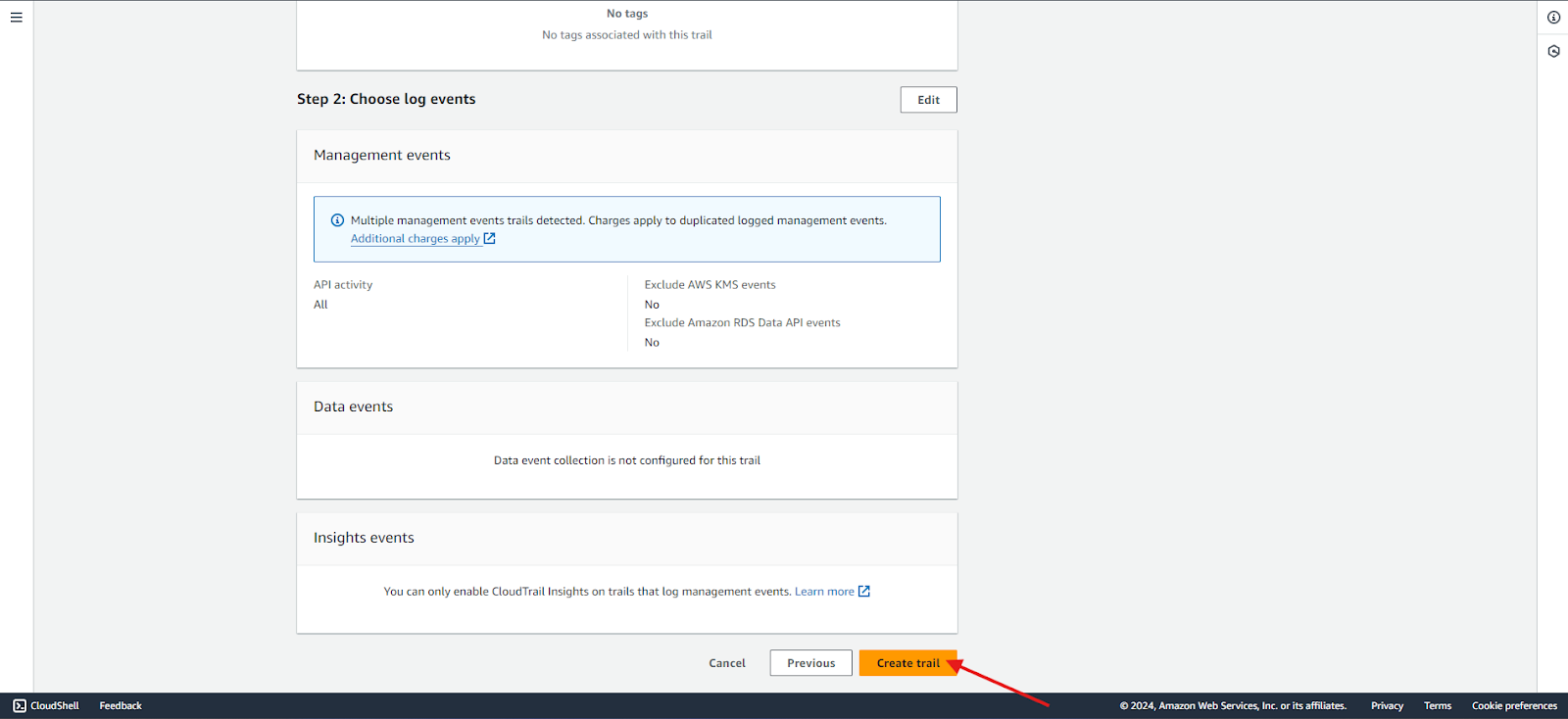

Step 7: Configure log events

- On the Choose log events page, select the event types to log. For this trail, keep the default setting of Management events. In the Management events section, ensure both Read and Write events are selected. To log all management events, leave the Exclude AWS KMS events and Exclude Amazon RDS Data API events checkboxes unchecked.

Step 8: Review and create

- Review the steps and create the trail as shown below:

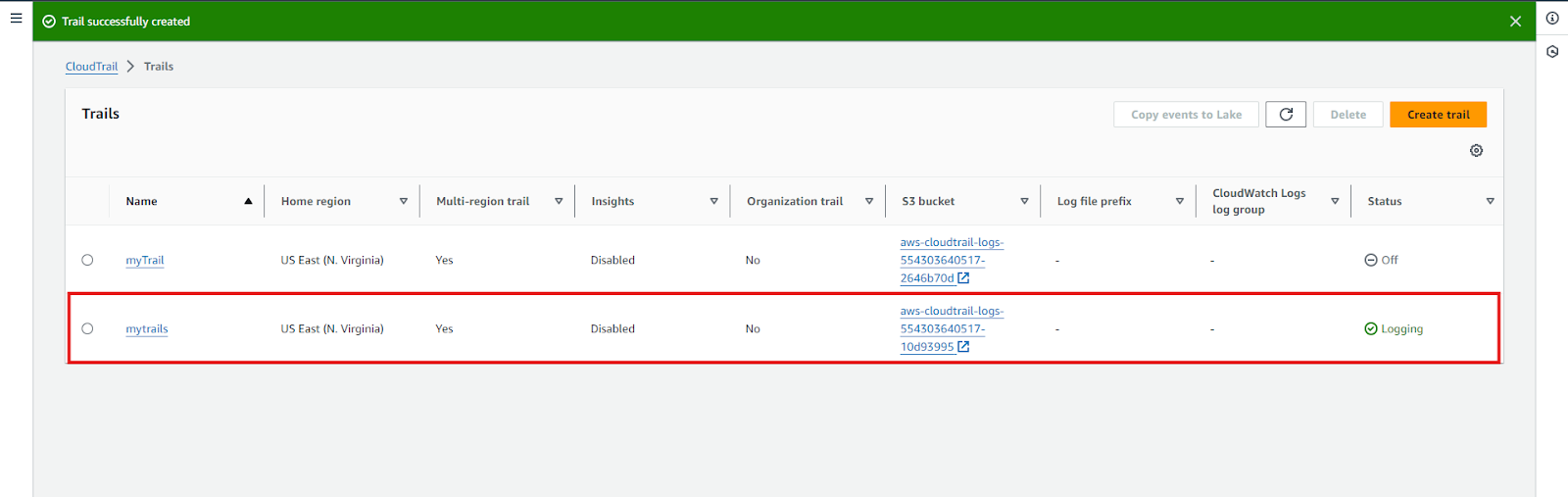

- The new trail is created, and CloudTrail begins delivering log files to the S3 bucket.
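CloudTrail stores each file under a predictable key: AWSLogs/<account ID>/CloudTrail/<Region>/<YYYY>/<MM>/<DD>/. The file name is built from the account ID, CloudTrail, the Region, a UTC timestamp, and a unique string, and ends in .json.gz. An optional trail key prefix goes in front, and organization trails add an organization ID segment, which this sketch ignores. Knowing the layout lets you target a date range instead of listing the whole bucket. The helper names below are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterator, Optional

def daily_prefixes(account_id: str, region: str, start: date, end: date,
                   key_prefix: Optional[str] = None) -> Iterator[str]:
    """Yield the S3 prefix CloudTrail uses for each day from start to end, inclusive."""
    base = f"{key_prefix.strip('/')}/" if key_prefix else ""
    day = start
    while day <= end:
        yield f"{base}AWSLogs/{account_id}/CloudTrail/{region}/{day:%Y/%m/%d}/"
        day += timedelta(days=1)

def parse_log_file_name(key: str) -> dict:
    """Split a name like <account>_CloudTrail_<region>_<YYYYMMDDTHHmmZ>_<unique>.json.gz."""
    name = key.rsplit("/", 1)[-1].removesuffix(".json.gz")
    account_id, _service, region, timestamp, _unique = name.split("_", 4)
    return {"account_id": account_id, "region": region, "timestamp": timestamp}

for prefix in daily_prefixes("123456789012", "us-east-1", date(2022, 1, 1), date(2022, 1, 2)):
    print(prefix)
# AWSLogs/123456789012/CloudTrail/us-east-1/2022/01/01/
# AWSLogs/123456789012/CloudTrail/us-east-1/2022/01/02/
```

Each prefix can be appended to the bucket URI in an `aws s3 ls` call to list a single day's files.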

Instead of letting CloudTrail create a new bucket, you can use an existing S3 bucket configured for your use case, which opens up some advanced features. Here are some useful configurations:

S3 bucket configuration

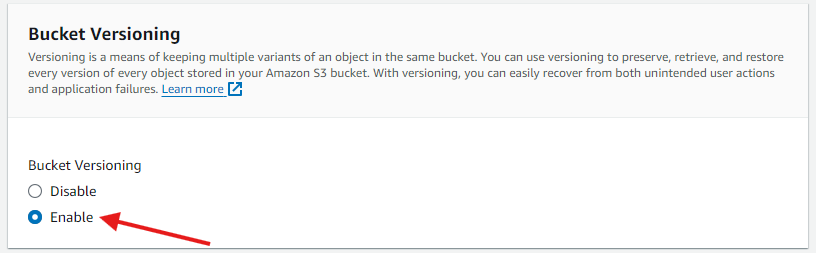

- Ensure the S3 bucket has versioning enabled for added protection. Versioning allows for easy recovery from unintended user actions and application failures.

- Set up bucket policies to restrict access to the logs.

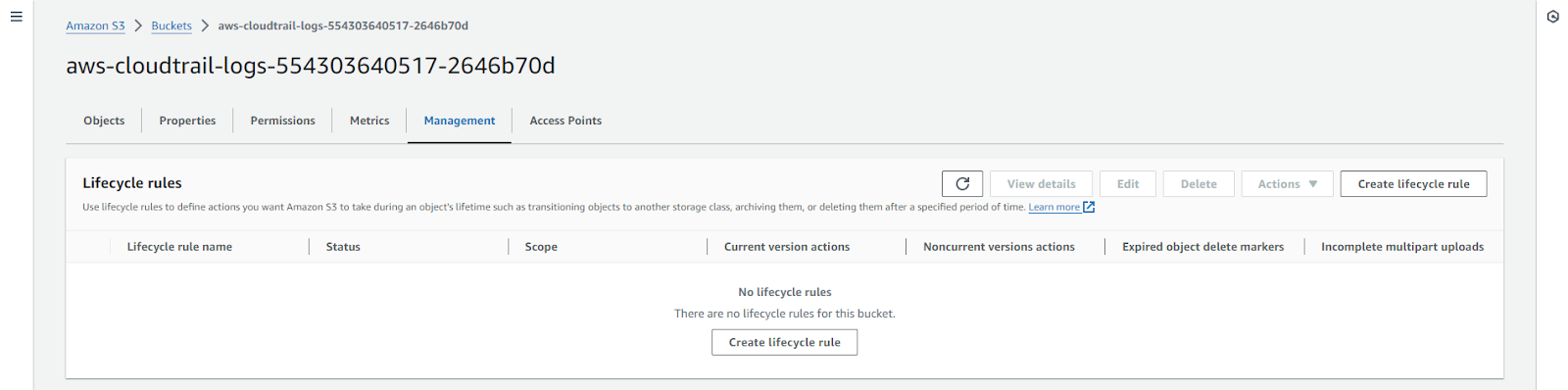

Lifecycle policies

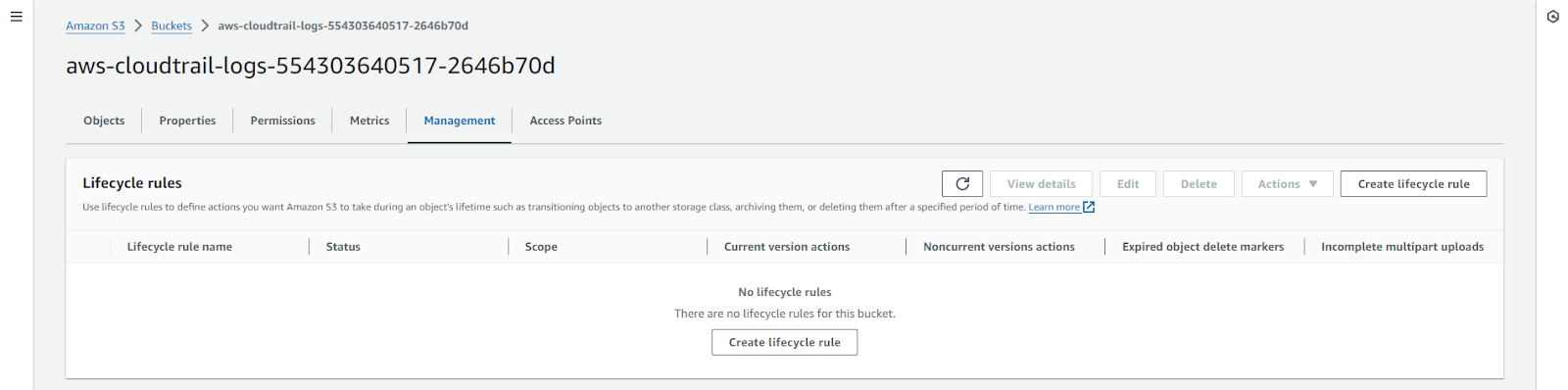

- In the S3 bucket, configure lifecycle policies to manage log file retention.

- Define rules to transition logs to colder storage classes (e.g., Glacier) after a specified period.

- Set rules for log expiration to delete old logs after a certain period.
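The lifecycle rules above can be expressed as a lifecycle configuration document. This Python sketch generates one that moves objects under the AWSLogs/ prefix to the GLACIER storage class and later expires them. The 90- and 365-day values are illustrative, not a compliance recommendation. The printed JSON follows the shape accepted by `aws s3api put-bucket-lifecycle-configuration`.

```python
import json

def cloudtrail_lifecycle(prefix: str = "AWSLogs/", glacier_after_days: int = 90,
                         expire_after_days: int = 365) -> dict:
    """Build an S3 lifecycle configuration that archives, then deletes, CloudTrail logs."""
    if expire_after_days <= glacier_after_days:
        raise ValueError("expiration must come after the Glacier transition")
    return {
        "Rules": [
            {
                "ID": "cloudtrail-log-retention",
                "Filter": {"Prefix": prefix},  # only objects CloudTrail wrote
                "Status": "Enabled",
                # Move logs to a colder, cheaper storage class after N days...
                "Transitions": [{"Days": glacier_after_days, "StorageClass": "GLACIER"}],
                # ...and delete them once the retention period ends.
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }

# Save this output as lifecycle.json to use with the AWS CLI.
print(json.dumps(cloudtrail_lifecycle(), indent=2))
```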

Best practices for naming and organizing log files

Large environments like Netflix's have a lot of logs to manage, so organization is essential. Here are some best practices:

- Consistent naming conventions: Use a clear and consistent naming convention for your trails and S3 buckets (e.g., accountName-serviceName-region-purpose).

- Hierarchical structure: Organize logs hierarchically within the S3 bucket. CloudTrail already places log files under AWSLogs/ by account ID, Region, and date, so use an S3 key prefix per trail to separate logs further, for example by service or event type.

- Tagging: Tag your CloudTrail trails and S3 buckets with metadata to make them easier to identify and manage.
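A small helper can enforce the accountName-serviceName-region-purpose convention and catch names S3 would reject. This is a sketch. The function name and the sample account name are hypothetical, and the regular expression covers only the basic S3 bucket naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit), not every edge case.

```python
import re

# Basic S3 bucket naming rules: 3-63 characters; lowercase letters, digits,
# dots, and hyphens; must start and end with a letter or digit.
BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")

def resource_name(account: str, service: str, region: str, purpose: str) -> str:
    """Join accountName-serviceName-region-purpose and check it is a valid bucket name."""
    parts = (account, service, region, purpose)
    name = "-".join(p.strip().lower().replace("_", "-") for p in parts)
    if not BUCKET_NAME.fullmatch(name):
        raise ValueError(f"{name!r} is not a valid S3 bucket name")
    return name

print(resource_name("acme-prod", "cloudtrail", "us-east-1", "audit"))
# acme-prod-cloudtrail-us-east-1-audit
```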

With CloudTrail logging set up, the next step is to focus on monitoring the logs of your AWS environment.

Monitoring CloudTrail logs

Monitoring logs manually is tedious. AWS offers several options that monitor logs and identify anomalies automatically:

CloudTrail Insights

Brief: AWS CloudTrail Insights helps users identify and respond to unusual activity associated with API calls.

How it works: CloudTrail Insights analyzes normal patterns of API call volume and API error rates, also called the baseline, and generates Insights events when the call volume or error rates are outside normal patterns.
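To illustrate the baseline idea, the sketch below compares each interval's API call count with the mean and standard deviation of the intervals before it and flags values more than three standard deviations away. This z-score rule is a deliberately simple stand-in chosen for illustration. It is not the model CloudTrail Insights actually uses, and the counts are made up.

```python
from statistics import mean, stdev

def is_unusual(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `threshold` standard deviations from the baseline."""
    baseline = mean(history)
    spread = stdev(history) if len(history) > 1 else 0.0
    if spread == 0:
        return current != baseline  # perfectly flat history: any change stands out
    return abs(current - baseline) / spread > threshold

def scan(counts: list[int], window: int = 8, threshold: float = 3.0) -> list[int]:
    """Return indexes of intervals that break from the `window` intervals before them."""
    return [i for i in range(window, len(counts))
            if is_unusual(counts[i - window:i], counts[i], threshold)]

# Made-up hourly call counts for one API; the spike at index 8 is the anomaly.
hourly_calls = [10, 12, 9, 11, 10, 13, 11, 12, 48, 11]
print(scan(hourly_calls))  # [8]
```

Once the spike enters the window, it widens the baseline for the following intervals. That is one reason production detectors rely on more robust statistics than this toy version.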

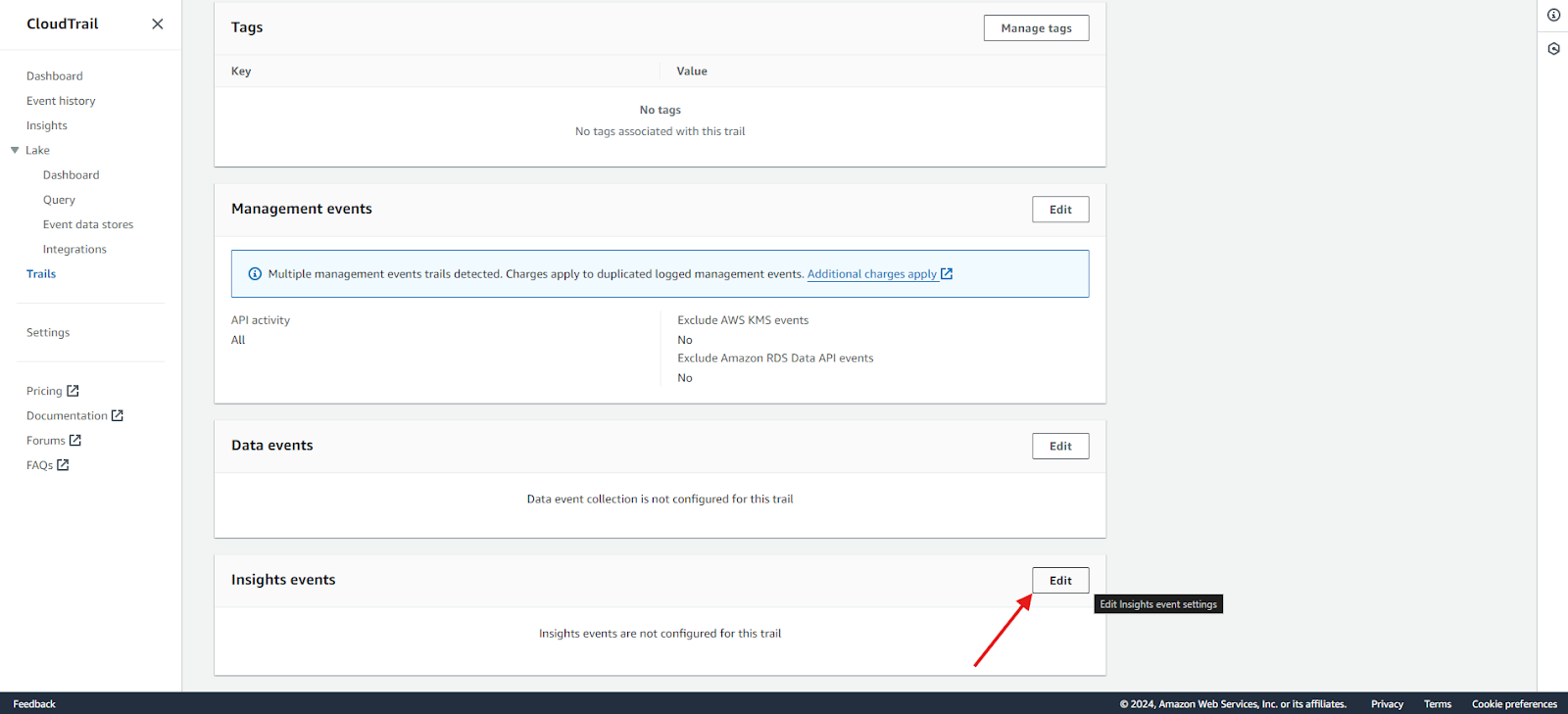

Enabling insights: The next steps will guide you on how to enable them:

- Navigate and select trail: In the CloudTrail console, go to the Trails page and select the trail name.

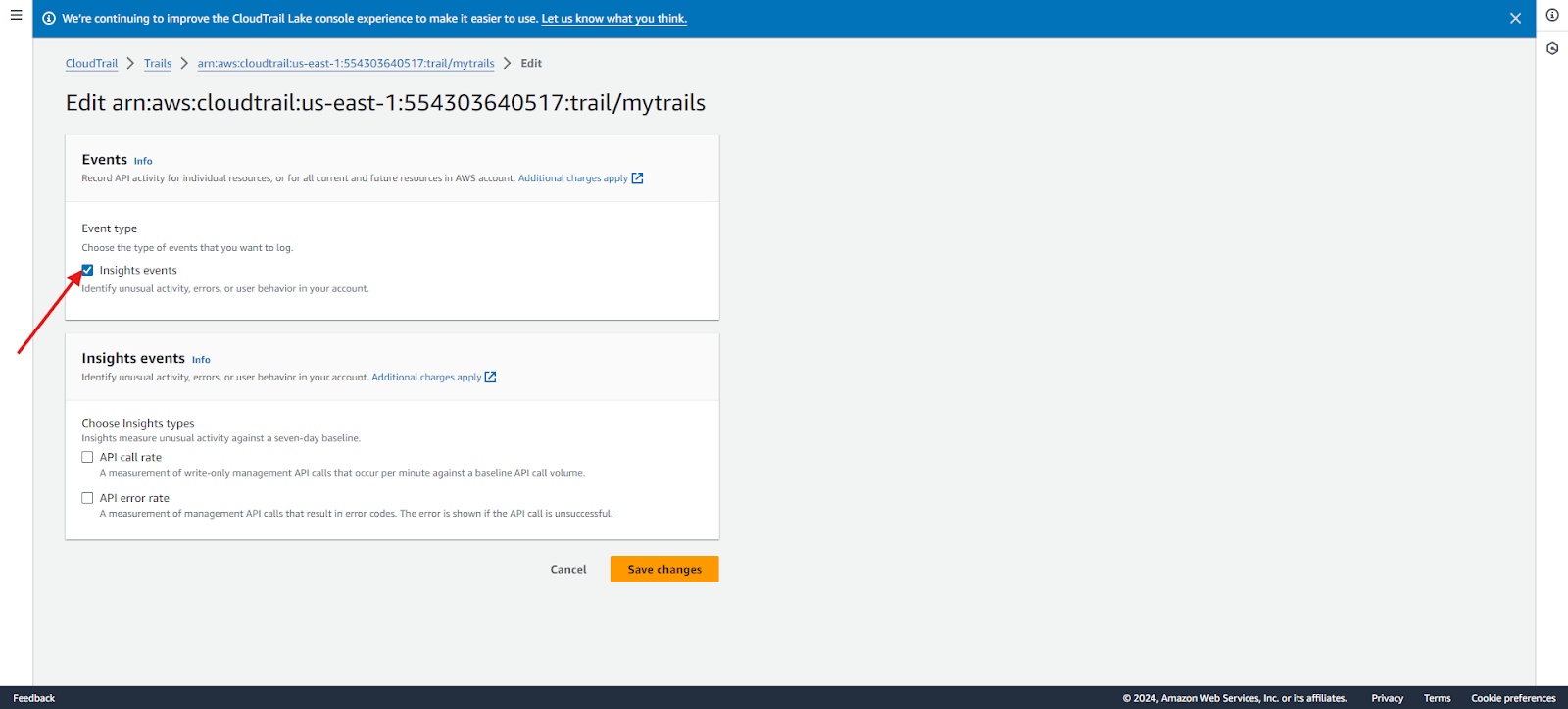

- Edit insights events: Click Edit under Insights events, then select Insights events in the Event type section.

3. Select insight type & event: In the Event type section, select Insights events.

4. Confirm: Click Save Changes to apply settings. If unusual activity is detected, it may take up to 36 hours for the first Insights events to be delivered.

CloudWatch Logs

Brief: Amazon CloudWatch Logs allows you to store, monitor, and access log files from Amazon EC2 instances, AWS CloudTrail, Route 53, and other sources.

How it works: CloudWatch Logs centralizes logs from all your systems and AWS services, allowing you to view, search, filter, and securely archive them. For CloudTrail, CloudWatch Logs Insights lets you interactively query and visualize API activity, helping you monitor and detect unusual behavior efficiently.

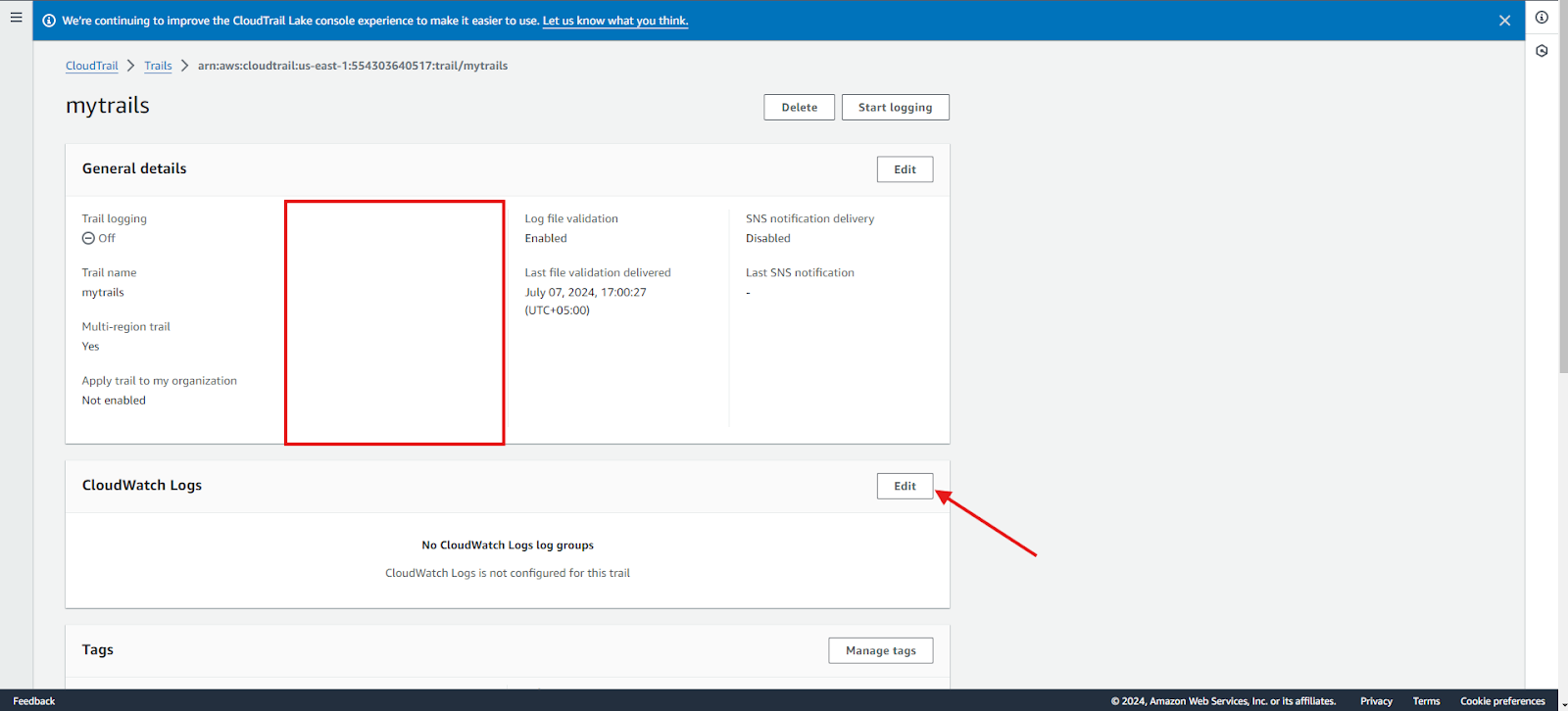

Enabling CloudWatch: Here are the steps to enable CloudWatch for CloudTrail:

1. Log in with sufficient permissions: Ensure you are logged in as an administrative user or role with permissions to configure CloudWatch Logs integration.

2. Open the CloudTrail console: Go to the AWS CloudTrail console.

3. Select trail and configure logs:

- Choose the trail name. For this, we will select myTrails.

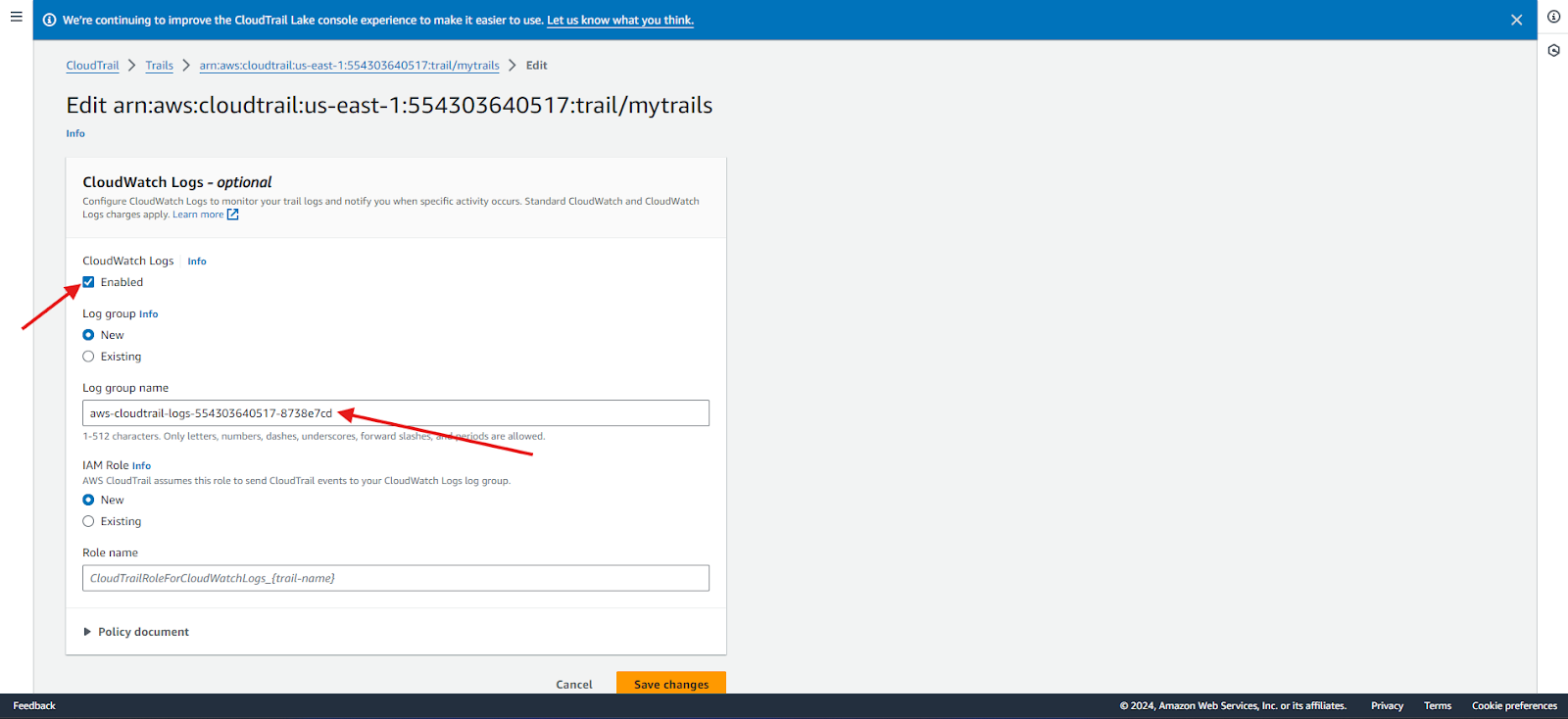

- In CloudWatch Logs, choose Edit and select Enabled for CloudWatch Logs.

4. Set up log group and IAM role:

- For Log group name, choose New to create a new log group or Existing to use an existing one.

- For the Role name, choose New to create a new IAM role or Existing to use an existing IAM role for permissions to send logs to CloudWatch Logs.

CloudTrail typically delivers events to your log group within an average of about 5 minutes of an API call.

SNS

Brief: CloudTrail delivers log files to an S3 bucket and can optionally publish an Amazon SNS notification each time it delivers a new log file.

How it works: Amazon SNS delivers messages from publishers to subscribers via a topic. Subscribers can receive messages through endpoints like Amazon SQS, AWS Lambda, HTTP, email, push notifications, and SMS.

Enabling SNS: Here are the steps for enabling SNS:

- Open CloudTrail console: Go to the CloudTrail console at AWS CloudTrail Console.

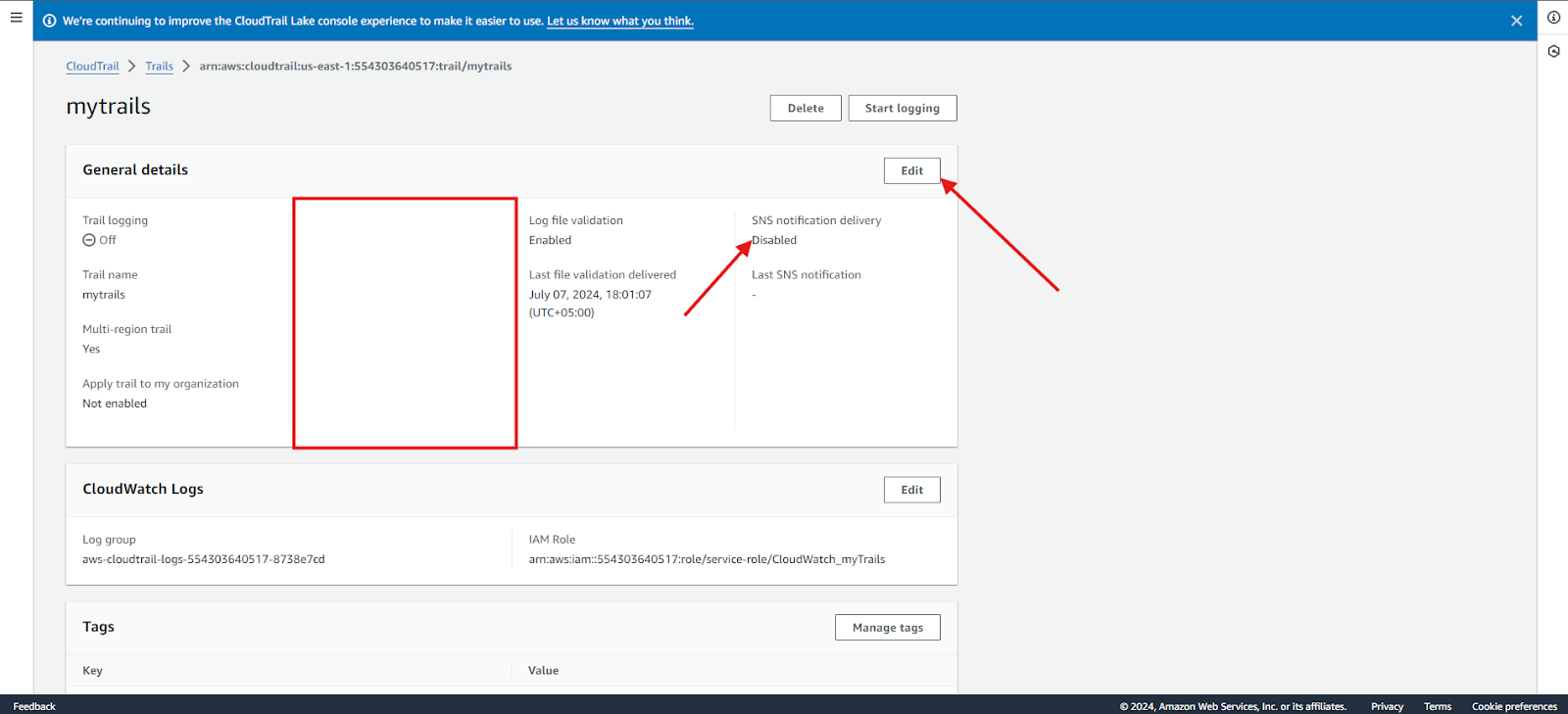

- Set up SNS: Click Edit in the General Details section. Right now, SNS is disabled.

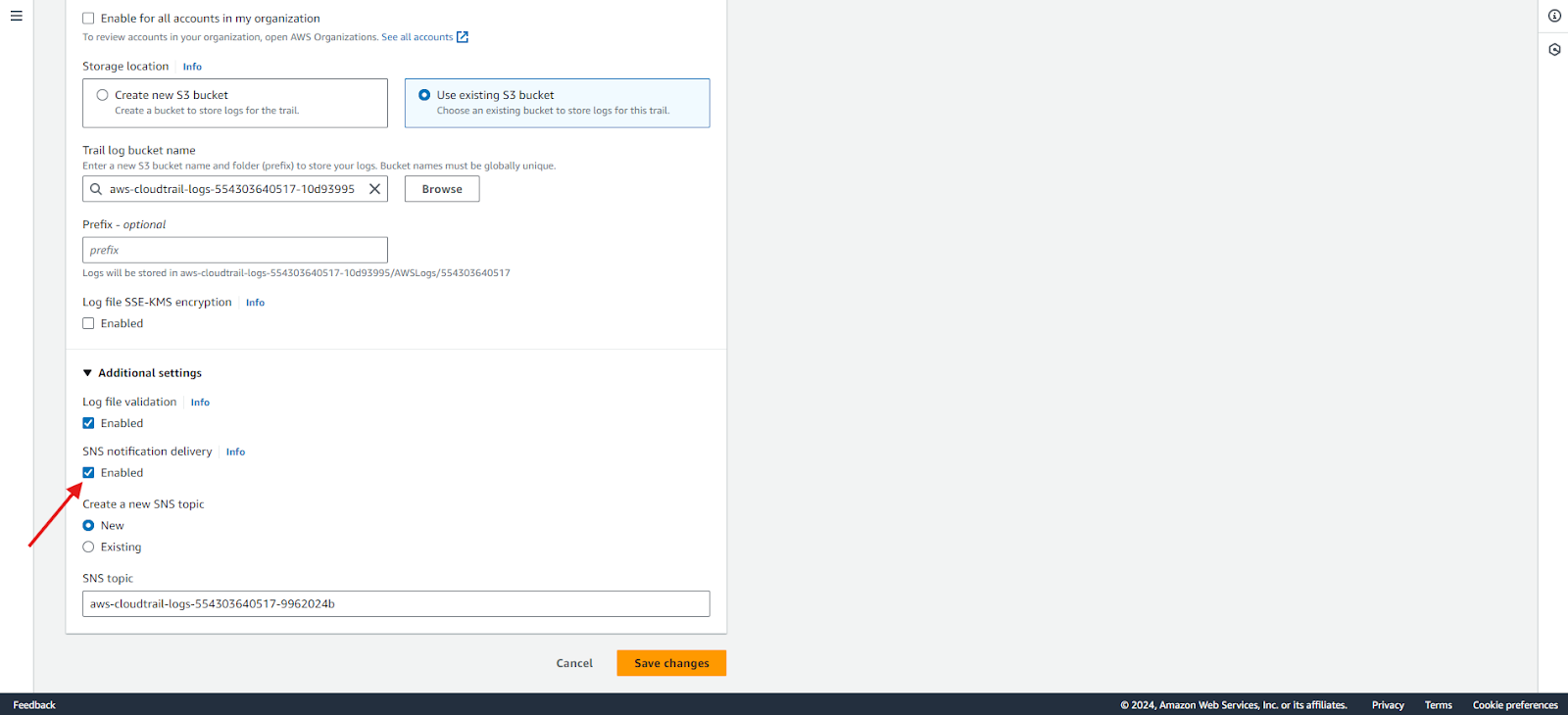

3. Enable SNS notification delivery and click Save Changes.

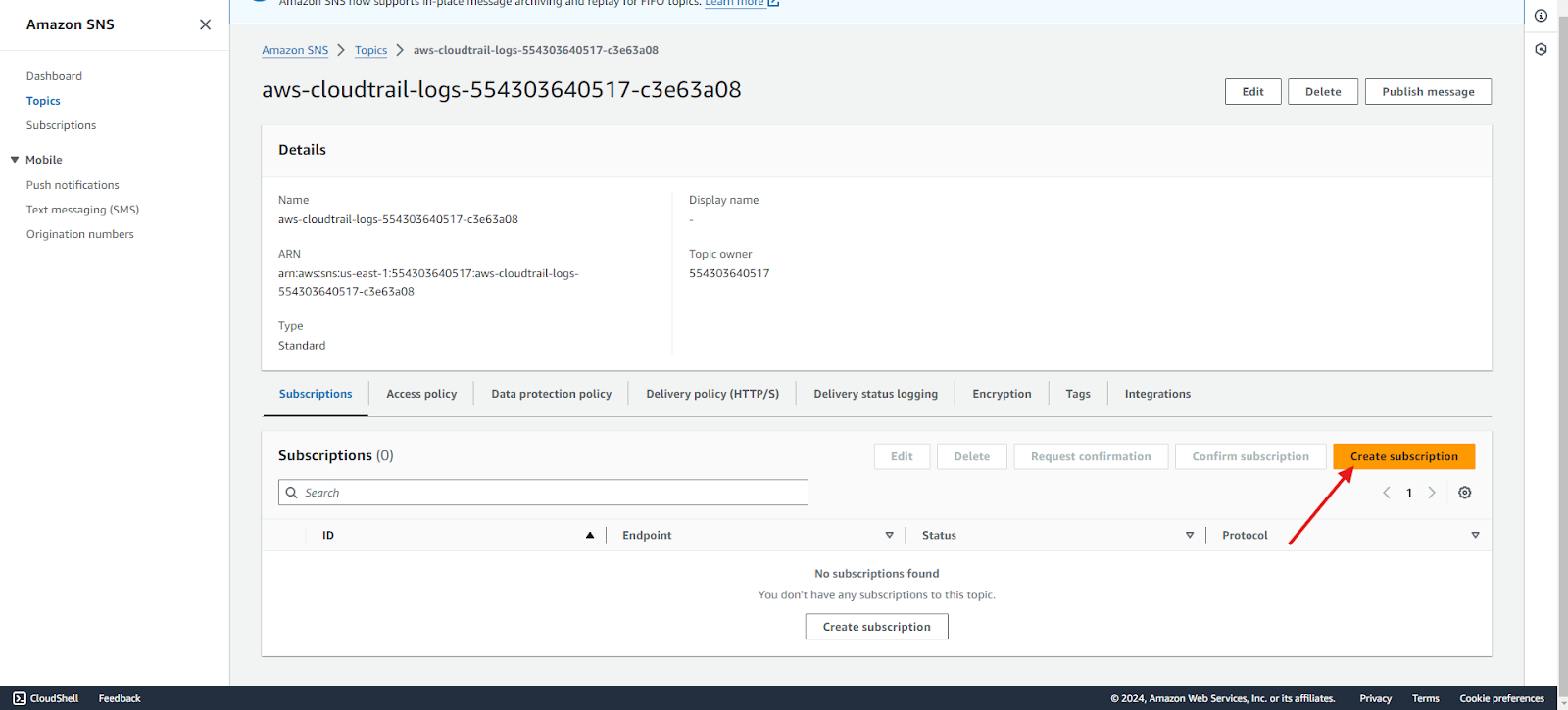

4. Create subscription: Go to Amazon SNS and select the topic created by Cloudtrail. Create a subscription to publish the results.
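If you subscribe a Lambda function to the topic, each notification tells you which new log files arrived. The notification body is JSON with an s3Bucket field and an s3ObjectKey list, and SNS wraps it as a string in the event's Sns.Message field. The sketch below extracts those (bucket, key) pairs. The function name and sample event are hypothetical. Fetching the objects themselves (for example, with boto3's `get_object`) is omitted so the example runs without AWS access.

```python
import json

def log_files_from_sns_event(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs from a Lambda event triggered by CloudTrail's SNS topic."""
    files = []
    for record in event.get("Records", []):
        # SNS delivers CloudTrail's notification as a JSON string in Sns.Message.
        message = json.loads(record["Sns"]["Message"])
        bucket = message["s3Bucket"]
        files.extend((bucket, key) for key in message.get("s3ObjectKey", []))
    return files

# Hypothetical test event shaped like an SNS-to-Lambda delivery.
event = {"Records": [{"Sns": {"Message": json.dumps({
    "s3Bucket": "my-cloudtrail-logs",
    "s3ObjectKey": ["AWSLogs/123456789012/CloudTrail/us-east-1/2022/01/01/example.json.gz"],
})}}]}

print(log_files_from_sns_event(event))
```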

Analyzing CloudTrail logs

Analyzing CloudTrail logs is crucial for security, compliance, and auditing purposes. In this section, we will use the AWS CLI and jq. Follow these steps to parse and analyze log data effectively.

1. Access and download logs: Open the S3 bucket containing your CloudTrail logs in the S3 console, or use the AWS CLI. CloudTrail log file names include the AWS Region and a creation timestamp, and files are grouped into date-based prefixes. List only the date range you need to avoid downloading large volumes of log data:

```shell
# List everything (can be very large), or narrow the listing to one day's prefix
aws s3 ls s3://my-cloudtrail-logs --recursive
aws s3 ls s3://my-cloudtrail-logs/AWSLogs/XXXXXXXXXXXX/CloudTrail/us-east-1/2022/01/01/

# Download one file (replace logfile.json.gz with a real file name from the listing)
aws s3 cp s3://my-cloudtrail-logs/AWSLogs/XXXXXXXXXXXX/CloudTrail/us-east-1/2022/01/01/logfile.json.gz .
```

Next, extract the downloaded log file:

```shell
gunzip -c logfile.json.gz > logfile.json
```

2. Parse and format logs: CloudTrail logs are JSON, so tools like jq make them easy to read and filter. For example, to find bucket deletions:

```shell
jq '.Records[] | select(.eventName == "DeleteBucket")' logfile.json
```

3. Identify API actions: Focus on the eventName field to identify critical API actions. For instance, to filter for all delete actions:

```shell
jq '.Records[] | select(.eventName | startswith("Delete"))' logfile.json
```

4. Investigate user activity: Check the userIdentity field to attribute actions to specific users:

```shell
jq '.Records[] | {user: .userIdentity.arn, action: .eventName}' logfile.json
```

5. Analyze trends and anomalies: Aggregate data to find patterns and anomalies. For example, to count events per user (group_by needs the whole array, so apply it to .Records rather than .Records[]):

```shell
jq '.Records | group_by(.userIdentity.arn) | map({user: .[0].userIdentity.arn, eventCount: length})' logfile.json
```

6. Ensure compliance and audit: Use the logs for auditing by visualizing trends or generating compliance reports. CloudTrail logs accumulate quickly, so archive logs after 60–90 days and keep only a recent subset locally for convenient analysis. Apply a lifecycle configuration to archive older logs for long-term storage:

```shell
aws s3api put-bucket-lifecycle-configuration --bucket my-cloudtrail-logs --lifecycle-configuration file://lifecycle.json
```

Following these steps will help you analyze your CloudTrail logs. Now, let's look at how to manage them.
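Before moving on: if jq pipelines grow unwieldy, the same filtering and per-user counting can be scripted. This standard-library Python sketch reads a log file (gzipped or already extracted), mirrors the delete-action filter from step 3 and the per-user count from step 5, and prints a short report. The function names are hypothetical.

```python
import gzip
import json
from collections import Counter
from pathlib import Path

def load_records(path: str) -> list[dict]:
    """Return the Records array from a CloudTrail log file (.json.gz or extracted .json)."""
    p = Path(path)
    opener = gzip.open if p.suffix == ".gz" else open
    with opener(p, "rt", encoding="utf-8") as f:
        return json.load(f).get("Records", [])

def delete_calls(records: list[dict]) -> list[dict]:
    """Equivalent to: jq '.Records[] | select(.eventName | startswith("Delete"))'"""
    return [r for r in records if r.get("eventName", "").startswith("Delete")]

def events_per_user(records: list[dict]) -> Counter:
    """Count events per caller ARN, like the group_by(.userIdentity.arn) query."""
    return Counter((r.get("userIdentity") or {}).get("arn", "unknown") for r in records)

def report(path: str) -> None:
    """Print delete actions and per-user event counts for one log file."""
    records = load_records(path)
    print("Delete actions:")
    for r in delete_calls(records):
        print(" ", r.get("eventTime"), r.get("eventName"), (r.get("userIdentity") or {}).get("arn"))
    print("Events per user:")
    for user, count in events_per_user(records).most_common():
        print(f"  {count:6d}  {user}")
```

Call `report("logfile.json.gz")` on a file downloaded in step 1. Unlike the jq version, records without a userIdentity ARN (for example, some calls made by AWS services) are grouped under "unknown".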

Best practices for CloudTrail log management

As systems grow, multiple components generate logs into files, leading to scalability issues. The following are some best practices to effectively manage log files:

1. Log rotation and retention: Log rotation, also known as log rolling, keeps individual log files small enough for easy ingestion and analysis, while log retention keeps logs from consuming more storage than necessary or available. CloudTrail handles rotation for you by delivering many small, compressed log files, roughly every five minutes, so there is nothing to configure. Retention is your responsibility, because CloudTrail log files are stored in S3 indefinitely by default. Use Amazon S3 lifecycle rules to delete old log files or archive them to an S3 Glacier storage class.

2. Access control and encryption: Use AWS IAM to restrict access so that only the right people can manage log files. Apply the principle of least privilege, granting permissions only to those who genuinely need them. For encryption, CloudTrail log files are encrypted by default with SSE-S3, and you can configure the trail to use server-side encryption with AWS KMS keys (SSE-KMS) instead. SSE-KMS adds control that SSE-S3 lacks: the key policy determines who can decrypt the logs, and each use of the key is itself recorded in CloudTrail.

3. Regular log review and analysis: Review and analyze CloudTrail logs regularly to spot and respond to unusual or unauthorized activities promptly. Use Amazon CloudWatch Logs Insights or integrate with third-party SIEM tools such as Middleware, Splunk, or Sumo Logic for advanced analysis.

We have covered best practices for creating, monitoring, and analyzing logs and log management. Next, we will explore advanced techniques for monitoring logs.

Advanced CloudTrail Log Monitoring

AWS provides many services and integrations for advanced CloudTrail monitoring; here we will discuss a few of them:

- ML and AI for anomaly detection: Anomaly detection can automatically identify and respond to unusual activity within your AWS environment. Amazon Macie uses machine learning and pattern matching to discover sensitive data in S3 and flag risky bucket configurations, while Amazon GuardDuty analyzes CloudTrail events, along with sources such as VPC Flow Logs and DNS logs, to detect threats like reconnaissance or compromised credentials within an account.

- Integrating with other AWS services: Use IAM for fine-grained access control, store logs in S3 for scalable storage, and set S3 lifecycle policies for automatic log retention. Integrate with CloudWatch for real-time monitoring and alerting to improve visibility and control over your AWS environment. You can also integrate CloudWatch with Middleware, which collects the logs through API polling using either role delegation or access keys, so you can quickly search relevant logs by the attributes of your choice.

- Customizing log analysis with AWS Lambda: AWS Lambda enables customized, near-real-time CloudTrail log analysis. Create Lambda functions that process log data and take actions based on its content. Lambda's serverless, pay-per-use model suits log processing: you pay only for the invocations and compute time your log volume requires, so lower log volumes mean lower costs.
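One common way to wire this up assumes the trail sends events to CloudWatch Logs and a subscription filter on that log group invokes Lambda. CloudWatch Logs delivers each batch as base64-encoded, gzipped JSON, and each entry in its logEvents list carries one CloudTrail event in its message field. The handler below decodes the batch and collects events that match a hypothetical watch list of sensitive actions. What you do with the alerts (SNS, ticketing, and so on) is left open.

```python
import base64
import gzip
import json

# Hypothetical watch list: event names this example treats as high-risk.
SENSITIVE_EVENTS = {"StopLogging", "DeleteTrail", "UpdateTrail", "PutBucketPolicy", "DeleteBucket"}

def decode_subscription_event(event: dict) -> dict:
    """CloudWatch Logs delivers subscription data as base64-encoded, gzipped JSON."""
    raw = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(raw))

def lambda_handler(event, context):
    payload = decode_subscription_event(event)
    if payload.get("messageType") != "DATA_MESSAGE":
        return {"alerts": []}  # e.g. the CONTROL_MESSAGE sent when the subscription is created
    alerts = []
    for log_event in payload.get("logEvents", []):
        record = json.loads(log_event["message"])  # each message is one CloudTrail event
        if record.get("eventName") in SENSITIVE_EVENTS:
            alerts.append({
                "eventName": record["eventName"],
                "user": (record.get("userIdentity") or {}).get("arn"),
                "time": record.get("eventTime"),
            })
    # A real function might publish these alerts to SNS; here we just return them.
    return {"alerts": alerts}
```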

Conclusion

Securing an AWS environment takes more than writing application code; it also requires deliberate risk-mitigation measures such as the logging and monitoring covered in this article. Here are some final tips and recommendations:

- Implement best practices: Regularly review and implement the best practices for log rotation, retention, access control, and encryption.

- Leverage advanced monitoring: Use advanced monitoring techniques like machine learning and AI to detect anomalies. Integrate CloudTrail with other AWS services like IAM, S3, and CloudWatch for a comprehensive monitoring strategy.

- Automate with AWS Lambda: Customize and automate your log analysis with AWS Lambda.

- Regularly review logs: Set up a regular log review and analysis routine to detect and respond to unusual or unauthorized activities promptly.