Logging is one of the most widely discussed topics in operations, so it pays to understand it at every level.

Logging is an essential aspect of the application development cycle. Whether you’re working on integrating containers into applications, just getting started with Docker, or more of an auditor looking to understand the container ecosystem better, this quick guide will help you achieve your goals.

What is Docker?

Docker is a tool that allows developers to create and deploy applications more efficiently. It’s an alternative to virtual machines (VMs) to run multiple isolated applications on a single control host without introducing virtual machine monitor (VMM) overheads.

Containers are lighter than VMs. Docker containers provide an additional abstraction and automation layer for OS-level virtualization on Linux. Docker’s libcontainer library works directly with the kernel’s namespaces and cgroups instead of going through LXC (also see Linux containers), which early versions of Docker relied on. Docker was developed by dotCloud Inc., the company founded by Solomon Hykes.

Docker images are the heart of Docker containers. They’re the foundation for building, shipping, and running distributed applications. These images are a great way to package your application with all the necessary parts, so you can ship and run it anywhere Docker runs.

Docker images are sometimes confused with containers, but a container is a running instance of an image. Because images are self-contained and shareable, you can deploy them to any machine with Docker installed, making it easy to run the same application on multiple machines. Built into Docker is a way to capture logs from your containers and view them locally or stream them to a logging service.

Now you’re probably wondering how to create these images.

The Docker Engine API is what creates images and containers, while the command-line interface (CLI) lets users interact with the Docker daemon. Behind the scenes, the CLI talks to the daemon through this same API.

The REST API uses HTTP requests to interact with the Docker daemon. It’s a highly usable interface for scripting or interacting with a remote Docker daemon. This is also the most feature-complete interface, preferred for interacting with the Docker Engine from remote applications.

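There are multiple ways to build Docker images using the CLI, but the most common is to build from a Dockerfile. In the command below, replace <image_name> with the tag you want to give the image:

docker build -f Dockerfile -t <image_name> .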

Why are logs and logging critical in Docker?

Logging is a crucial part of any system. Without logging, it’s difficult to troubleshoot complex issues. There are many ways to log information. A log is a record of events that occur over a period of time. Logs can be generated anywhere and are generally used for debugging or auditing.

Logging is a mechanism for capturing and recording information about a program so you can monitor and debug it while it’s running. Logging helps developers understand what their code does, operators understand how a live system behaves, and application owners understand what their application does.

Many tools or services use logs for monitoring or troubleshooting. For example, an application server running a web application will generate access logs for each request made to the server. Logs are an essential component of IT Operations. They’re the lifeblood of any system. Without logs, you can’t debug complex problems, identify performance or capacity planning issues, track down security incidents, or know who did what to your systems.

Containers have a few ways of logging, including Docker’s logging drivers, Fluentd, and rsyslog. These tools are valuable in helping you troubleshoot your containers and manage log data. Docker itself captures what a container writes to its standard output and error streams and hands it to a logging driver. Apart from keeping those logs on the host, it expects you to handle storage, search, and analysis outside of Docker.

Depending on the driver you choose, Docker either stores the logs locally or passes them to an external tool, for example over the standard System Logging (syslog) protocol. Docker also offers a plugin system for adding logging drivers, and several drivers let you customize the tags attached to container logs to make them more readable and easier to parse. This is where things get a little more complicated.

How is logging in Docker different?

Logging in Docker is a little different from a regular host. There are two main reasons for this:

- Containers are transient

- Containers are multi-leveled

Containers are temporary: they’re routinely stopped, removed, and replaced, and a container’s locally stored logs are removed along with it. Also, there’s more than one level to watch, because every application runs inside a container, and every container runs on a host managed by the Docker daemon.

Logging in Docker containers differs from traditional logging because of these tiers. When you run a container, you run it in an environment provided by the host operating system. For example, when you run a container with the “docker run” command, it runs in a Linux environment. Other processes and services also run in this environment. This can make it harder to isolate error logs and debug applications running in containers.

To solve the problem of debugging and isolating errors inside containers, you can set up logging on the host OS itself. When setting up, you collect information from all containers on the host in one location. This allows you to gather all container logs in one place instead of looking at each container’s output separately.

Docker has an option for setting up logging called “log-driver”. It lets you choose which logging driver handles container output. Docker ships with a number of drivers, and this guide focuses on four of them.

But before we get into log drivers, let’s understand why we need to store logs in the first place.

- Disaster recovery: When something goes wrong in production, you need to get a detailed picture of what happened and what the environment was like at the time. Additionally, logs can be used for monitoring, debugging metrics, and more.

- Performance analysis: You can also leverage them for performance analysis. Logs are a great tool to measure the application or service performance over time. They help compare system behavior under different conditions or monitor how your application behaves as it scales. Logs from multiple instances, machines, and regions can help you spot problems before they happen.

- Debugging: When your service behaves unexpectedly, you can examine its logs for clues about why things went wrong. Simply put, logs are an essential part of the software development and deployment process; you use them during development and in testing and staging environments when preparing for production rollout.

What are Docker logging drivers?

Docker containers are isolated processes, meaning each container has its own namespace and filesystem. When you run a container, it can’t access the host’s files unless you explicitly share them. To get log information out of the container and onto the host for debugging, you can use logging drivers.

A logging driver writes logs in plain text, JSON, or other formats. Drivers are designed for distributed environments and can be configured centrally through the daemon’s configuration file. Implementations vary slightly across platforms, but they share a similar interface.

The default logging driver in Docker is “json-file”. This driver writes container logs to a JSON file on the host system. This is convenient because you don’t need a third-party service to access your container logs, but you need to rotate or periodically remove old logs, or your disk can fill up quickly. Also, it’s not very portable because the logging driver writes to a file on the host machine.

What if you want to send logs to a different machine? In this case, Docker provides other logging drivers, including these three:

- Syslog: The syslog driver sends container logs to a syslog service, either the local one or a remote server.

- Journald: The journald driver writes container logs to the journal, the logging service used by systemd.

- Gelf: The gelf driver sends container logs using the Graylog Extended Log Format (GELF), an open-source log format for sending logs over User Datagram Protocol (UDP) or Transmission Control Protocol (TCP).

You can configure and scale all of these services independently from your application or Docker.
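As a rough sketch of how a container might be pointed at one of these remote drivers, the helpers below pass “--log-driver” and “--log-opt” to “docker run”. The function names, the DOCKER and LOG_HOST variables, and the 192.0.2.10 address (reserved for documentation) are assumptions made for illustration. The ports are the conventional defaults for syslog (514) and GELF (12201), so adjust them to match your own collector.

```shell
# DOCKER defaults to the real CLI. Setting DOCKER=echo prints each command
# instead of running it (a convenience of this sketch, not a Docker feature).
DOCKER="${DOCKER:-docker}"

# Placeholder collector address; replace it with your own log server.
LOG_HOST="${LOG_HOST:-192.0.2.10}"

# Hypothetical helper: start an image and ship its logs to a remote syslog server.
run_with_syslog() {
  "$DOCKER" run -d \
    --log-driver syslog \
    --log-opt "syslog-address=udp://${LOG_HOST}:514" \
    "$1"
}

# Hypothetical helper: start an image and ship its logs to a GELF endpoint.
run_with_gelf() {
  "$DOCKER" run -d \
    --log-driver gelf \
    --log-opt "gelf-address=udp://${LOG_HOST}:12201" \
    "$1"
}

# Example (preview only): DOCKER=echo run_with_gelf alpine
```

Nothing runs until you call one of the functions, so you can preview the exact command first and only then run it against a real daemon.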

Docker enables you to run multiple containers on your host using the same logging system. If you run your containers with the default driver, each container’s output is written to its own file, “/var/lib/docker/containers/<container_id>/<container_id>-json.log”. The raw format isn’t that useful for humans, so you’ll usually read it through the “docker logs” command or configure a logging driver that forwards the logs to a tool built for searching them.
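To get a feel for that raw format, here is a minimal sketch that works on a small sample instead of a real log file. Each line the “json-file” driver writes is a JSON object with “log”, “stream”, and “time” fields. The three sample entries and the temporary file are invented for illustration.

```shell
# Invented sample of what the json-file driver writes. Real files live under
# /var/lib/docker/containers/<container_id>/ and usually need root to read.
sample_log="$(mktemp)"
cat > "$sample_log" <<'EOF'
{"log":"server started\n","stream":"stdout","time":"2024-01-01T10:00:00.000000000Z"}
{"log":"connection refused\n","stream":"stderr","time":"2024-01-01T10:00:01.000000000Z"}
{"log":"request handled\n","stream":"stdout","time":"2024-01-01T10:00:02.000000000Z"}
EOF

# Count how many entries came from each output stream.
stdout_count=$(grep -c '"stream":"stdout"' "$sample_log")
stderr_count=$(grep -c '"stream":"stderr"' "$sample_log")
echo "stdout=$stdout_count stderr=$stderr_count"   # prints: stdout=2 stderr=1

# Remove the temporary sample file.
rm -f "$sample_log"
```

A plain “grep” is enough for a quick count like this. For anything more involved, a JSON-aware tool is a safer choice than matching on text.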

How to configure a Docker logging driver

Docker includes multiple logging drivers that help extract information from running containers. Unless you configure the Docker daemon to use a different logging driver, it always uses the default, “json-file”, which stores logs as JSON.

Configuring the default logging driver

To configure the Docker daemon to use a specific logging driver, you need to edit its configuration file, “daemon.json”. On Linux, you can find this file at “/etc/docker/daemon.json”. On Windows, it’s located at “%programdata%\docker\config\daemon.json”. In this file, set the “log-driver” key to the name of the driver you want as the default. The example below sets it to “json-file”, which is also the value Docker uses when the key is absent. Restart Docker for the change to take effect; it applies only to containers created afterward.

{
  "log-driver": "json-file"
}

If a logging driver has configurable options, you can set them with the “log-opts” key. Here’s an example:

{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3",
    "labels": "production",
    "env": "os"
  }
}
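As a rough sanity check on the rotation settings above, the sketch below estimates how much disk space the logs can take. It assumes “10m” means roughly 10 MB per file, that “max-file” caps the number of files kept per container, and a hypothetical host running 20 containers.

```shell
# Values taken from the log-opts example: 3 files of about 10 MB each.
max_size_mb=10
max_file=3

# Hypothetical number of containers on the host.
containers=20

# Upper bound per container, then across the whole host.
per_container_mb=$((max_size_mb * max_file))
total_mb=$((per_container_mb * containers))

echo "per container: ${per_container_mb} MB, host total: ${total_mb} MB"
# prints: per container: 30 MB, host total: 600 MB
```

The result is an approximate ceiling, which is usually all you need when sizing the disk that holds “/var/lib/docker”.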

You can use this command to know what’s configured:

docker info --format '{{.LoggingDriver}}'

This returns the current logging driver associated with the Docker daemon.

Configuring the logging driver for a container

If you want a container to use a different logging driver than the one configured in the Docker daemon, you can pass the “--log-driver” flag when you run the container.

docker run -it --log-driver local alpine ash

This command starts an Alpine container with “local” as the logging driver.
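Driver options can be set per container as well, using “--log-opt”. The sketch below wraps that in a small helper. The function name, the DOCKER variable, and the “web-demo” and “nginx:alpine” values in the example are assumptions chosen for illustration.

```shell
# DOCKER defaults to the real CLI. Setting DOCKER=echo prints the command
# instead of running it (a convenience of this sketch, not a Docker feature).
DOCKER="${DOCKER:-docker}"

# Hypothetical helper: start a detached container whose json-file logs are
# capped at 3 files of 10 MB each, whatever the daemon-wide default is.
run_with_capped_logs() {
  name="$1"
  image="$2"
  "$DOCKER" run -d --name "$name" \
    --log-driver json-file \
    --log-opt max-size=10m \
    --log-opt max-file=3 \
    "$image"
}

# Example: run_with_capped_logs web-demo nginx:alpine
```

Per-container flags like these override the daemon defaults only for the container being started, which makes them handy for trying a setting before changing “daemon.json”.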

To find a specific container’s logging driver, you can use the “docker inspect” command with the container’s ID or name.

docker inspect -f '{{.HostConfig.LogConfig.Type}}' <container_id>

Once the container is running, you can view its logs with the “docker logs” command.

docker logs <container_id>

To keep following new log output as it arrives, use:

docker logs -f <container_id>
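On a busy container, the full log can be overwhelming, so it often helps to narrow the output. The sketch below combines the “--timestamps”, “--since”, and “--tail” options of “docker logs”. The helper name, the one-hour window, and the default of 100 lines are assumptions for illustration.

```shell
# DOCKER defaults to the real CLI. Setting DOCKER=echo prints the command
# instead of running it (a convenience of this sketch, not a Docker feature).
DOCKER="${DOCKER:-docker}"

# Hypothetical helper: show the last N lines (default 100) that a container
# logged during the past hour, each prefixed with its timestamp.
recent_logs() {
  container="$1"
  lines="${2:-100}"
  "$DOCKER" logs --timestamps --since 1h --tail "$lines" "$container"
}

# Example: recent_logs web-demo 50
```

Adding “-f” to the same command would keep the stream open, which is useful while reproducing a problem.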

Docker logging strategies

Docker offers many logging strategies. Some of these are discussed below.

Docker logging driver

The Docker logging driver is the most popular method for collecting logs. When you start a Docker container, Docker captures all the output from Standard Out (STDOUT) and Standard Error (STDERR) by default and hands it to the configured driver. Depending on the driver, the logs stay on the host or go to a centralized logging cluster, so you can collect and view logs from your “dockerized applications”.

The default behavior is to write all container logs to JSON files on the host, but you can switch drivers when that doesn’t fit. Since this logging approach is native to Docker, the configuration process is easy, and the logs can be collected in a centralized location. But this also makes containers dependent on host machines. You can use the default logging driver if your system is simple and you don’t want to set up a complex logging process.

Dedicated logging container

A common pattern in Docker logging is to create a dedicated logging container that accepts syslog messages from other containers and stores them on disk in a structured format (specifically JSON) so that the ecosystem of log management tools can easily consume them. This also provides a common entry point for the various logging tools.

The main benefit of using a dedicated logging container is that you don’t need to worry about logging issues when scaling because you can just add more logging containers. The trade-off is that the application containers must know about the logging container. With this strategy, logging no longer depends on how each host machine is configured. You should use a dedicated logging container to collect logs locally before sending them to a log management tool or analytics platform.

Sidecar approach

In this approach, each application container is paired with a second container, the sidecar, whose only job is to handle that application’s logs. You can still use the existing Docker API calls to control logging, but you have more logging flexibility. A typical sidecar reads the application’s log output and writes it to STDOUT by default. Docker captures that output and sends it to a central logging server running Fluentd or Logstash.

With Fluentd and Logstash, you can collect log data from multiple servers and forward it to a central location for aggregation and analysis. Following a sidecar approach allows you to fully customize your application logging approach. It’s also pretty easy to maintain since logging is done automatically.

However, the major issue is its complexity. Setting up sidecar logging can be difficult, and the extra containers consume more resources. Use the sidecar approach when you have a large distributed system and want granular access to your logging process.

Logging made simple

Logging in Docker is fairly simple in principle, but various complexities can mar it. The main goal here is to get a good overview of the capabilities of a Docker logging system. This handy guide should expand your knowledge and help you set up your monitoring capabilities so that everything goes in the right direction.

That said, troubleshooting applications through logs is time-consuming, and setting up logging drivers requires solid technical expertise.

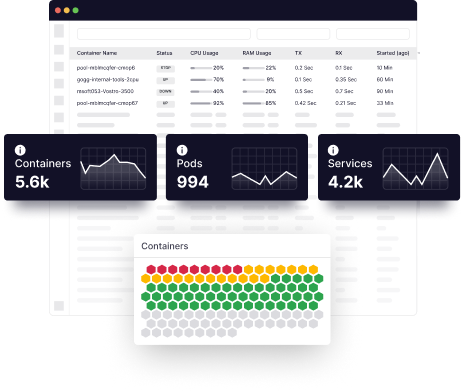

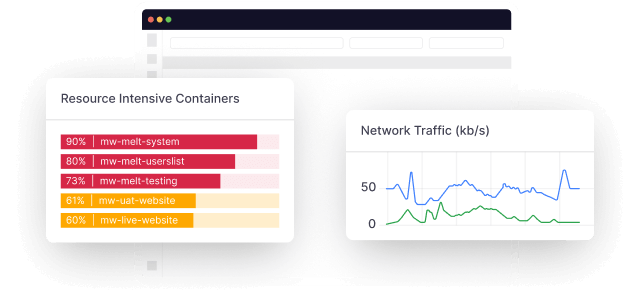

Platforms like Middleware set up a logging driver with the most suitable settings and show you the container logs in real time!