Gone are the days when a single hardware failure brought an organization’s entire infrastructure to a complete standstill. With modern databases and cloud-based architecture, we now have sophisticated mechanisms to keep your database protected and running despite operational issues.

However, safer doesn’t mean immune to downtime or failures. Database failures remain a persistent threat for most businesses and can cause significant financial losses or reputational damage if they strike at a crucial time.

But why do database failures occur? The reasons range from hardware malfunctions to human error, and even a small mistake can bring down an entire database. To mitigate these risks, organizations must adopt a proactive approach to database management. This involves implementing a robust set of strategies to prevent failures, protect data integrity, and ensure business continuity.

In this comprehensive guide, we will explore nine essential safety tips for safeguarding your database and minimizing the impact of potential disruptions.

9 ways to prevent database failures

So why do database failures still occur, even though modern setups are safer than traditional hardware-based infrastructure? There are several causes: hardware failures, software failures, network failures, bugs in the database, and security breaches.

Surprisingly, in one global report, 70% of organizations named “careless users” as a cause of data loss, while fewer than 50% cited technical issues.

Thus, the ideal approach is preventive: take steps that minimize the risk of failure and contain the impact if one does occur. Here are nine proactive steps you can implement to keep your data safe and ensure business continuity.

1. Regular backups

The best way to avoid data loss during a database failure is always to have a backup. It’s that simple!

By regularly backing up your data, you can restore the last saved copy and get back in action quickly, minimizing data loss from hardware failures, cyberattacks, or human errors. Depending on how heavily your organization relies on the database, plan your backups around the following:

- Frequency: Determine the optimal backup frequency based on data criticality and business requirements. Your Recovery Point Objective (RPO), the maximum amount of data loss the business can tolerate, measured in time, sets the upper bound on the interval between backups. For example, with an RPO of 10 hours, backups must run at least every 10 hours.

- Backup types: Consider full, incremental, and differential backups to balance data protection and storage efficiency.

- Backup media: Choose reliable storage options like tapes, disks, or cloud-based storage.

- Backup testing: Regularly test your backup and restore processes to verify data integrity and recovery time objectives.

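- Backup retention: Define backup retention policies to manage storage space and compliance requirements. Keep your Recovery Time Objective (RTO) in mind as well: it is the maximum time the database may stay down after a failure. For example, with an RTO of 3 hours, the backups you retain must be restorable within 3 hours.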
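
As a minimal sketch of the backup testing point above, the Python snippet below copies a SQLite database with the standard library's `sqlite3` online backup API and then runs `PRAGMA integrity_check` on the copy. SQLite is only a stand-in so the example stays self-contained; the function name `backup_and_verify` and the file paths are hypothetical, and a production database would rely on its own backup tooling.

```python
import sqlite3


def backup_and_verify(source_path: str, backup_path: str) -> bool:
    """Copy a SQLite database, then confirm the copy passes an integrity check."""
    source = sqlite3.connect(source_path)
    target = sqlite3.connect(backup_path)
    try:
        # Connection.backup copies the whole database, even while it is in use.
        source.backup(target)
        # integrity_check returns a single row containing "ok" for a healthy file.
        status = target.execute("PRAGMA integrity_check").fetchone()[0]
        return status == "ok"
    finally:
        source.close()
        target.close()
```

Calling `backup_and_verify("app.db", "app.backup.db")` on a schedule is one way to fold verification into every backup run, though a full restore test should still confirm that the data is usable.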

2. Database monitoring

Database monitoring is a systematic approach to tracking the health of your database systems, including their network and query performance.

This allows you to gain deep insights into the behavior and performance of your entire database, enabling you to identify slow queries, address bottlenecks, and take action regarding resource constraints that could impact your overall performance. An effective database monitoring process usually involves the following:

- Tracking key metrics, such as CPU utilization, memory usage, disk I/O, query performance, and error rates.

- Real-time monitoring using advanced database monitoring and observability tools to gain insights into the health and performance of your database.

- Setting up alerts to notify you of abnormal behavior or performance deviations in your database.

- Monitoring performance data 24/7 to identify and address performance bottlenecks before they can lead to a slowdown or database failures.

- Forecasting resource requirements based on database growth and workload trends.
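
To make the metric tracking and alerting steps above concrete, here is a small Python sketch of threshold-based alerting. The metric names, the limits, and the `find_breaches` helper are all illustrative assumptions, not values recommended by any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str   # name of the metric, e.g. "cpu_percent"
    limit: float  # alert when the sampled value is above this limit


def find_breaches(sample, thresholds):
    """Return the names of metrics whose sampled value exceeds its limit."""
    breaches = []
    for threshold in thresholds:
        value = sample.get(threshold.metric)
        # Metrics missing from the sample are skipped rather than treated as breaches.
        if value is not None and value > threshold.limit:
            breaches.append(threshold.metric)
    return breaches


# Hypothetical limits; tune them to your own workload.
THRESHOLDS = [
    Threshold("cpu_percent", 85.0),
    Threshold("memory_percent", 90.0),
    Threshold("error_rate", 0.05),
]
```

Given a sample such as `{"cpu_percent": 97.0, "memory_percent": 40.0, "error_rate": 0.01}`, `find_breaches(sample, THRESHOLDS)` returns `["cpu_percent"]`, which an alerting job could then forward to a pager or chat channel.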

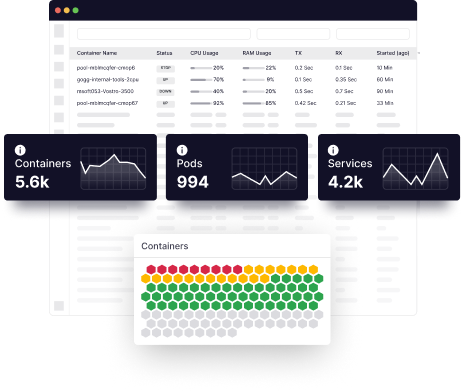

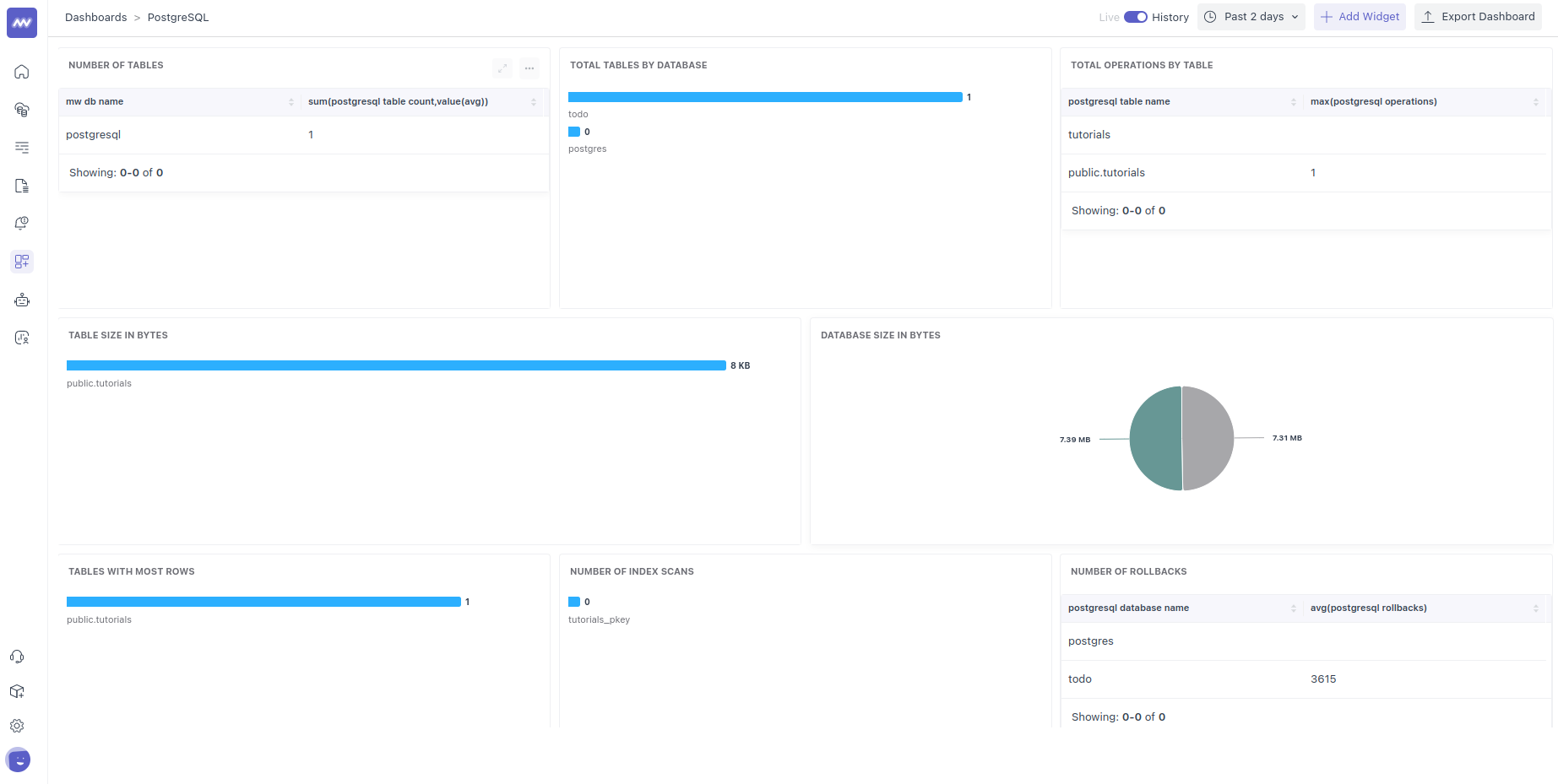

Comprehensive observability solutions like Middleware can help you proactively monitor and manage your database activities. Middleware is an all-encompassing tool that provides detailed insights into your database’s health and performance, enabling you to identify potential issues before they escalate into major problems.

This proactive database monitoring allows you to perform activities like:

- Real-time monitoring: Continuously monitor your database metrics such as CPU utilization, memory usage, disk I/O, query performance, and error rates.

- Anomaly detection: Leverage advanced analytics to identify unusual patterns and deviations from normal behavior, alerting you to potential issues before they impact your database.

- Root-cause analysis: Middleware helps pinpoint the root cause of database performance issues by correlating database metrics with other system data, such as application logs and infrastructure metrics.

- Capacity planning: By analyzing database growth trends, Middleware helps you anticipate future resource requirements and avoid performance bottlenecks.

- Index management: Middleware offers recommendations for creating, dropping, or rebuilding indexes to improve query performance.

- Improved MTTR: By providing a comprehensive view of your system, Middleware helps reduce the time it takes to resolve database problems, improving your mean time to repair (MTTR).

- Optimized MTTD: Middleware’s early warning capabilities help detect issues sooner, reducing your mean time to detect (MTTD).
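
For intuition about anomaly detection, the sketch below flags a reading that sits far from the recent average, using a simple z-score. This is a generic statistical technique chosen for illustration; it is not a description of how Middleware or any other product detects anomalies, and the `is_anomalous` name and the default limit of 3 standard deviations are assumptions.

```python
from statistics import mean, pstdev


def is_anomalous(history, latest, z_limit=3.0):
    """Flag `latest` if it lies more than `z_limit` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to define "normal"
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat history: any different value is a deviation.
        return latest != baseline
    return abs(latest - baseline) / spread > z_limit
```

With recent query latencies of `[100, 102, 98, 101, 99, 100]` milliseconds, a new reading of 101 is treated as normal while a reading of 450 is flagged. Real workloads with daily or weekly cycles usually need a seasonality-aware baseline instead.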

3. Schema optimization

Regularly reviewing and optimizing your database schema is crucial for maintaining performance and preventing bottlenecks. Some of the key aspects of schema optimization include:

- Normalization: Ensure data is organized into tables with minimal redundancy and dependencies.

- Indexing: Create appropriate indexes to speed up query execution on frequently accessed columns.

- Data types: Choose the correct data types for columns to optimize storage and performance.

- Table partitioning: Divide large tables into smaller, more manageable partitions for improved query performance and manageability.

- Regular review: Periodically assess your schema for potential optimization opportunities.
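
The effect of the indexing step above can be observed directly in a query plan. The Python sketch below uses an in-memory SQLite database purely as a convenient stand-in; the `orders` table, the `idx_orders_customer` index, and the `query_plan` helper are hypothetical names introduced for the example.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's query plan for `sql` as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row is the human-readable plan detail.
    return " | ".join(row[-1] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

lookup = "SELECT * FROM orders WHERE customer_id = ?"
plan_before = query_plan(conn, lookup, (42,))  # no index yet: the table is scanned

conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, lookup, (42,))   # the plan now names the new index
```

Printing `plan_before` and `plan_after` shows the planner switching from a scan of `orders` to a search through `idx_orders_customer`. Other database engines expose the same idea through their own `EXPLAIN` variants, with different output formats.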

4. Query optimization

Applications read and write data through queries, so efficient query execution is essential to database performance. Some key strategies for query optimization are:

- Query analysis: Identify slow-performing queries and analyze their execution plans.

- Indexing: Ensure appropriate indexes are in place to support query execution.

- Query rewriting: Rewrite inefficient queries to improve performance.

- Parameterization: Use parameterized queries to reduce query compilation overhead.

- Caching: Implement query caching to store frequently executed queries and their results.

- Query tuning: Fine-tune query parameters and execution plans for optimal performance.
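
As an illustration of the parameterization strategy above, the Python sketch below passes user input through a `?` placeholder instead of building the SQL string by hand. Because the statement text stays constant, it can be prepared once and reused, and the input is never interpreted as SQL. The `users` table and the `find_user` helper are hypothetical, and SQLite is used only to keep the example self-contained.

```python
import sqlite3


def find_user(conn, username):
    """Look up a user with a parameterized query instead of string formatting."""
    # The "?" placeholder sends `username` as data, never as SQL text.
    cursor = conn.execute("SELECT id, username FROM users WHERE username = ?", (username,))
    return cursor.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
```

Here `find_user(conn, "alice")` returns the single matching row, while a hostile input such as `"' OR '1'='1"` matches nothing because it is compared as a literal string.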

5. Resource management

Allocating sufficient resources to your database is essential for optimal performance and preventing bottlenecks. Proper resource management involves:

- Hardware resources: Ensure adequate CPU, memory, and storage capacity to handle the database workload.

- Software resources: Allocate sufficient licenses for database software and related tools.

- Monitoring resource utilization: Track resource consumption to identify potential bottlenecks and adjust allocations as needed.

- Capacity planning: Forecast future resource requirements based on database growth and workload trends.
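
Capacity planning can start with something as simple as a straight-line forecast. The sketch below fits a least-squares line to daily disk-usage readings and estimates how many days remain before a given capacity is reached. The `days_until_full` name and the assumption of steady linear growth are simplifications made for illustration.

```python
def days_until_full(daily_usage_gb, capacity_gb):
    """Estimate days until `capacity_gb` is reached, using a least-squares line.

    `daily_usage_gb` holds one disk-usage reading per day, oldest first.
    Returns None when usage is flat or shrinking, or there are too few readings.
    """
    n = len(daily_usage_gb)
    if n < 2:
        return None
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(daily_usage_gb) / n
    # Slope of the best-fit line = growth in GB per day.
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, daily_usage_gb))
    denominator = sum((x - x_mean) ** 2 for x in xs)
    slope = numerator / denominator
    if slope <= 0:
        return None
    remaining_gb = capacity_gb - daily_usage_gb[-1]
    return max(remaining_gb, 0) / slope
```

For readings of `[100, 102, 104, 106, 108]` GB and a 128 GB volume, the growth rate is 2 GB per day and the estimate is 10 days. Bursty or seasonal workloads call for a more careful model than a single trend line.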

6. Access control

Protecting your database from unauthorized access is crucial for maintaining data integrity and security. Access controls limit each user to the parts of your database and infrastructure they actually need. You can define access controls using measures like:

- Authentication: Verify the identity of users attempting to access the database through strong password policies, multi-factor authentication, and biometric verification.

- Authorization: Define user permissions based on roles and responsibilities, granting access only to necessary data and functions.

- Least privilege principle: Grant users the minimum privileges required to perform their tasks.

- Regular reviews: Periodically review and update user permissions to ensure they align with current roles and responsibilities.

- Security awareness training: Educate users about the importance of data security and best practices for protecting sensitive information.
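
The authorization and least privilege points above boil down to a deny-by-default permission check. The Python sketch below shows the idea with a plain dictionary; the role names, the action names, and the `is_allowed` helper are hypothetical, and a real deployment would normally use the database's own roles and grants rather than application code alone.

```python
# Each role lists only the actions it genuinely needs (least privilege).
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "developer": {"read", "write"},
    "dba": {"read", "write", "alter_schema", "manage_users"},
}


def is_allowed(role, action):
    """Return True only if `role` was explicitly granted `action`."""
    # Unknown roles get an empty permission set, so the default is to deny.
    return action in ROLE_PERMISSIONS.get(role, set())
```

With this mapping, `is_allowed("analyst", "read")` is true, while `is_allowed("analyst", "write")` and any check for an unrecognized role are false.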

7. Patch management

Keeping your database software up-to-date with the latest patches is essential for maintaining security and performance. Regular patch management helps address vulnerabilities, improve stability, and enhance overall database reliability.

Key aspects of patch management include:

- Patch testing: Thoroughly test patches in a controlled environment before deploying them to production.

- Patch scheduling: Develop a patch deployment schedule to minimize disruptions to database operations.

- Change management: Implement change management processes to document patch installations and track their impact.

- Vulnerability assessment: Regularly assess your database environment for vulnerabilities and prioritize patch applications accordingly.

- Emergency patching: Plan to apply critical patches outside of regular maintenance windows in case of security threats.

8. Disaster recovery plan

Since database failures are sometimes unavoidable, you need a comprehensive disaster recovery plan for when they happen. The plan should list the steps and resources you will use to restore service while minimizing downtime, data loss, and disruption to your business.

A complete disaster recovery plan should include:

- Risk assessment: Identify potential threats and vulnerabilities to your database environment.

- Recovery objectives: Based on business requirements, define recovery time objectives (RTO) and recovery point objectives (RPO).

- Backup and recovery procedures: Develop detailed procedures for backing up and restoring database data.

- Testing and validation: Regularly test your disaster recovery plan to ensure its effectiveness.

- Communication plan: Establish communication channels for coordinating disaster recovery efforts.

- Documentation: Maintain up-to-date documentation of the disaster recovery plan and procedures.
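
One way to turn the recovery objectives and testing items above into a measurable check is to score each recovery drill against your RTO and RPO. The sketch below does this with plain timestamps; the `evaluate_drill` function and its field names are hypothetical, and the targets you pass in should come from your own business requirements.

```python
from datetime import datetime, timedelta


def evaluate_drill(last_backup_at, failure_at, restored_at, rpo, rto):
    """Compare one recovery drill against the RPO and RTO targets."""
    data_loss_window = failure_at - last_backup_at  # work that could not be restored
    downtime = restored_at - failure_at             # how long the database was unavailable
    return {
        "data_loss_window": data_loss_window,
        "downtime": downtime,
        "rpo_met": data_loss_window <= rpo,
        "rto_met": downtime <= rto,
    }
```

For example, if the last backup finished at 02:00, the failure hit at 09:30, and service returned at 11:00, the drill shows a 7.5-hour data loss window and 1.5 hours of downtime, which you can then compare with targets such as `timedelta(hours=10)` and `timedelta(hours=3)`.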

9. Database high availability

Sustained heavy load on a database’s resources is a major cause of failures. To ensure uninterrupted database access and minimize downtime, consider implementing high-availability solutions. These solutions provide redundancy and failover mechanisms to protect against database failures. Some of the approaches for managing database availability include:

- Database clustering: Create a cluster of database servers where one acts as the primary and others as standbys.

- Database replication: Maintain multiple copies of the database across different servers.

- Load balancing: Distribute database traffic across multiple servers to improve performance and availability.

- Failover mechanisms: Implement automatic failover to a standby database in case of primary database failure.

- Regular testing: Test high-availability configurations to ensure their effectiveness.
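
The failover idea above can be sketched as a priority-ordered health check: use the primary while it is healthy, otherwise fall back to the first healthy standby. The server names and the `pick_server` helper below are hypothetical, and the sketch deliberately leaves out the hard parts of real failover, such as promoting a standby and preventing two servers from acting as primary at once.

```python
def pick_server(servers, is_healthy):
    """Return the first healthy server, trying the primary before any standby.

    `servers` is ordered: primary first, then standbys in failover priority.
    `is_healthy` is a callable that takes a server name and returns True or False.
    """
    for server in servers:
        if is_healthy(server):
            return server
    raise RuntimeError("no healthy database server available")
```

Given `["db-primary", "db-standby-1", "db-standby-2"]` and a health check that reports the primary as down, `pick_server` returns `"db-standby-1"`. Running the same function in a scheduled test against deliberately stopped servers is a simple way to exercise the regular testing step.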

Conclusion

Database failures can be a nightmare, but with proper precautions, their frequency and impact can be controlled. The strategies outlined in this guide will help you reduce the risk of these failures and ensure smooth operations. However, to spot an impending problem, you need constant monitoring so that any issue or anomaly gets flagged early, giving you ample time to mitigate it.

Database monitoring tools like Middleware offer several features that help you proactively monitor your database, preventing failures and optimizing overall performance. This also lets you gain valuable insights into your database performance and take the right steps to safeguard it.

Ready to explore how Middleware can help you achieve database resilience and peace of mind? Try us for free today!