Developers rank Docker as the leading technology for containerization, and it’s considered a “game-changer” in DevOps. Many large companies use Docker containers to manage their infrastructures, including Airbnb, Google, IBM, Microsoft, Amazon, and Nokia.

In the few years since its inception, the Docker ecosystem has quickly become the de facto standard for managing containerized applications. For those new to Docker, it can be daunting to understand how the ecosystem works and how to keep up as it evolves. Let’s get the basics out of the way first.

Docker and containerization

Docker is a relatively new technology, but it’s already well established in DevOps. It gives developers and system administrators an open platform to deploy, manage, and run applications in containers. Each container behaves like a separate Linux system with its own filesystem and processes, but unlike a virtual machine it shares the host machine’s kernel instead of running a complete operating system (OS) of its own.

A containerization platform lets you quickly build, test, and deploy applications as portable, self-sufficient containers that can run virtually anywhere.

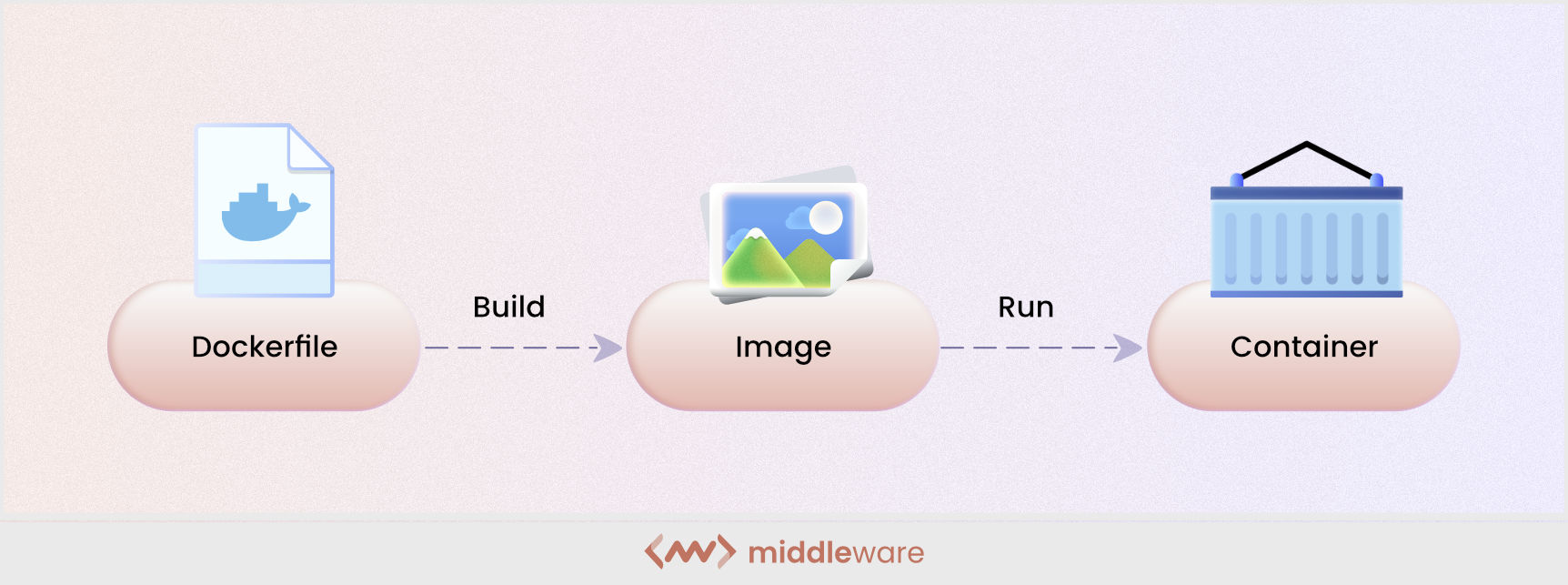

When learning more about Docker, images and containers pop up first. These two fundamental elements are critical to understanding the Docker ecosystem. So, what exactly are they?

An image is a read-only template from which containers are created. It packages the files and settings a container needs to run on the host operating system, and it is built with the “docker build” command.

Containers, on the other hand, are runtime instances of an image. Each one runs applications and software in an isolated environment, bundled with the software, libraries, and configuration files it needs, and communicates with the outside world through well-defined channels.

How do images and containers integrate into a Docker ecosystem?

Images launch containers. However, an image isn’t a container. Instead, it’s a template of what the container looks like when it’s launched. As a result, you can use the same image multiple times without having to reconfigure each container.
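To make the image/container split concrete, here is a minimal Python sketch, assuming a simplified model in which an image is an ordered stack of read-only layers (plain dicts mapping paths to contents) and each container adds its own writable layer on top. The `Image` and `Container` classes are hypothetical illustrations of copy-on-write, not Docker’s storage driver.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Read-only template: an ordered stack of layers, bottom layer first."""
    name: str
    layers: tuple  # tuple of dicts (path -> contents), treated as read-only


@dataclass
class Container:
    """Runtime instance: shares the image's layers, owns one writable layer."""
    image: Image
    writable: dict = field(default_factory=dict)

    def read(self, path):
        # Check this container's own layer first, then image layers top-down.
        if path in self.writable:
            return self.writable[path]
        for layer in reversed(self.image.layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, data):
        # Copy-on-write: changes land only in this container's layer,
        # so the shared image and sibling containers are unaffected.
        self.writable[path] = data
```

Two containers built from the same `Image` can each change `/app/config` without touching the image or each other, which is why one image can be reused many times without reconfiguration.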

A Dockerfile is what creates images. It’s a script that describes what needs to be done to build an image successfully. Like any script, it contains instructions for performing specific tasks, along with the information those tasks use.

For example, a Dockerfile can contain instructions for installing specific programs or downloading specific files from other servers.

Docker processes a Dockerfile line by line, executing each instruction in order until the image is ready to use. The important thing about Docker images is that they use a layered file system: each instruction adds a layer on top of the previous ones. Adding a new layer doesn’t rewrite everything below it. Only what changed is written, and the underlying layers stay intact and can be reused from the build cache, which speeds up builds considerably.
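The line-by-line parsing and layer reuse described above can be sketched in Python. This is a simplified model under stated assumptions: `parse_dockerfile` only handles comments, blank lines, and backslash continuations, and `layer_cache_keys` derives each step’s key from its parent key plus the instruction text. Real Docker builds also hash the contents of files used by COPY and ADD, which this sketch ignores. All function names are hypothetical.

```python
import hashlib


def parse_dockerfile(text: str) -> list[tuple[str, str]]:
    """Split a Dockerfile into ordered (INSTRUCTION, arguments) pairs.

    Simplified: handles blank lines, '#' comments and '\\' continuations only.
    """
    instructions, buffer = [], ""
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.endswith("\\"):
            # Continuation: keep accumulating until the instruction ends.
            buffer += line[:-1].rstrip() + " "
            continue
        full, buffer = buffer + line, ""
        keyword, _, args = full.partition(" ")
        instructions.append((keyword.upper(), args.strip()))
    return instructions


def layer_cache_keys(instructions):
    """Chain each step's key to its parent, like a simplified build cache."""
    keys, parent = [], ""
    for keyword, args in instructions:
        digest = hashlib.sha256(f"{parent}|{keyword} {args}".encode()).hexdigest()[:12]
        keys.append(digest)
        parent = digest
    return keys


def reusable_steps(old_keys, new_keys):
    """Count leading steps whose cached layers can be reused unchanged."""
    count = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        count += 1
    return count
```

If only the last COPY line of a three-step Dockerfile changes, `reusable_steps` reports that the first two layers can be reused and only the final one is rebuilt. Because every key depends on its parent, a change early in the file invalidates every layer after it, which is why frequently changing instructions usually go near the end.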

You can think of an image as a snapshot or template for building Docker containers. Images include everything needed to run an application: the code or build, runtimes, dependencies, and any other necessary file system objects.

Docker tools and components

The Docker ecosystem includes many tools and elements that make it so useful for standardizing software development. Here are some of its important components.

Docker client

The Docker client is a program written in Go that acts as a client for the Docker daemon (part of Docker Engine). It runs on any machine, accepts commands from the user, and sends these commands to the Docker daemon, which does the heavy lifting of building, running, and distributing Docker containers.

By default, the Docker client talks to a local Docker daemon through a “Unix socket” (/var/run/docker.sock on Linux). You can also point it at a remote daemon over TCP/IP or “SSH.”
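The Docker CLI reads the DOCKER_HOST environment variable to decide which daemon to contact, falling back to the local Unix socket when it is unset. Here is a minimal Python sketch of that decision, assuming only the unix://, tcp://, and ssh:// schemes; it deliberately ignores Docker contexts, TLS settings, and Windows named pipes. The function `resolve_docker_host` is a hypothetical illustration, not part of any Docker SDK.

```python
import os
from urllib.parse import urlparse

# Default used by the Docker CLI on Linux when DOCKER_HOST is unset.
DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock"


def resolve_docker_host(env=None):
    """Return (transport, address) for the daemon the client should contact.

    Simplified sketch: ignores Docker contexts, TLS and Windows npipe:// URLs.
    """
    env = os.environ if env is None else env
    url = urlparse(env.get("DOCKER_HOST") or DEFAULT_DOCKER_HOST)
    if url.scheme == "unix":
        return "unix", url.path          # path of the local socket file
    if url.scheme in ("tcp", "ssh"):
        return url.scheme, url.netloc    # host:port or user@host
    raise ValueError(f"unsupported DOCKER_HOST scheme: {url.scheme!r}")
```

With nothing set, the sketch resolves to the local socket. Setting DOCKER_HOST=ssh://user@build-server before running a docker command sends that command to the daemon on build-server instead.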

To interact with the daemon, you need the Docker client on your machine. On Windows and macOS, it ships with Docker Desktop (the successor to Docker for Windows/Mac and to the now-deprecated “boot2docker”). On Linux systems, you can install it with a package manager such as “apt-get” or “yum.”

The docker command uses the Docker API. Each invocation takes the form docker [option] [command] [arguments]. After the user types a command and presses Enter, the Docker client turns it into an API request and sends it to the Docker daemon. This makes the client the main entry point into the Docker environment for most users.

Docker server

A Docker server (the Docker daemon, dockerd) is a service that exposes the Docker API and manages its containers. This is where the real work happens: it’s the central place where containers are deployed and run, abstracting the running applications away from the underlying machine.

A Docker server is referred to as a “host,” and each application running on the host is called a “container.” Multiple containers can run concurrently on a single host. Hosts typically pull their images from a registry such as Docker Hub, which provides access to public images and lets users collaborate by pushing and pulling images.

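A Docker server supports the full lifecycle of developing and running an application. Its containers resemble lightweight virtual machines, but instead of running their own kernel they share the host kernel, which isolates each container from other containers, processes, and resources using features such as namespaces and control groups (cgroups).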

Docker Hub

Docker Hub is a cloud-based registry service that lets you link to code repositories, build and test images, store manually pushed images, and deploy images to your hosts. (Docker Cloud, the companion service originally used for deployment, has since been retired.)

It’s a centralized resource for container image discovery, distribution and change management, user and team collaboration, and workflow automation across the development pipeline.

Here are some of Docker Hub’s main features:

- Repositories: Push and pull container images

- Teams and organizations: Manage access to private repositories of container images

- Official images: Pull and use high-quality container images curated by Docker

- Publisher images: Get and use high-quality container images from external providers. Publishers can be official organizations or individuals.
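When you pull a short name such as nginx, Docker expands it to a full reference on Docker Hub, and official images live under the “library” namespace (so nginx becomes docker.io/library/nginx:latest). The Python sketch below approximates that normalization, assuming simple name[:tag] references. It does not handle digests (@sha256:…) or every edge case of Docker’s reference grammar, and `normalize_image_ref` is a hypothetical helper.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference to registry/namespace/name:tag.

    Simplified sketch: digests and uncommon edge cases are not handled.
    """
    parts = ref.split("/")
    first = parts[0]
    # A first component with '.' or ':', or 'localhost', names a registry host.
    if len(parts) > 1 and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, parts[1:]
    else:
        registry, path = "docker.io", parts
    # Official images on Docker Hub live in the 'library' namespace.
    if registry == "docker.io" and len(path) == 1:
        path = ["library"] + path
    # The tag, if any, follows ':' in the last path component.
    name, sep, tag = path[-1].partition(":")
    path[-1] = name
    return f"{registry}/{'/'.join(path)}:{tag if sep else 'latest'}"
```

This is why docker pull nginx, docker pull library/nginx, and docker pull docker.io/library/nginx:latest all fetch the same official image, while a reference like localhost:5000/myapp is pulled from a private registry instead.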

Docker Engine

Docker Engine is an application that manages the entire container lifecycle from creating and storing images to running a container, network attachments, and so on. It can be deployed as a container or installed directly on the host machine.

Docker Engine is a client-server application. Some of its main components are:

- Server: A long-running program, also called a daemon process (the dockerd command)

- Command-line interface (CLI) client: The Docker command acts as a CLI client in the Docker ecosystem.

- REST API: Defines the interfaces that programs use to communicate with the daemon and tell it what to do
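Because the daemon speaks HTTP, any program can use the REST API directly. Here is a minimal sketch using only Python’s standard library, assuming a Linux host where the daemon listens on /var/run/docker.sock and the caller has permission to use that socket (typically root or membership in the docker group). The class and function names are hypothetical; GET /version and GET /containers/json are Docker Engine API endpoints.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills the HTTP Host header; no DNS lookup happens.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        # Called lazily by http.client on the first request.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send GET <path> to the Docker Engine API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"{path}: HTTP {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a machine running Docker, engine_get("/version") returns the daemon’s version details, and engine_get("/containers/json") returns the running containers, the same data the docker ps command displays. This is the same channel the CLI client uses.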

Docker Engine builds and runs containers using Docker’s components and services. It’s a portable, lightweight runtime and packaging tool with infrastructure services to run applications.

What is the Global Distribution Store (GDS)?

Global Distribution Store (GDS) is a service for storing the images you want to distribute. These can be public or private and accessed by all Docker users or only specific users.

The difference between a GDS and a local store is where the images live: a local store holds the images and layers already on your machine, while a GDS holds them remotely for distribution. When you pull an image, Docker downloads only the layers that aren’t already in your local store.

If you use Docker to run containers, you can use Docker Store (now part of Docker Hub) for all the images you need. However, with Kubernetes or Rancher, you need to visit their respective websites to download the necessary images.

This is where GDS comes into the picture. It’s a single place where you can store all the images of all the different technologies (Docker, Kubernetes, Rancher, etc.). A user can retrieve these images from a GDS instead of going to different websites for each technology.

Docker and service discovery

Service discovery is a pattern that lets applications locate the services they depend on, which supports load balancing and failover for middleware applications. It typically relies on a service registry, a database of available service instances, which is often coupled with other functions such as monitoring and administration.

With service discovery, each microservice instance registers itself when it joins the cluster and deregisters when it leaves. The service registry identifies each service by a name called its “logical name,” and each logical name can map to multiple physical service instances.
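The register, deregister, and lookup cycle described above can be sketched as a small in-memory registry in Python. This assumes a single process and round-robin selection among instances; real registries such as Consul or etcd add health checks, replication, and leases. `ServiceRegistry` is a hypothetical class for illustration.

```python
import itertools
from collections import defaultdict


class ServiceRegistry:
    """In-memory registry mapping logical names to live instance addresses."""

    def __init__(self):
        self._instances = defaultdict(list)  # logical name -> [address, ...]
        self._cursors = {}                   # logical name -> round-robin counter

    def register(self, name, address):
        # An instance announces itself when it joins the cluster.
        if address not in self._instances[name]:
            self._instances[name].append(address)

    def deregister(self, name, address):
        # An instance removes itself when it leaves the cluster.
        if address in self._instances.get(name, []):
            self._instances[name].remove(address)

    def lookup(self, name):
        """Return one instance for `name`, rotating through them (round-robin)."""
        instances = self._instances.get(name)
        if not instances:
            raise LookupError(f"no instances registered for {name!r}")
        counter = self._cursors.setdefault(name, itertools.count())
        return instances[next(counter) % len(instances)]
```

Callers only ever ask for the logical name (for example, "orders"); spreading requests across the physical instances behind that name gives simple load balancing, and deregistering a failed instance gives basic failover.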

Service discovery is one of the key tenets of a microservice-based architecture. You can achieve it in many ways, including:

- Using a service registry to register services and find relevant services through querying

- Leveraging multicast DNS (mDNS) to send service information to different network nodes

- Using CNAME records in the Domain Name System (DNS)

- Retrieving information from a Configuration Management Database (CMDB)

Service discovery tools and the Docker ecosystem

Service discovery tools are extremely important in the Docker ecosystem. They automatically detect network nodes and configure routing between them. The three most popular orchestration platforms with built-in service discovery are:

- Docker Swarm

- Kubernetes

- Mesos

These tools automatically discover the services and containers deployed on the network by periodically polling the registries. The registrations, in this case, are dynamic, which means the tools update their data whenever the services or containers on the network change. They also automatically update the load balancers with new information.

You can find many service discovery tools on the market. Some of the popular ones are:

- Consul: Consul is a distributed, highly available, and data center-ready solution for connecting and configuring applications across dynamic, distributed infrastructures.

- etcd: A distributed key-value store, maintained by the etcd community, for shared configuration and service discovery.

- ZooKeeper: ZooKeeper is distributed configuration software for managing large numbers of hosts.

Networking tools backed by Docker

Docker tools connect containers to each other and to the outside world. Docker networking is built on libnetwork, a native Go implementation for connecting containers.

Libnetwork has a client/server architecture, with the daemon running as a server and all other components as clients. The client libraries provide the API to manage and control the network.

These tools help manage the network connections on a Docker host. Some network tools Docker supports are:

- docker0: The docker0 bridge is the default bridge network on a Docker host. Containers started without an explicitly specified network connect to this bridge. Containers on other hosts cannot reach a container on docker0 directly; outside traffic gets in only through ports the container publishes on its host.

- brctl: Part of the Linux bridge utilities (bridge-utils), an optional tool for creating and managing Linux virtual bridges. You can use it to inspect and manage the bridges that back container networks.
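To picture how containers on a bridge such as docker0 get their addresses, here is a toy address allocator in Python. It assumes the common default bridge subnet 172.17.0.0/16 with the bridge itself at 172.17.0.1 (the docker network inspect bridge command shows the actual values on your host). `BridgeIPAM` is a hypothetical illustration, not Docker’s IP address management code.

```python
import ipaddress


class BridgeIPAM:
    """Hand out container addresses from a bridge subnet (toy model)."""

    def __init__(self, subnet="172.17.0.0/16"):
        self.network = ipaddress.ip_network(subnet)
        hosts = iter(self.network.hosts())  # excludes network/broadcast addresses
        self.gateway = next(hosts)          # first usable address: the bridge itself
        self._free = hosts                  # remaining addresses, in order
        self._released = []                 # addresses freed by removed containers
        self.assigned = {}                  # container name -> address

    def allocate(self, container):
        if container in self.assigned:
            return self.assigned[container]
        if self._released:
            ip = self._released.pop(0)      # reuse a freed address first
        else:
            ip = next(self._free, None)
            if ip is None:
                raise RuntimeError(f"subnet {self.network} is exhausted")
        self.assigned[container] = ip
        return ip

    def release(self, container):
        self._released.append(self.assigned.pop(container))
```

Every container attached to the same bridge gets an address in the same subnet and uses the bridge as its gateway, which is why containers on one host can reach each other over docker0.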

Scheduling, cluster management, and orchestration

Scheduling decides which node each container runs on. The most basic scheduling algorithm simply assigns the next container in the queue to the next available node. This approach works well in many situations, but some workloads call for more advanced algorithms.

For example, if a web application has a front-end container and a back-end container running on different nodes, the front-end container must reach the back-end container through its internal network address. More complex scheduling algorithms can take these network dependencies into account when deciding where to place containers.
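Here is a minimal Python sketch of the difference, assuming each node has a single CPU budget and each container declares a CPU request. Without dependencies, it is plain first-fit placement; with a hypothetical `depends_on` map, it first tries the node that already hosts the container’s dependency. The names `Node` and `schedule` are illustrative and not taken from any real scheduler.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu: float
    containers: list = field(default_factory=list)


def schedule(containers, nodes, depends_on=None):
    """Place (name, cpu) containers in order; return {container: node name}.

    depends_on: optional map of container -> container it talks to; the
    dependency's node is tried first, then all nodes in order (first fit).
    """
    depends_on = depends_on or {}
    by_name = {n.name: n for n in nodes}
    placement = {}
    for name, cpu in containers:
        dep = depends_on.get(name)
        preferred = by_name[placement[dep]] if dep in placement else None
        ordered = ([preferred] if preferred else []) + [n for n in nodes if n is not preferred]
        target = next((n for n in ordered if n.free_cpu >= cpu), None)
        if target is None:
            raise RuntimeError(f"no node has {cpu} CPU free for {name}")
        target.free_cpu -= cpu
        target.containers.append(name)
        placement[name] = target.name
    return placement
```

With two 2-CPU nodes and the containers batch (1.5), api (1.0), and frontend (0.5), first fit puts frontend on node-a, away from the back end. Declaring that frontend depends on api places it next to api on node-b, so their traffic stays on one host.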

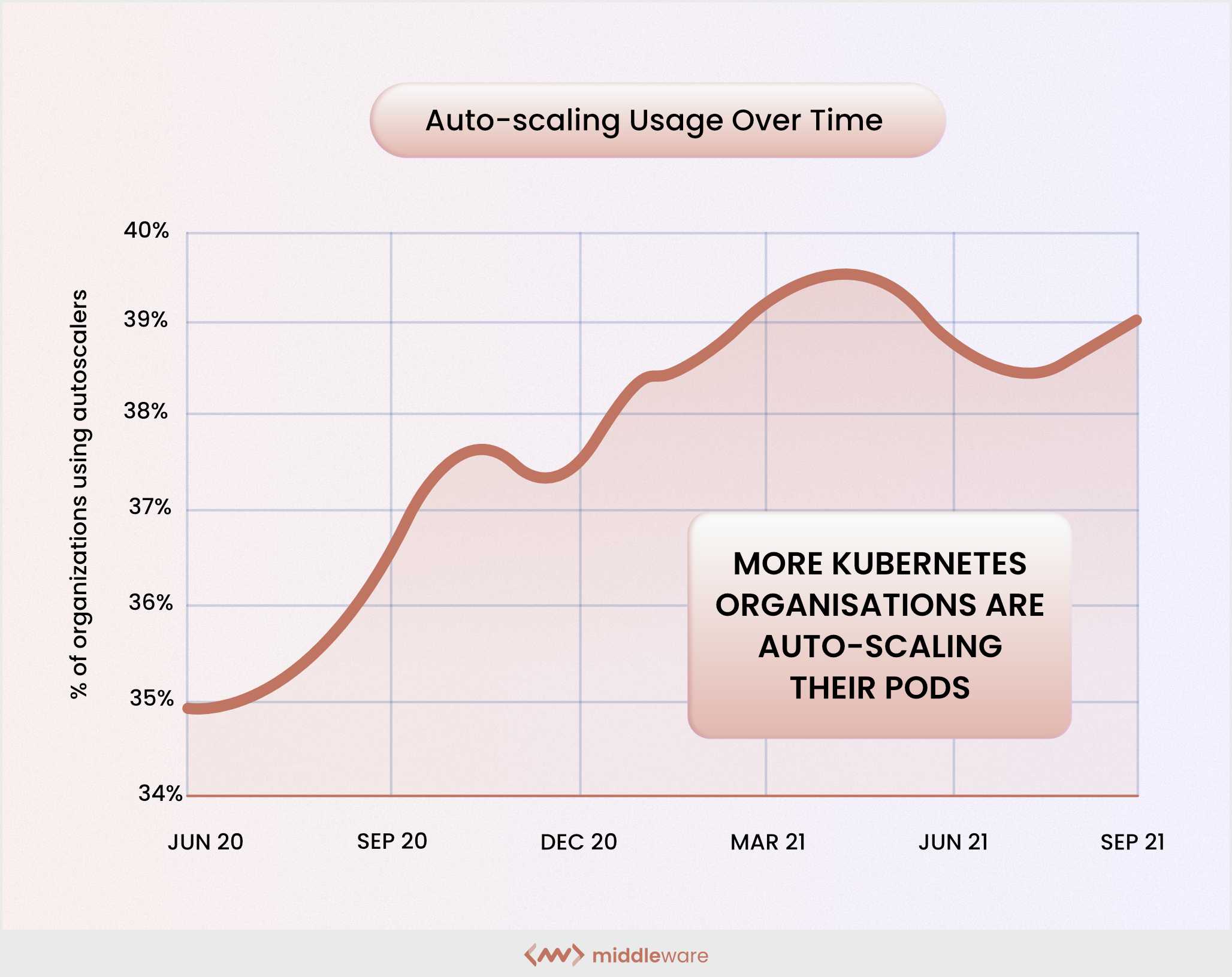

Cluster management refers to how individual nodes within a cluster are managed and communicate with each other. It’s an important process because it allows you to autoscale your system as demand changes.

For example, if demand doubles on a two-node system, you can add more nodes without shutting the system down, keeping it highly available throughout.
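The arithmetic behind that example can be sketched in Python, assuming (hypothetically) that every node handles a fixed number of requests per second. Real autoscalers work from measured metrics such as CPU usage and add safeguards against flapping, so treat this only as the core calculation.

```python
import math


def nodes_needed(demand_rps: float, capacity_per_node_rps: float, min_nodes: int = 1) -> int:
    """Smallest node count whose combined capacity covers the demand."""
    if capacity_per_node_rps <= 0:
        raise ValueError("capacity per node must be positive")
    return max(min_nodes, math.ceil(demand_rps / capacity_per_node_rps))


def scale_step(current_nodes: int, demand_rps: float, capacity_per_node_rps: float) -> int:
    """Nodes to add (positive) or remove (negative) to match the demand."""
    return nodes_needed(demand_rps, capacity_per_node_rps) - current_nodes
```

If two nodes of 1,000 requests per second each are fully used and demand doubles to 4,000, scale_step(2, 4000, 1000) returns 2: the cluster adds two nodes while the existing ones keep serving traffic.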

Container orchestration is an automated process for provisioning, configuring, deploying, and managing software containers in container clusters. It monitors and manages container scheduling, clustering, and high availability while providing failover and scaling.
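Orchestrators such as Kubernetes and Docker Swarm work declaratively: you state the desired number of replicas for each service, and a control loop repeatedly compares that desired state with what is actually running and acts on the difference. Here is a minimal Python sketch of one pass of such a loop, assuming services are identified by name and only replica counts matter. `reconcile` is a hypothetical function, not an orchestrator API.

```python
def reconcile(desired, running):
    """Compare desired and running replica counts and list corrective actions.

    desired: {service: replicas wanted}; running: {service: replicas running}.
    Returns (action, service, count) tuples, sorted by service name.
    """
    actions = []
    for service in sorted(set(desired) | set(running)):
        want, have = desired.get(service, 0), running.get(service, 0)
        if want > have:
            actions.append(("start", service, want - have))
        elif have > want:
            actions.append(("stop", service, have - want))
    return actions
```

Failover falls out of the same loop: if a node dies and takes two web replicas with it, the next pass sees fewer running replicas than desired and starts replacements elsewhere. Scaling is just a change to the desired count.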

Benefits of using Docker

Docker is inexpensive, easy to deploy, and offers greater development portability and flexibility. Here are some major benefits of using Docker:

- As an open-source tool, Docker automates deploying applications in software containers.

- It allows developers to package applications with all required parts like libraries and other dependencies and deploy them as one package.

- Docker helps developers create a container for each application and focus more on building it without having to worry about the operating system.

- Developers can easily move their software from one host to another using containers to deploy software. Containers are portable and self-contained.

- Containers are a standard way to deliver microservices in the cloud. Each microservice is deployed in its own container, and containers communicate over virtual networks when they need to. Because each microservice container is isolated from the others, problems are easier to pinpoint and debug.

- Containers are easy to install and run because the application and its runtime environment are already packaged in the image, so nothing needs to be installed separately. All you need is a container image and a container platform to run it, such as Docker Engine or Kubernetes.

Is Docker the right choice for you?

Docker is a promising platform that could rock the IT world and make life easier for many. If you’re considering adopting Docker, study the basics to understand its ecosystem. The more you learn, the better your decisions.

Opt for a practical and viable solution like Middleware to understand and implement Docker on the go.