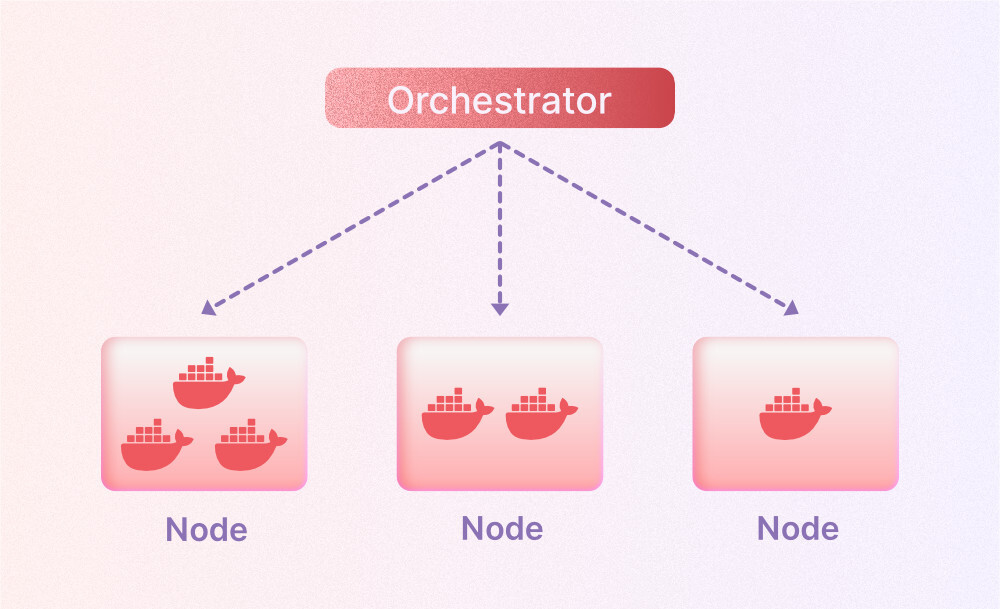

Container orchestration is a software solution that helps you deploy, scale and manage your container infrastructure. It enables you to easily deploy applications across multiple containers by solving the challenges of managing containers individually.

In a microservice-based architecture, an orchestrator helps automate your application’s lifecycle by providing a single interface for creating and managing containers.

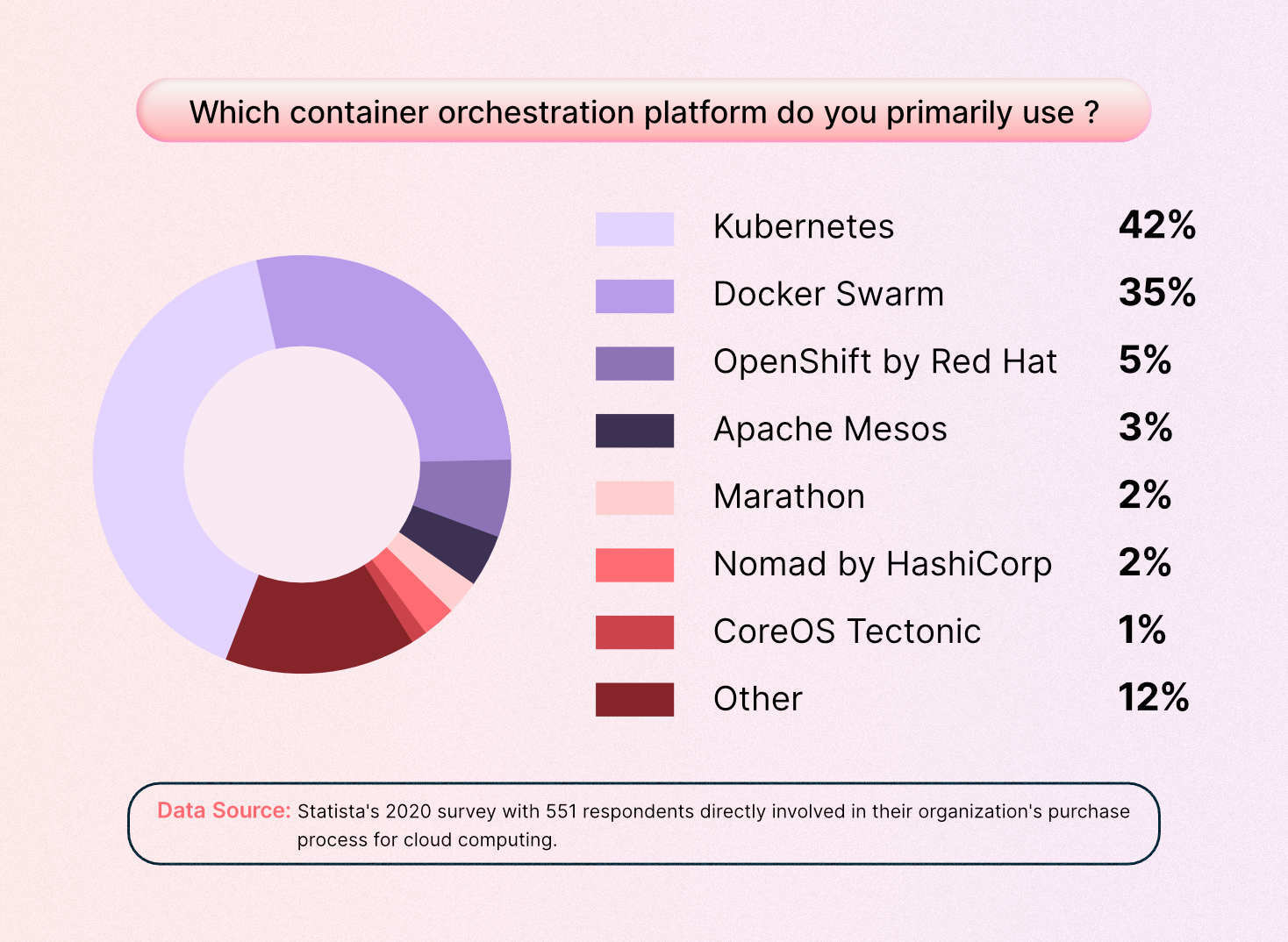

Container orchestration is still a young technology with many challenges to overcome. As a result, there are many competing container orchestrators on the market today, each with its strengths and weaknesses. Kubernetes is by far the most popular, but it doesn’t come without its challenges.

But first, let’s understand what container orchestration is, why your company needs it, the benefits and challenges of container orchestration, and much more!

What is Container orchestration?

Container orchestration is the process of managing many containers so that they stay running, healthy, and appropriately resourced. This is done with container orchestration tools: software that automatically manages and monitors a set of containers on a single machine or across multiple machines.

Container orchestration tools provide an easy way to create, update, and remove applications without worrying about the underlying infrastructure. This makes them ideal for DevOps teams working on projects that involve large numbers of microservices (small, independently deployable pieces of software). The orchestrator then handles the concerns these services share, including deployment, storage, networking, and security.

Why do you need Container orchestration?

Here are several of the most common reasons why you would need container orchestration –

- To create and manage containers: A container is a running instance of a prebuilt image (for example, a Docker image) that packages your application with all the dependencies it needs. The same image can be deployed on any host machine or cloud platform that has a compatible container runtime, without significant changes to the codebase or configuration files.

- To scale applications: Orchestrators give you fine-grained control over how many instances of your application run at any given time, based on their resource requirements (such as memory or CPU) and the load they are expected to handle.

- To manage container lifecycle: Using a container orchestration tool such as Kubernetes (K8s), Docker Swarm Mode, or Apache Mesos, you can automate the management of multiple services within one organization or across several.

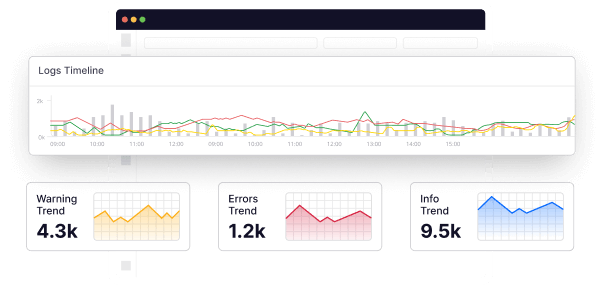

- Maintain visibility into container health: Kubernetes monitoring platforms typically provide an overview dashboard that gives real-time visibility into each service’s status.

- Automate deployment: Automation tools like Jenkins let developers push changes from anywhere to a remote server, where Jenkins builds and tests them. It then reports any failures, such as a break caused by another developer’s change that was merged before the new build was submitted.

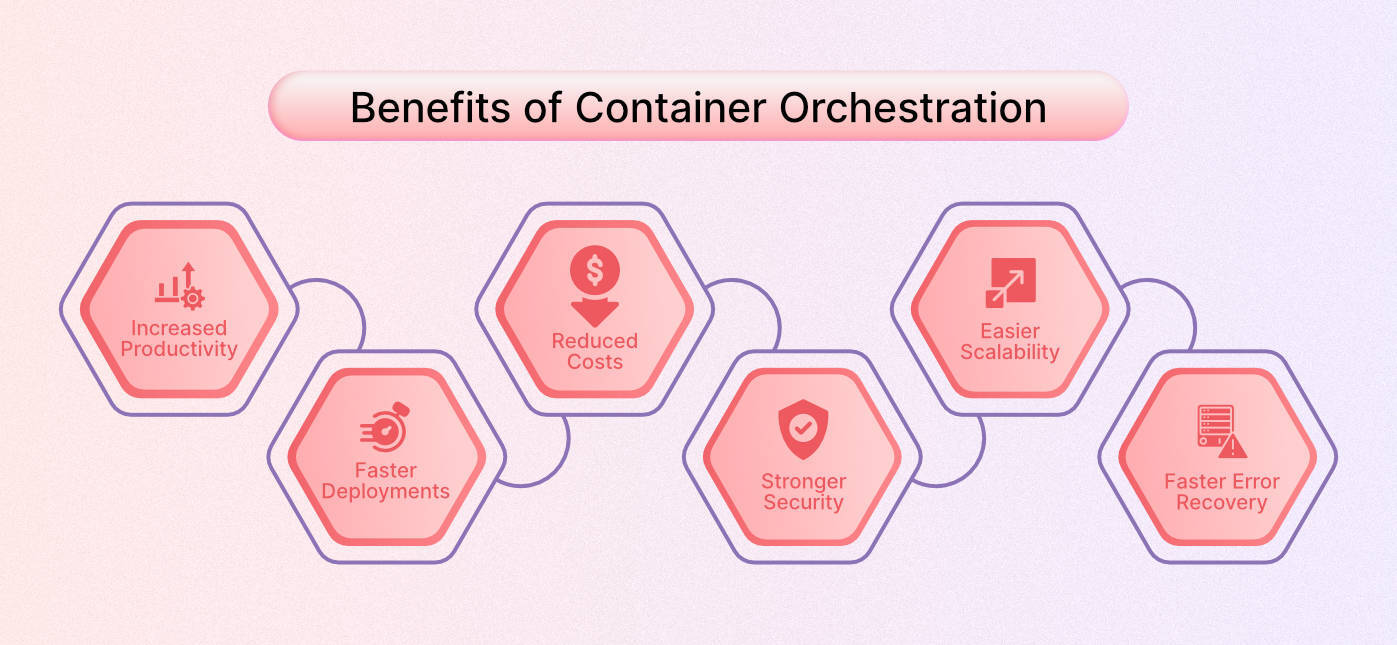

What are the benefits of Container orchestration?

There are many benefits to container orchestration, including:

- Improved scalability – Your application becomes more scalable when you use container orchestration. The number of containers running simultaneously is limited only by the resources available on each machine, and the orchestrator can spread containers across machines. As a result, your applications will scale better than they could without orchestration tools.

- Improved security – Container orchestration tools help improve security because they let you manage security policies consistently across multiple platforms (e.g., Linux vs. Windows). They also provide visibility across all components in an application stack, which makes it harder for attackers to hide behind individual components and evade detection.

- Improved portability – Containers allow developers to move their applications from one cloud provider or device type to another with little effort. Because a container packages the application together with its dependencies, moving between environments usually requires no code changes. This also helps newcomers: with the environment already defined in the image, someone new to the project can run it without configuring everything by hand.

- Reduced cost – Using containers instead of virtual machines can reduce infrastructure costs because containers share the host’s kernel, use fewer resources, and carry less overhead.

- Faster error recovery – One of the biggest benefits of using container orchestration is that it can detect issues like infrastructure failures and automatically work around them. This helps in maintaining high availability and increasing the uptime of your application.
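To make the error-recovery idea concrete, here is a minimal sketch of how a health probe with a failure threshold might trigger a restart. It is an illustration only: the `ProbeState` class, the `simulate` helper, and the default threshold of 3 are assumptions made for this example, not the API of any particular orchestrator.

```python
from dataclasses import dataclass


@dataclass
class ProbeState:
    """Tracks consecutive failed health checks for one container (hypothetical model)."""
    failure_threshold: int = 3   # assumed value for this sketch
    consecutive_failures: int = 0
    restarts: int = 0

    def record(self, healthy: bool) -> bool:
        """Record one probe result; return True if a restart was triggered."""
        if healthy:
            # Any success resets the failure streak.
            self.consecutive_failures = 0
            return False
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            # Threshold reached: count a restart and start a fresh streak.
            self.restarts += 1
            self.consecutive_failures = 0
            return True
        return False


def simulate(results: list[bool], threshold: int = 3) -> int:
    """Feed a sequence of probe results and return how many restarts occurred."""
    state = ProbeState(failure_threshold=threshold)
    for healthy in results:
        state.record(healthy)
    return state.restarts
```

Requiring several consecutive failures before acting is a common design choice: it keeps a single slow response from causing an unnecessary restart.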

How does Container orchestration work?

To understand how container orchestration works from the perspective of an operations team or SREs, let’s take the example of a simple application with a frontend, a backend, and a database, where each of these parts is made up of multiple services.

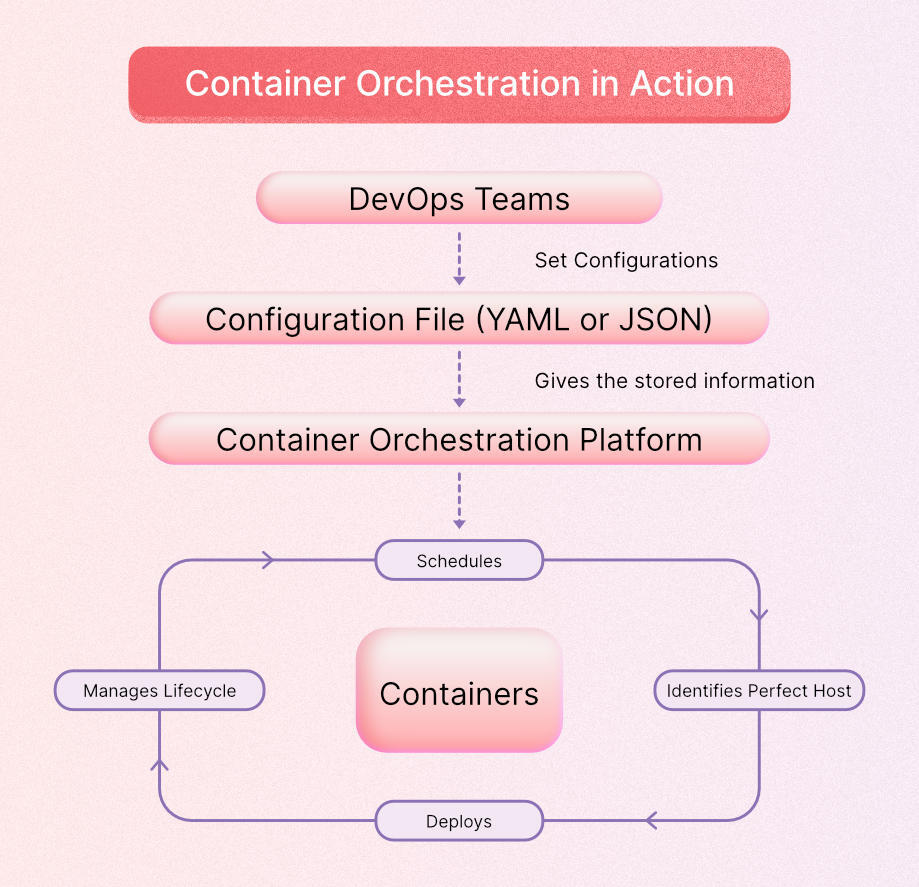

Container orchestration works in two steps. First, DevOps teams or SREs define the basic configuration. Then, based on this configuration, container orchestration tools like Kubernetes and Docker Swarm create and manage containers across a cluster of machines and link them together.

The linked containers together form an application, and the cluster of machines they run on forms the infrastructure.

Going back to our basic application: without a container orchestration platform, you would have to deploy each service manually and manage load balancing and service discovery for each one yourself.

With a Container orchestration platform, most of your work is automated because it takes care of:

1. Deploying:

The container orchestration platform ensures that your application and its services are deployed effectively and efficiently.
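One common way a platform deploys a new version without taking the whole service down is a rolling update, where instances are replaced a few at a time. The sketch below is a simplified illustration of that idea; the function name `rolling_update` and the `batch_size` parameter are assumptions for this example, and real platforms add health checks and surge capacity between steps.

```python
def rolling_update(pods: list[str], new_version: str, batch_size: int = 1) -> list[list[str]]:
    """Replace pod versions batch by batch; return the pod list after each step."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    current = list(pods)  # copy so the caller's list is not modified
    history = []
    for start in range(0, len(current), batch_size):
        end = min(start + batch_size, len(current))
        for i in range(start, end):
            current[i] = new_version
        # Snapshot the fleet after this batch so each step can be inspected.
        history.append(list(current))
    return history


for step in rolling_update(["v1", "v1", "v1"], "v2"):
    print(step)
```

Because only one batch changes at a time, the remaining instances keep serving traffic while the update progresses.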

2. Scheduling:

Container orchestration platforms also decide which machine each service runs on, placing services so that the available computing resources are used as fully as possible.
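As a rough illustration of scheduling, the sketch below places each workload on the node where it fits most tightly (a "best fit" strategy). This is an assumption chosen for simplicity, not the algorithm of any specific orchestrator; the `Node`, `PodRequest`, and `schedule` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free: float   # CPU cores still unallocated
    mem_free: int     # memory in MiB still unallocated
    pods: list[str] = field(default_factory=list)


@dataclass
class PodRequest:
    name: str
    cpu: float
    mem: int


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Place the pod on the feasible node that would have the least CPU left over."""
    # Step 1: filter out nodes that cannot hold the pod at all.
    feasible = [n for n in nodes if n.cpu_free >= pod.cpu and n.mem_free >= pod.mem]
    if not feasible:
        return None  # nothing fits; the pod would wait until capacity appears
    # Step 2: score the remaining nodes and pick the tightest fit.
    target = min(feasible, key=lambda n: (n.cpu_free - pod.cpu, n.mem_free - pod.mem))
    target.cpu_free -= pod.cpu
    target.mem_free -= pod.mem
    target.pods.append(pod.name)
    return target.name
```

Packing workloads tightly leaves larger nodes free for larger workloads, which is one way to keep utilization high. A spread-out strategy would instead favor resilience over density.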

3. Scaling:

As demand changes, the container orchestration platform adjusts the number of running instances of each service so that your compute resources are used effectively. At the same time, it makes sure that all pods are up and running; if a pod or node fails, it automatically brings up a new pod in place of the failed one.
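The behavior of replacing failed pods can be pictured as a reconciliation loop that compares the desired number of replicas with what is actually healthy. The following is a minimal sketch under that assumption; the `reconcile` function and the `web-N` naming scheme are hypothetical.

```python
import itertools


def reconcile(desired_replicas: int, pods: dict[str, bool], prefix: str = "web") -> dict[str, bool]:
    """Return a new pod map with failed pods dropped and replacements added.

    `pods` maps pod name -> healthy flag.
    """
    # Keep only the pods that are currently healthy.
    healthy = {name: True for name, ok in pods.items() if ok}
    # Scale down if there are more healthy pods than desired.
    while len(healthy) > desired_replicas:
        healthy.popitem()
    # Replace failures (or scale up) with freshly named pods.
    counter = itertools.count(1)
    while len(healthy) < desired_replicas:
        candidate = f"{prefix}-{next(counter)}"
        # Skip names already in use, including those of failed pods.
        if candidate not in healthy and candidate not in pods:
            healthy[candidate] = True
    return healthy


print(reconcile(3, {"web-1": True, "web-2": False, "web-3": True}))
```

Running this function repeatedly is harmless: once the healthy count matches the desired count, it returns the same set of pods.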

4. Networking:

With a Container orchestration platform in place, you don’t have to manage load balancing and service discovery of each service manually; the platform does it for you.

Additionally, using Container orchestration platforms gives you a single point of access for each service, making it easy to manage the entire infrastructure.
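To illustrate what service discovery and load balancing involve, here is a small sketch of a registry that maps a stable service name to a changing set of backends and hands them out in round-robin order. The `ServiceRegistry` class and the addresses are made up for this example and do not represent any platform's actual implementation.

```python
class ServiceRegistry:
    """Maps a stable service name to the changing set of backend addresses."""

    def __init__(self) -> None:
        self._backends: dict[str, list[str]] = {}
        self._next: dict[str, int] = {}

    def register(self, service: str, address: str) -> None:
        """Add a backend, for example when a new container starts."""
        self._backends.setdefault(service, []).append(address)

    def deregister(self, service: str, address: str) -> None:
        """Remove a backend, for example when a container fails or stops."""
        backends = self._backends.get(service, [])
        if address in backends:
            backends.remove(address)

    def resolve(self, service: str) -> str:
        """Return the next backend for the service in round-robin order."""
        backends = self._backends.get(service)
        if not backends:
            raise LookupError(f"no backends registered for {service!r}")
        index = self._next.get(service, 0) % len(backends)
        self._next[service] = index + 1
        return backends[index]
```

Callers only ever use the service name, so containers can come and go without clients needing to know individual addresses.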

5. Insights:

One of the underrated ways a container orchestration platform helps SREs and operations teams is by providing multiple pluggable integration points. Platforms like Middleware, or open-source technologies like Istio, can use these to collect logs, analytics, and other vital details.

What are Container orchestration tools – Kubernetes, Docker

One of the most popular ways to deploy Docker containers is with Kubernetes. Kubernetes is a container orchestration tool that manages resources such as CPU, memory, and network bandwidth across multiple systems running on different machines in a cluster.

Kubernetes and Mesos run on clusters of commodity servers without requiring special hardware to be tuned to a specific application. This makes them suitable for scaling up with ease. In addition, both Kubernetes and Mesos have their unique benefits:

What is Kubernetes?

Kubernetes is an open-source container orchestration system that allows you to manage your containers across multiple hosts in a cluster. It is written in Go and was originally developed by Google engineers, who open-sourced it in 2014; version 1.0 followed in 2015.

Main components of Kubernetes:

- Worker node: A worker node is a node that runs the application in a cluster and reports to a control plane.

- Control plane: It manages the worker nodes and the pods in the cluster.

- Kubelet: The kubelet is the primary “node agent” that runs on each node. It can register the node with the apiserver using: the hostname, a flag to override the hostname or a specific logic for a cloud provider.

- Pod: Pods are the smallest and most basic deployable objects in Kubernetes. A Pod represents a single instance of a running process in a cluster.

What is Apache Mesos?

Mesos is an open-source distributed systems platform that enables resource sharing between applications running on different machines.

It pools the CPU, memory, and other resources of a cluster and shares them among applications, which works well when workloads have uneven needs because some parts of an application require more processing power than others.

This gives developers more flexibility when building applications that need to scale up or down, and it improves overall performance through better use of resources across the cluster.
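Resource sharing in Mesos is usually described in terms of resource offers: the cluster offers available resources to registered frameworks, and each framework decides whether to accept an offer. The sketch below is a heavily simplified model of that idea. The `Offer` and `Framework` classes and the first-come ordering are assumptions for illustration; real Mesos applies an allocation policy to decide which framework receives each offer.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    agent: str    # machine the resources belong to
    cpus: float
    mem: int      # MiB


class Framework:
    """A hypothetical framework that accepts offers large enough for its next task."""

    def __init__(self, name: str, task_cpus: float, task_mem: int, tasks_wanted: int) -> None:
        self.name = name
        self.task_cpus = task_cpus
        self.task_mem = task_mem
        self.tasks_wanted = tasks_wanted
        self.launched: list[str] = []

    def consider(self, offer: Offer) -> bool:
        """Accept the offer if a task is pending and the offer is big enough."""
        if self.tasks_wanted == 0:
            return False
        if offer.cpus < self.task_cpus or offer.mem < self.task_mem:
            return False
        self.tasks_wanted -= 1
        self.launched.append(offer.agent)
        return True


def offer_round(offers: list[Offer], frameworks: list[Framework]) -> dict[str, str]:
    """Present each offer to frameworks in order; the first to accept gets it."""
    accepted: dict[str, str] = {}
    for offer in offers:
        for fw in frameworks:
            if fw.consider(offer):
                accepted[offer.agent] = fw.name
                break
    return accepted
```

The key design point is that placement decisions are split: the platform decides what to offer, and each framework decides what to run.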

Docker containers are often compared to lightweight virtual machines, but they are not VMs: they are isolated processes that share the host operating system’s (OS) kernel. Because each container carries its own dependencies, you can develop applications quickly without installing additional software on the host.

Kubernetes uses containers as building blocks for applications by grouping them into logical units called pods. A pod consists of one or more containers. The container images it runs can be built from scratch with the docker build command or pulled from a container registry such as Docker Hub.
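As an illustration of a pod that groups more than one container, the sketch below builds a minimal Pod manifest as a Python dictionary and prints it as JSON. The helper name `pod_manifest`, the pod name, and the image tags are example values chosen for this sketch.

```python
import json


def pod_manifest(name: str, containers: dict[str, str]) -> dict:
    """Build a Pod manifest; `containers` maps container name -> image reference."""
    if not containers:
        raise ValueError("a pod needs at least one container")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {"name": cname, "image": image}
                for cname, image in containers.items()
            ]
        },
    }


# Example values only: one application container plus a helper container.
manifest = pod_manifest("web", {"app": "nginx:1.25", "log-shipper": "busybox:1.36"})
print(json.dumps(manifest, indent=2))
```

Containers in the same pod are scheduled together on the same node, which is why closely coupled helpers are often placed alongside the main application container.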

Kubernetes is a powerful container orchestration system that allows you to specify how applications should be deployed, scaled, and managed. It’s more focused on applications than Mesos, which can also manage clusters but focuses more on data centers.

Kubernetes has several features to support its goal of simplifying the management of containers across multiple clouds:

- Providing an API for creating and managing resources (e.g., pods) and the images they run

- Specifying pod lifecycle rules

- Running pods in any number of different environments (e.g., testing)

- Monitoring resource usage

- Interacting with tools such as the kubectl CLI client or kops (for provisioning and managing clusters)
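To show what working with the API can look like, the sketch below generates a minimal Deployment manifest, a resource that asks Kubernetes to keep a given number of pod replicas running. The helper name `deployment_manifest` and the example values are assumptions for this illustration.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Deployment manifest for a single-container application."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Example values only.
deployment = deployment_manifest("frontend", "nginx:1.25", replicas=3)
print(json.dumps(deployment, indent=2))
```

If the printed JSON is saved to a file such as `deployment.json`, it can be submitted with `kubectl apply -f deployment.json`, since kubectl accepts JSON as well as YAML manifests.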

Note that DC/OS is not part of the Kubernetes API. It is a separate platform built on Apache Mesos that provides its own scheduler and can run schedulers from other projects as well, rather than relying solely on Docker images and tooling, which do not always match up exactly across versions.


Kubernetes and Tectonic

Tectonic is a commercial distribution of Kubernetes, the open-source container orchestration platform, originally developed by CoreOS. Tectonic aims to provide tools to manage and orchestrate containers across all major cloud providers, including Google Cloud Platform (GCP), AWS, and Azure.

It can be used as-is or as part of an integrated solution with other tools such as Terraform or Jenkins CI/CD so you can automate your entire infrastructure management process from DevOps automation to continuous deployment into production environments.

Tectonic comes preconfigured with enterprise-level support such as 24/7 phone support, email alerts on issues affecting your clusters, and integration with third-party SaaS services like Traefik Load Balancer Autoscaler.

This allows you to manage traffic across multiple applications running in different regions using one load balancer configuration.

Not everyone has moved over to Kubernetes and Mesos yet

Both Kubernetes and Mesos have very large user bases, but not everyone has moved over to them yet. Many teams still use other container orchestration systems, such as Docker Swarm.

Mesos predates Kubernetes, so it has a longer track record in production. It also offers a wider range of features out of the box than Docker Swarm.

Challenges of Container orchestration

Container orchestration is a new technology that has been around for only a few years. It enables developers to manage multiple applications across different servers, allowing them to run their apps in separate containers.

This makes it easier to scale up and down as needed, but it also creates some challenges for enterprise organizations that want to use containers but don’t have the right infrastructure in place yet.

Let’s explore some of the problems with container orchestration so you can make an informed decision about whether or not it’s right for your business today!

The main challenges of container orchestration include:

- Complexity: Orchestration adds many moving parts (clusters, networking, storage, configuration), and that complexity grows as more of your workloads come to depend on it.

- Security Challenges: Many companies are still figuring out how to secure their containers, and it’s not an easy task!

- Scalability Challenges: An orchestration platform such as Kubernetes or Mesos consumes resources of its own, and that footprint cannot always be scaled up or down quickly as your needs change. This can be a problem if you want a quick solution but don’t want to pay a premium for autoscaling and other advanced features. However, several free options provide basic functionality without sacrificing too much performance or reliability.

We still have a lot of work to do with container orchestration before it can meet the enterprise’s needs –

We have seen some companies try to use containers to solve all their problems, but this approach has been unsuccessful because they don’t understand how containers work or how they fit into the overall architecture.

Many people still rely on other models, such as virtual machines (VMs) or serverless functions (FaaS), as part of their infrastructure instead of moving towards containerized environments, where everything is defined by software artifacts rather than by the hardware hosts it runs on.

There are many competing container orchestrators on the market today, each with its strengths and weaknesses –

Mesos is a popular choice for running containers in large production environments. Still, it does not run containers on its own: you need a framework such as Marathon on top of it, which adds another component to set up and manage.

Rancher is a good option if you want to manage Kubernetes clusters across your existing infrastructure. CRI-O, by contrast, is a container runtime for Kubernetes rather than an orchestrator, so it complements Kubernetes instead of replacing it.

Docker Swarm is built into Docker Engine, so teams that already use Docker can build distributed applications quickly without learning a separate platform, although it offers less control over deployment and scaling than Kubernetes does.

How to get started with Container orchestration?

To get started with container orchestration, you need to:

- Get a container orchestration tool. There are many choices at this step, depending on your needs and budget. You can use Kubernetes, Docker Swarm, or a vendor-packaged option such as the Kubernetes offering in VMware Cloud Foundation (VCF).

- Install the container orchestration tool in your cluster. This typically means installing it on each server that will run applications within the cluster. Some tools also support nodes running different versions during an upgrade, which lets you upgrade a cluster gradually.

- Set up your cluster to run multiple apps in various environments and zones (if applicable). Some tools make setting up these environments very easy; others require more effort. Either approach works as long as everyone on the team understands the setup before starting.

Scaling Container orchestration

Containers are the future of application architecture, and adopting them is not just a matter of replacing one monolithic app with another. Microservices in containers scale easily because their container orchestrator can deploy and manage each one independently (and in isolation).
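As a worked example of how an orchestrator might decide how far to scale a service, the sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the replica bounds, and the sample numbers are assumptions for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Compute ceil(current_replicas * current_metric / target_metric), clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the replica count does not flap.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, 90, 60))
```

The bounds matter in practice: the upper limit protects your budget during traffic spikes, and the lower limit keeps a minimum level of availability when load drops.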

Invest in Infrastructure that can scale

If your team is new to containers, now is the time to invest in an infrastructure that can scale.

A scalable platform will help you avoid issues down the line, since scaling up will be easier. Choosing a managed platform also means you don’t have to worry about maintaining the infrastructure yourself and can focus on other aspects of running your organization.

Conclusion

Container orchestration is a crucial technology for organizations that want to improve efficiency and reduce costs, but getting started isn’t easy.

There are a lot of moving parts involved, and there are some serious challenges to overcome. But the payoff can be huge if you choose wisely when selecting an orchestration tool and have the patience to learn how it works before making any changes.

You can integrate Middleware with any (open source & paid) container orchestration tool and use its Infrastructure monitoring capabilities to give you complete analytics about your application’s health and status.

Sign up on our platform to see how we can help you manage your containers more effectively.