Kubernetes, or K8s, is an open-source container orchestration system for automating the deployment, scaling, and management of containerized applications. Originally designed by Google and now maintained by the Cloud Native Computing Foundation, Kubernetes is the solution of choice for many developers.

While Kubernetes is an ideal solution to most microservice application delivery problems, it presents many user challenges and obstacles.

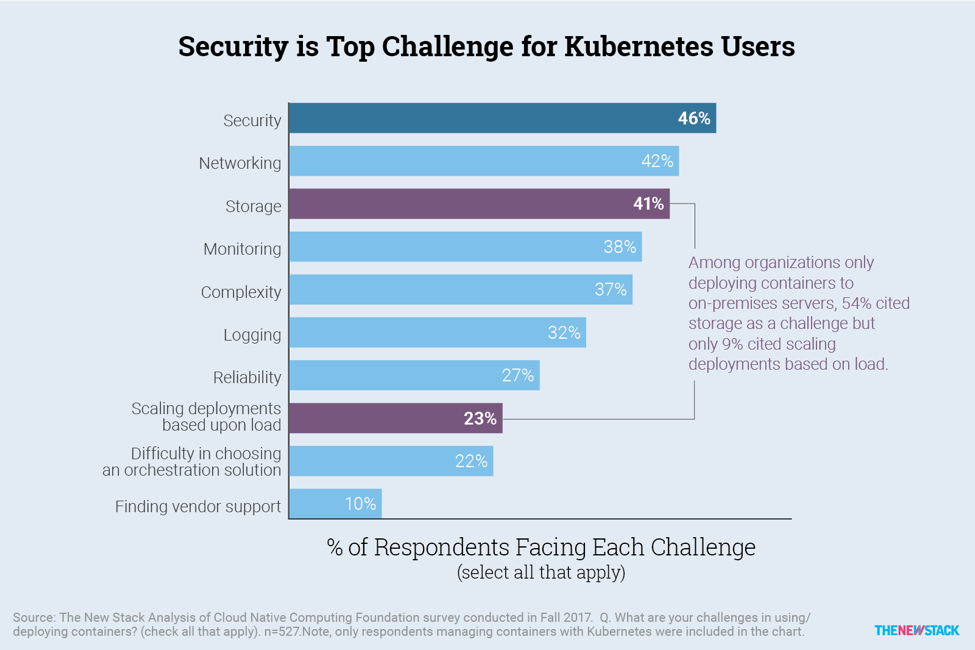

Kubernetes challenges generally arise in security, networking, deployment, scaling, and vendor support. The specific challenges vary with each user's usage and functional patterns, but most hurdles fall into these categories.

While some of these challenges are typical of all technology platforms, others, such as setup, management, and monitoring, are specific to Kubernetes.

Selecting an appropriate container orchestration solution is therefore critical, and it should come only after you have weighed all the relevant criteria. Most importantly, adopting Kubernetes often requires changes in the roles and responsibilities of multiple departments within the IT organization, plus an organization-wide familiarization phase to cope with the learning curve.

These challenges also affect cloud and on-premises systems differently. Organizations that run only on-premises servers face deployment issues that may not exist in the public cloud, while security in the cloud can pose a greater risk than on-site.

Let’s take a deeper look at some of the overarching challenges of Kubernetes and identify possible solutions.

5 Kubernetes challenges and their solutions

To reiterate what we’ve discussed so far, deploying Kubernetes requires identifying and understanding potential risks and challenges and evaluating solutions. Here are five notable Kubernetes challenges.

1. Security

Security is one of Kubernetes' greatest challenges because of the platform's complexity and vulnerability to attack. When you deploy many containers, vulnerabilities are difficult to detect, and without proper monitoring they can go unnoticed. This gives attackers an easy way to break into your system.

The Tesla cryptojacking attack is one of the best-known examples of a Kubernetes break-in: attackers gained access to Tesla's Kubernetes admin console and used Tesla's cloud resources on Amazon Web Services (AWS) to mine cryptocurrency. There are a few things you can do to avoid these security challenges.

- Harden nodes and containers with Linux security modules like AppArmor and SELinux.

- Enable RBAC (role-based access control). RBAC regulates which resources and operations each authenticated user can access. Depending on users' roles, they're granted certain access rights.

- Use separate containers. Splitting the front end and the back end into separate containers that interact only through regulated channels keeps the private key hidden.
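
As a rough illustration of the RBAC point, the sketch below builds a namespaced Role that can only read pods, plus a RoleBinding that grants it to one user. The namespace `dev`, the role name `pod-reader`, and the user `jane` are placeholder names assumed for this example. The script prints the manifests as a JSON list, which could be saved to a file and reviewed before being applied with `kubectl apply -f`.

```python
import json


def read_only_role(namespace: str, role_name: str) -> dict:
    """Build a Role that only allows reading pods in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": role_name},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group
                "resources": ["pods"],
                "verbs": ["get", "list", "watch"],  # read-only verbs
            }
        ],
    }


def bind_role_to_user(namespace: str, role_name: str, user: str) -> dict:
    """Build a RoleBinding that grants the Role to a single user."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-{user}"},
        "subjects": [
            {
                "kind": "User",
                "name": user,
                "apiGroup": "rbac.authorization.k8s.io",
            }
        ],
        "roleRef": {
            "kind": "Role",
            "name": role_name,
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }


if __name__ == "__main__":
    # Placeholder names, assumed for illustration only.
    items = [
        read_only_role("dev", "pod-reader"),
        bind_role_to_user("dev", "pod-reader", "jane"),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Granting only the verbs a role needs keeps the impact of a leaked credential small.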

2. Networking

Traditional networking approaches are not very compatible with Kubernetes. As a result, the challenges you face continue to grow with the scale of your deployment. Some problem areas include complexity and multi-tenancy.

- When the deployment spans more than one cloud infrastructure, Kubernetes networking becomes more complex. The same thing happens when workloads are mixed across architectures like virtual machines (VMs) and Kubernetes.

- Similar issues arise for tools that rely on static IP addresses and ports. Because pod IP addresses are assigned dynamically and change as pods are rescheduled, implementing IP-based policies is challenging.

- Multi-tenancy problems come up when multiple workloads share resources. Other workloads in the same environment are affected if resources are improperly allocated.
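
One common guard against the multi-tenancy problem above is a per-namespace ResourceQuota, so that one tenant cannot consume resources other workloads depend on. The following is a minimal sketch; the namespace `team-a`, the quota name, and the limits are illustrative values, not recommendations.

```python
import json


def namespace_quota(namespace: str, cpu: str, memory: str, max_pods: int) -> dict:
    """Build a ResourceQuota capping total requests and pod count in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,        # sum of CPU requests across all pods
                "requests.memory": memory,  # sum of memory requests across all pods
                "pods": str(max_pods),      # quantities are expressed as strings
            }
        },
    }


if __name__ == "__main__":
    # Illustrative values only.
    print(json.dumps(namespace_quota("team-a", "8", "16Gi", 20), indent=2))
```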

Container Network Interface (CNI) plug-ins help developers solve these networking challenges. They enable Kubernetes to integrate seamlessly into the existing infrastructure and access applications on different platforms.
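
Because pod IP addresses are not stable, network rules in Kubernetes are usually written against labels rather than addresses. The sketch below builds a NetworkPolicy that lets only pods labeled `app: frontend` reach pods labeled `app: backend` on one port. The labels, namespace, and port 8080 are assumptions for illustration, and the policy only takes effect if the cluster's CNI plug-in enforces NetworkPolicy.

```python
import json


def allow_frontend_to_backend(namespace: str, port: int) -> dict:
    """Build a NetworkPolicy selecting pods by label instead of by IP address."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "backend-allow-frontend", "namespace": namespace},
        "spec": {
            # The policy applies to backend pods...
            "podSelector": {"matchLabels": {"app": "backend"}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # ...and admits traffic only from frontend pods.
                    "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace and port.
    print(json.dumps(allow_frontend_to_backend("shop", 8080), indent=2))
```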

You can also solve this problem with a service mesh. A service mesh is a dedicated infrastructure layer that handles service-to-service communication on behalf of the app, so developers can spend less effort on networking and deployment concerns.

These solutions make container communication smooth, fast and secure, resulting in a seamless container orchestration process. You can also use delivery management platforms to perform activities such as managing Kubernetes clusters and logs and ensuring full observability.

3. Interoperability

As with networking, interoperability can be a significant Kubernetes issue. When enabling interoperable cloud-native apps on Kubernetes, communication between the apps can be tricky. It also affects the deployment of clusters, as the app instances they contain may have problems executing on individual nodes in the cluster.

Kubernetes often doesn't run as smoothly in production as it does in development, quality assurance (QA), or staging. Additionally, migrating to an enterprise-class production environment creates many complexities in performance, governance, and interoperability.

Users can implement some of these measures to reduce the interoperability challenges in Kubernetes:

- Use the same API, user interface, and command line

- Prioritize interoperability when addressing production problems

- Enable interoperable cloud-native apps via the Open Service Broker API to increase portability between offers and providers

- Leverage collaborative projects across multiple organizations (Google, Red Hat, SAP, and IBM) that provide services for apps running on cloud-native platforms

4. Storage

Storage is an issue with Kubernetes for larger organizations, especially organizations with on-premises servers. One of the reasons is that they manage their entire storage infrastructure without relying on cloud resources. This can lead to vulnerabilities and capacity shortages.

Even if a separate IT team manages the infrastructure, it’s difficult for a growing business to manage the storage. In fact, 54% of companies deploying containers on on-premises servers viewed storage as a challenge.

The most lasting solution to storage problems is to move to a public cloud environment and reduce reliance on local servers. Otherwise, it helps to understand the storage options Kubernetes offers and to choose the right one for each workload:

- Ephemeral storage: Refers to the volatile temporary storage attached to your instances during their lifetime – data like cache, session data, swap volume, buffers, and so on.

- Persistent storage: Storage volumes that can be linked to stateful applications such as databases. The data remains available after the life of the individual container has ended.

- Other: Storage and scaling problems can be resolved with persistent volume claims, storage classes, and StatefulSets.
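
To make the ephemeral versus persistent distinction concrete, the sketch below builds a PersistentVolumeClaim and a pod that mounts both an `emptyDir` volume (ephemeral, removed with the pod) and the claim (persistent, outlives the container). The storage class name `standard`, the image name, and the sizes are assumptions; the storage classes actually available depend on the cluster.

```python
import json


def data_claim(name: str, storage_class: str, size: str) -> dict:
    """Build a PersistentVolumeClaim requesting durable storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,  # assumed to exist in the cluster
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_with_volumes(pod_name: str, image: str, claim_name: str) -> dict:
    """Build a pod with one ephemeral volume and one persistent volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": image,
                    "volumeMounts": [
                        {"name": "cache", "mountPath": "/cache"},
                        {"name": "data", "mountPath": "/data"},
                    ],
                }
            ],
            "volumes": [
                # Ephemeral: deleted when the pod goes away.
                {"name": "cache", "emptyDir": {}},
                # Persistent: backed by the claim, survives pod restarts.
                {"name": "data", "persistentVolumeClaim": {"claimName": claim_name}},
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names and sizes.
    claim = data_claim("db-data", "standard", "10Gi")
    pod = pod_with_volumes("db", "example/db:1.0", "db-data")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [claim, pod]}, indent=2))
```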

5. Scaling

Every organization aims to increase the scope of its operations over time. However, if its infrastructure is poorly equipped to scale, that is a major disadvantage. Since Kubernetes microservices are complex and generate a lot of data, diagnosing and fixing any type of problem is a daunting task.

Without automation, scaling can seem impossible. For any business that works in real-time or with mission-critical applications, outages are extremely damaging to revenue and user experience. The same goes for customer-facing services that depend on Kubernetes.

The density of applications and the dynamic nature of the computing environment make the problem worse for some companies. Common pain points include:

- Difficulty managing multiple clouds, clusters, designated users, or policies

- Complex installation and configuration

- Differences in user experience depending on the environment

Another problem with scaling is that the Kubernetes infrastructure may not work well with an organization's other tools. When the integration is faulty, expansion becomes a difficult undertaking.

There are a few ways to solve the scaling problem in Kubernetes. You can use the autoscaling/v2beta2 API version (autoscaling/v2 in newer Kubernetes releases) to specify multiple metrics for the horizontal pod autoscaler to scale on.
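
A minimal sketch of a multi-metric horizontal pod autoscaler follows. It uses `autoscaling/v2`, the stable successor to `autoscaling/v2beta2`; older clusters may need the beta version instead. The deployment name `web` and the targets are illustrative assumptions. The helper `desired_replicas` is a simplified version of the autoscaler's documented rule: compute `ceil(currentReplicas * currentValue / targetValue)` for each metric, take the largest result, and clamp it to the configured bounds. The real controller also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import json
import math


def hpa_manifest(deployment: str, min_replicas: int, max_replicas: int) -> dict:
    """Build an HPA that scales a Deployment on CPU and memory utilization."""
    def utilization(resource: str, percent: int) -> dict:
        return {
            "type": "Resource",
            "resource": {
                "name": resource,
                "target": {"type": "Utilization", "averageUtilization": percent},
            },
        }

    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # Illustrative targets; tune them for the actual workload.
            "metrics": [utilization("cpu", 60), utilization("memory", 75)],
        },
    }


def desired_replicas(current_replicas, metrics, min_replicas, max_replicas):
    """Simplified HPA rule. `metrics` is a list of (current_value, target_value)."""
    proposals = [
        math.ceil(current_replicas * current / target)
        for current, target in metrics
    ]
    # With several metrics, the largest proposed replica count wins.
    return max(min_replicas, min(max_replicas, max(proposals)))


if __name__ == "__main__":
    print(json.dumps(hpa_manifest("web", 2, 10), indent=2))
    # CPU at 90% against a 60% target, memory at 50% against a 75% target.
    print(desired_replicas(4, [(90, 60), (50, 75)], 2, 10))
```

In the example call, the CPU metric proposes six replicas and the memory metric proposes three, so the autoscaler would scale to six.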

If that doesn’t solve your problem, you can choose an open-source container manager to run Kubernetes in production. It helps manage and scale applications hosted on the cloud or on-premises. Some of the functions of these container managers include:

- Joint infrastructure management across clusters and clouds

- User-friendly interface for configuration and deployment

- Easy scaling of pods and clusters

- Management of workload, RBAC, and project guidelines

Overcome Kubernetes challenges for easy deployment

Kubernetes helps you manage and scale container, node, and cluster deployments. You can face many challenges when managing and scaling between cloud providers. But if you master these challenges and create solutions that focus on your problem areas, Kubernetes offers you a simple and declarative model for programming complex deployments.

Server outages and downtime are common when scaling infrastructure to meet the increasing demand. Worry less and do more with an autoscaler that automatically scales your infrastructure on the go.