Latency affects the usability and performance of your system. Even when your servers are well-resourced, high latency causes everything to respond slowly and applications to feel unresponsive. It is especially a problem for real-time applications such as trading platforms, video calling, and gaming, where every millisecond matters.

Low latency is both a system performance and a user experience requirement. You must minimize latency in the backend, network, and database, among other components of your stack, so that everything will be quick and efficient.

In this guide, you’ll learn practical strategies for latency reduction. We’ll first cover the common reasons why latency is high. Then we’ll cover how to reduce latency in your application.

Learn how Middleware’s Application Monitoring can improve performance in real-time environments.

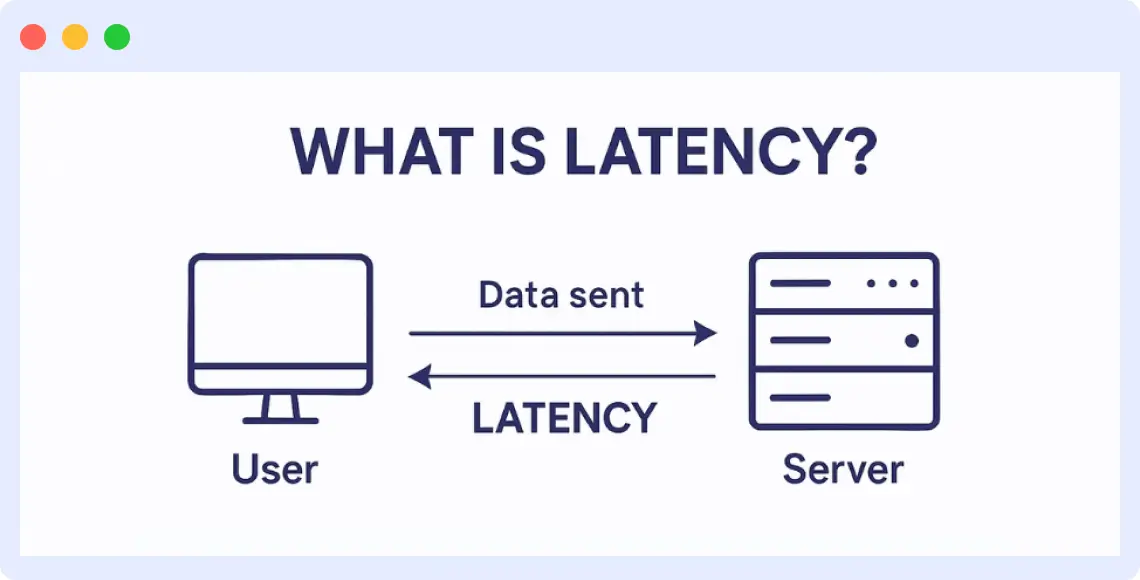

Understanding Latency

Latency, also referred to as response time, is the delay in getting a response after making a request. In computing systems, it is the interval between a user’s action and the corresponding response given by the system. Lower latency provides improved speed and performance in applications, whereas high latency causes delays that can render applications sluggish or unresponsive.
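To make the definition concrete, here is a minimal sketch of measuring latency as the time between issuing a request and receiving its response. The helper `measureLatency` and the simulated call `fakeRequest` are hypothetical names invented for this example; in a real application you would pass in your own network or database call.

```javascript
// Measures how long an async operation takes, in milliseconds.
// `measureLatency` is a hypothetical helper written for this example.
async function measureLatency(operation) {
  const start = performance.now(); // high-resolution timer (browsers and Node.js)
  const result = await operation();
  const latencyMs = performance.now() - start;
  return { result, latencyMs };
}

// Stand-in for a real network or database call: resolves after `delayMs`.
function fakeRequest(delayMs) {
  return new Promise((resolve) => setTimeout(() => resolve("ok"), delayMs));
}

measureLatency(() => fakeRequest(50)).then(({ result, latencyMs }) => {
  console.log(`Response "${result}" arrived after ${latencyMs.toFixed(1)} ms`);
});
```

The measured value will vary from run to run, which is one reason latency is usually tracked across many requests rather than judged from a single one.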

There are different types of latency that determine the performance of an application:

1. Network Latency

This is the amount of time it takes data to travel over the network, like from the server to the client. Imagine you’re on a video call with a friend in another country, and there’s a delay before your friend hears and replies. That awkward delay is caused by network latency.

The same thing can also happen to someone trading stocks or cryptocurrency and might lead to a loss of funds. Latency reduction is crucial in such scenarios to ensure real-time responsiveness and avoid negative outcomes.

2. Application Latency

This is the time it takes for the application to process a request, including internal logic and service-to-service calls. An example is tapping the “Request Ride” button in a ride-hailing app like Uber. Sometimes it takes several seconds, or even minutes, before you see available drivers.

Now, imagine you are rushing somewhere for something very important; you can see how frustrating that can be. That’s what happens when there’s high latency in your application.

3. Database Latency

This is the time taken to execute a read or write on a database as part of handling a request. You might have noticed a delay when loading items in a food delivery or e-commerce app. That could be due to database latency.

The app might be querying a large table of data and filtering it based on location and availability. If the table is too big or the query is not optimized, it might take a while to get those items.

4. Storage Latency

This is how long it takes to read data from, or write data to, the place where it is stored. That could be your own hard drive or cloud storage. Apps that let you save large images and video files are a good example. When you open a high-resolution image, it can take several seconds to load. That delay might not be caused by your internet connection or the app itself; it might come from the cloud storage service or a slow disk.

Causes of High Latency

It’s important to know why latency is high before you think about reducing it. This section will talk about some of the most common reasons for high latency.

1. Network-Related Issues

DNS resolution delays, ISP throttling, and long distances between the client and the server can all increase network latency. Each one adds to the time it takes to send data, and the effect compounds in programs that send and receive data back and forth many times.

2. Server-Side Problems

Missing caches, ineffective load balancing, and slow or overloaded APIs can all contribute to high backend latency. Response times suffer when requests aren’t distributed evenly or when results that could be cached are recomputed for every request.

3. Application Issues

Blocking I/O operations, synchronous code in asynchronous contexts, or poorly structured logic can all cause latency in an application. These problems make the application take longer to process each request, which makes responses to users take longer.
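One common form of poorly structured logic is awaiting independent I/O calls one after another. The sketch below compares that with starting the calls together. The `simulateIo` helper and the 100 ms delays are assumptions standing in for real database or API calls, and the approach only applies when the calls do not depend on each other.

```javascript
// Simulated I/O call that resolves with `value` after `delayMs` milliseconds.
function simulateIo(value, delayMs) {
  return new Promise((resolve) => setTimeout(() => resolve(value), delayMs));
}

// Sequential: each call waits for the previous one, so the delays add up.
async function loadSequentially() {
  const user = await simulateIo("user", 100);
  const orders = await simulateIo("orders", 100);
  return [user, orders];
}

// Concurrent: both calls start immediately, so the total time is roughly
// that of the slowest single call rather than the sum.
async function loadConcurrently() {
  return Promise.all([simulateIo("user", 100), simulateIo("orders", 100)]);
}

async function compare() {
  let start = Date.now();
  await loadSequentially();
  const sequentialMs = Date.now() - start;

  start = Date.now();
  await loadConcurrently();
  const concurrentMs = Date.now() - start;

  console.log(`sequential: ~${sequentialMs} ms, concurrent: ~${concurrentMs} ms`);
  return { sequentialMs, concurrentMs };
}

compare();
```

With these delays, the sequential version should take about twice as long as the concurrent one.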

4. Database and Storage Problems

Latency can rise sharply if database queries are inefficient, indexes are missing, or storage access is slow. Even simple tasks can take a long time when the system has to scan large datasets or wait for disk I/O.
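The difference between scanning a dataset and using an index can be illustrated in memory. This is only an analogy: the `orders` array is a made-up table, and a `Map` stands in for a database index (real databases typically use structures such as B-trees).

```javascript
// Hypothetical in-memory "table" of orders, used only for illustration.
const orders = [];
for (let id = 1; id <= 100000; id++) {
  orders.push({ id, customerId: id % 1000, total: id * 2 });
}

// Without an index: check every row (comparable to a full table scan).
function findByCustomerScan(customerId) {
  return orders.filter((order) => order.customerId === customerId);
}

// Build an index once: customerId -> matching rows.
const customerIndex = new Map();
for (const order of orders) {
  if (!customerIndex.has(order.customerId)) {
    customerIndex.set(order.customerId, []);
  }
  customerIndex.get(order.customerId).push(order);
}

// With an index: jump straight to the matching rows.
function findByCustomerIndexed(customerId) {
  return customerIndex.get(customerId) ?? [];
}

console.log(findByCustomerScan(42).length);    // 100
console.log(findByCustomerIndexed(42).length); // 100
```

Both functions return the same rows, but the scan touches all 100,000 entries on every call while the indexed lookup does not.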

5. Frontend and Client-Side Issues

Render-blocking JavaScript, unoptimized assets, and excessive DOM manipulation can all cause latency on the client side. They slow down how quickly the browser can load and display content, especially on less powerful devices or slower networks.

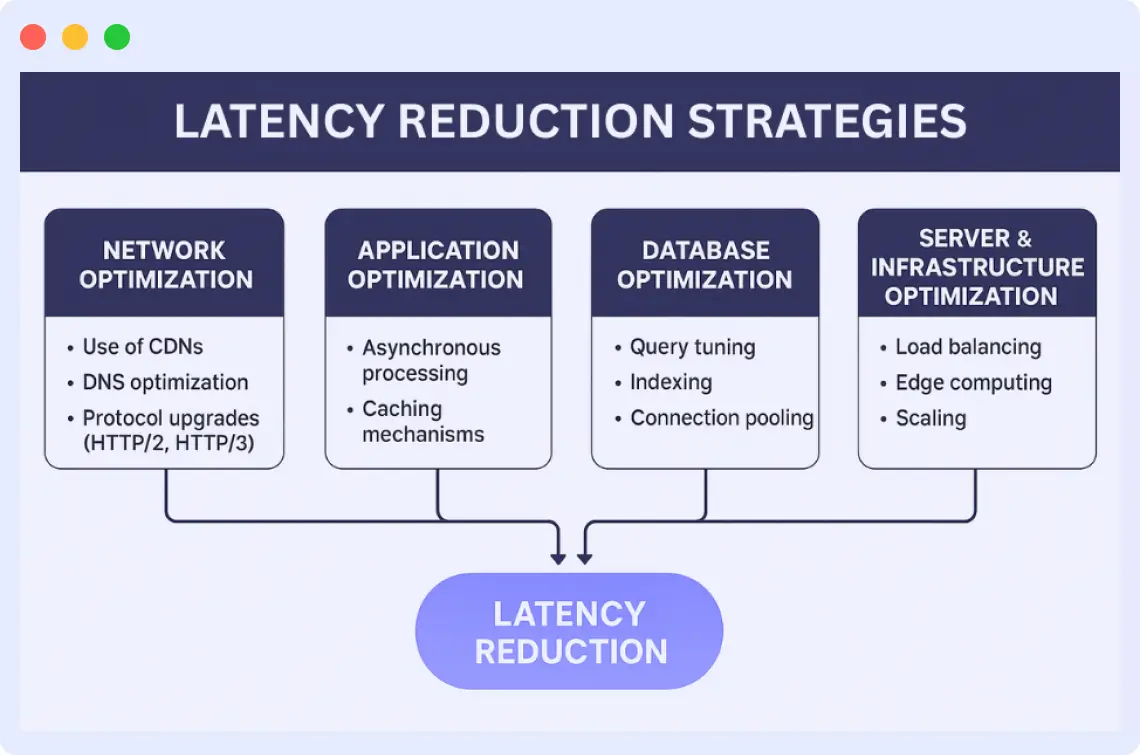

Strategies for Latency Reduction

In this section, we’ll talk about useful tips you can use to make your app run better and respond more quickly.

Improving the Network for Latency Reduction

Network optimization focuses on reducing delays caused by data being transferred between users and servers. Latency can be reduced by improving how data is routed, delivered, and processed. The following approaches help minimize delay:

- Use of CDNs: Content delivery networks (CDNs) keep copies of the static content (such as images, CSS, and JavaScript) of your website or application on servers located all over the world. Users fetch data from the closest server rather than a far-off origin server, which shortens the distance the data has to travel. This results in quicker load times, particularly for users far from the origin.

- Optimizing DNS: Slow or inefficient DNS resolution can add milliseconds to each request. DNS optimization includes reducing the number of DNS lookups, selecting faster DNS providers, turning on DNS caching, and minimizing redirect chains. These steps speed up domain resolution and decrease the time to first byte (TTFB).

- Upgrading to HTTP/2 and HTTP/3: HTTP/2 reduces latency by compressing headers and allowing multiple requests to share a single connection (multiplexing). HTTP/3, which is based on QUIC, goes further by reducing handshake delays and handling packet loss better. Both protocols speed up data transfer, and HTTP/3 helps most on unreliable networks.
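To see why handshake delays and server distance both matter, the rough arithmetic below estimates how long a new connection takes to set up before the first request can be sent. The round-trip counts are the commonly cited figures for a fresh connection without session resumption, and the 80 ms round-trip time is an assumed value, not a measurement.

```javascript
// Round trips needed before the first HTTP request can be sent on a new
// connection (no session resumption). Commonly cited counts, for illustration.
const SETUP_ROUND_TRIPS = {
  "TCP + TLS 1.2": 3, // 1 (TCP handshake) + 2 (TLS 1.2 handshake)
  "TCP + TLS 1.3": 2, // 1 (TCP handshake) + 1 (TLS 1.3 handshake)
  "QUIC (HTTP/3)": 1, // transport and TLS 1.3 handshakes combined
};

// Estimated connection setup delay for a given round-trip time (RTT).
function setupDelayMs(roundTrips, rttMs) {
  return roundTrips * rttMs;
}

const rttMs = 80; // assumed RTT between a client and a distant server
for (const [stack, roundTrips] of Object.entries(SETUP_ROUND_TRIPS)) {
  console.log(`${stack}: ~${setupDelayMs(roundTrips, rttMs)} ms before the first request`);
}
```

Lowering the round-trip time (for example, by serving from a nearby CDN node) and lowering the number of round trips (by using a newer protocol) both shrink this setup cost.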


Optimizing Applications for Latency Reduction

Response time can be reduced by optimizing the way your app handles requests and data. These methods will speed up and improve your app:

- Asynchronous Processing: With asynchronous operations, your application can work on more than one task at a time. It doesn’t have to wait for one task to finish before starting another, as it does with synchronous code. For instance, a web app can display the information it already has while fetching more in the background.

Synchronous code:

    console.log("1. Start");

    function syncTask() {
      console.log("2. Running a synchronous task");
    }

    syncTask();

    console.log("3. End");

Output:

    1. Start
    2. Running a synchronous task
    3. End

Asynchronous code:

    console.log("1. Start");

    // Schedule the callback to run after 1000 ms without blocking.
    setTimeout(() => {
      console.log("2. Running an asynchronous task");
    }, 1000);

    console.log("3. End");

Output:

    1. Start
    3. End
    2. Running an asynchronous task

While the timer set by setTimeout counted down for 1 second, the application didn’t wait. It moved on to the next statement, and the asynchronous callback ran once the timer expired.

- Caching Mechanisms: Caching stores frequently used data in memory so that the application doesn’t have to compute or fetch it every time. Whether it’s page content, API responses, or database query results, caching cuts down on repeated processing and can reduce latency significantly.

- Lazy Loading: This means loading only the data or components that a user needs right now, not everything at once. This method shortens the initial load time and makes the app respond faster.
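The caching idea from the list above can be sketched as a small in-memory cache with a time-to-live (TTL). `TtlCache` and `expensiveLookup` are hypothetical names for this example, not a library API, and the 60-second TTL is an arbitrary choice.

```javascript
// A tiny in-memory cache with time-to-live (TTL) expiry.
// `TtlCache` is a hypothetical class for illustration, not a library API.
class TtlCache {
  constructor(ttlMs) {
    this.ttlMs = ttlMs;
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  // Returns the cached value for `key`, or computes and stores it via `loader`.
  getOrLoad(key, loader, now = Date.now()) {
    const entry = this.entries.get(key);
    if (entry && entry.expiresAt > now) {
      return entry.value; // cache hit: skip the expensive work
    }
    const value = loader(key); // cache miss or expired entry: do the work
    this.entries.set(key, { value, expiresAt: now + this.ttlMs });
    return value;
  }
}

// Stand-in for an expensive computation or database query.
let loadCount = 0;
function expensiveLookup(productId) {
  loadCount += 1;
  return { productId, name: `Product ${productId}` };
}

const cache = new TtlCache(60_000); // keep entries for 60 seconds
cache.getOrLoad(7, expensiveLookup);
cache.getOrLoad(7, expensiveLookup);
console.log(loadCount); // 1: the second call was served from the cache
```

The TTL is a trade-off: a longer value avoids more work but risks serving stale data, so the right setting depends on how often the underlying data changes.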

Improving the Database for Latency Reduction

Databases are a common cause of latency when there is a lot of data or traffic. Improving how your app works with the database can make responses faster and better.

Learn how Middleware’s Database Monitoring helps you optimize queries and indexing.

- Query Tuning: This involves changing or rewriting SQL queries so that they run more quickly. It includes limiting the data that is returned, cutting down on unnecessary joins, and avoiding full table scans. Well-structured queries get results faster and put less stress on the database server. Here’s an example:

      -- Before
      SELECT * FROM orders
      WHERE customer_id = 100
      ORDER BY created_at DESC;

      -- After: selects only the needed columns and limits the rows returned.
      -- The query does not create an index itself; it benefits from one
      -- on (customer_id, created_at).
      SELECT id, order_total, status
      FROM orders
      WHERE customer_id = 100
      ORDER BY created_at DESC
      LIMIT 10;

- Indexing: Indexes help the database find rows quickly. They work like the index of a book, pointing to where the matching data is stored. Without indexes, the database has to look through every row to find a match, which takes time. With the right indexing, lookups and queries are both much faster.

- Connection Pooling: This lets your app use database connections that are already open instead of making a new one for each request. Not having to set up connections over and over again saves time and helps the system handle more requests better.
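Connection pooling, described above, can be sketched with a small generic pool. This is a simplified illustration rather than a production implementation: `ConnectionPool` is a hypothetical class, the connection factory is a fake that only counts how many connections were opened, and error handling and timeouts are omitted.

```javascript
// A minimal connection pool sketch. `createConnection` is a caller-supplied
// factory; in a real app it would open a database connection.
class ConnectionPool {
  constructor(createConnection, maxSize) {
    this.createConnection = createConnection;
    this.maxSize = maxSize;
    this.idle = [];    // connections ready for reuse
    this.total = 0;    // connections created so far
    this.waiters = []; // resolvers for callers waiting on a free connection
  }

  async acquire() {
    if (this.idle.length > 0) return this.idle.pop(); // reuse an open connection
    if (this.total < this.maxSize) {
      this.total += 1;
      return this.createConnection(); // open a new one only while under the limit
    }
    // Pool exhausted: wait until another caller releases a connection.
    return new Promise((resolve) => this.waiters.push(resolve));
  }

  release(connection) {
    const waiter = this.waiters.shift();
    if (waiter) waiter(connection); // hand the connection straight to a waiter
    else this.idle.push(connection);
  }
}

// Usage with a fake connection factory that counts how many were opened.
let opened = 0;
const pool = new ConnectionPool(async () => ({ id: ++opened }), 2);

async function runQuery(label) {
  const connection = await pool.acquire();
  try {
    return `${label} ran on connection ${connection.id}`;
  } finally {
    pool.release(connection); // always return the connection to the pool
  }
}

async function demo() {
  const results = [];
  for (const label of ["q1", "q2", "q3"]) results.push(await runQuery(label));
  return results;
}

demo().then((results) => console.log(results, `connections opened: ${opened}`));
```

Because the three queries run one after another, they all reuse the first connection, so only one connection is ever opened. In practice you would normally rely on the pooling built into your database driver rather than writing your own.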

Improving the Server & Infrastructure

The way that your infrastructure and servers are set up has a major impact on how quickly your application responds. By streamlining these elements, you can handle traffic more effectively and process requests closer to the user.

- Load Balancing: This is the process of distributing incoming traffic among several servers so that no single server is overloaded. It speeds up responses, improves availability, and keeps latency low even under high traffic.

- Edge Computing: This moves processing closer to the user, typically to data centers at or near the “edge” of the network. Processing requests near their source minimizes latency, particularly for real-time applications.

- Scaling: Scaling means adjusting computing resources up or down to match demand. You can scale horizontally by increasing the number of server instances or vertically by enlarging the current servers.
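As a rough illustration of load balancing, the sketch below implements round-robin selection, one of the simplest distribution strategies. The server names are placeholders, and real load balancers usually add health checks and weighting on top of this.

```javascript
// Round-robin load balancing sketch: each call picks the next server in turn.
// The server names are placeholders for this example.
function createRoundRobin(servers) {
  if (servers.length === 0) throw new Error("at least one server is required");
  let next = 0;
  return function pickServer() {
    const server = servers[next];
    next = (next + 1) % servers.length; // wrap around to the first server
    return server;
  };
}

const pickServer = createRoundRobin(["app-1", "app-2", "app-3"]);
const assignments = [];
for (let i = 0; i < 6; i++) assignments.push(pickServer());
console.log(assignments.join(", "));
// app-1, app-2, app-3, app-1, app-2, app-3
```

Scaling horizontally fits naturally with this approach: adding an instance means adding another entry to the list of servers.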

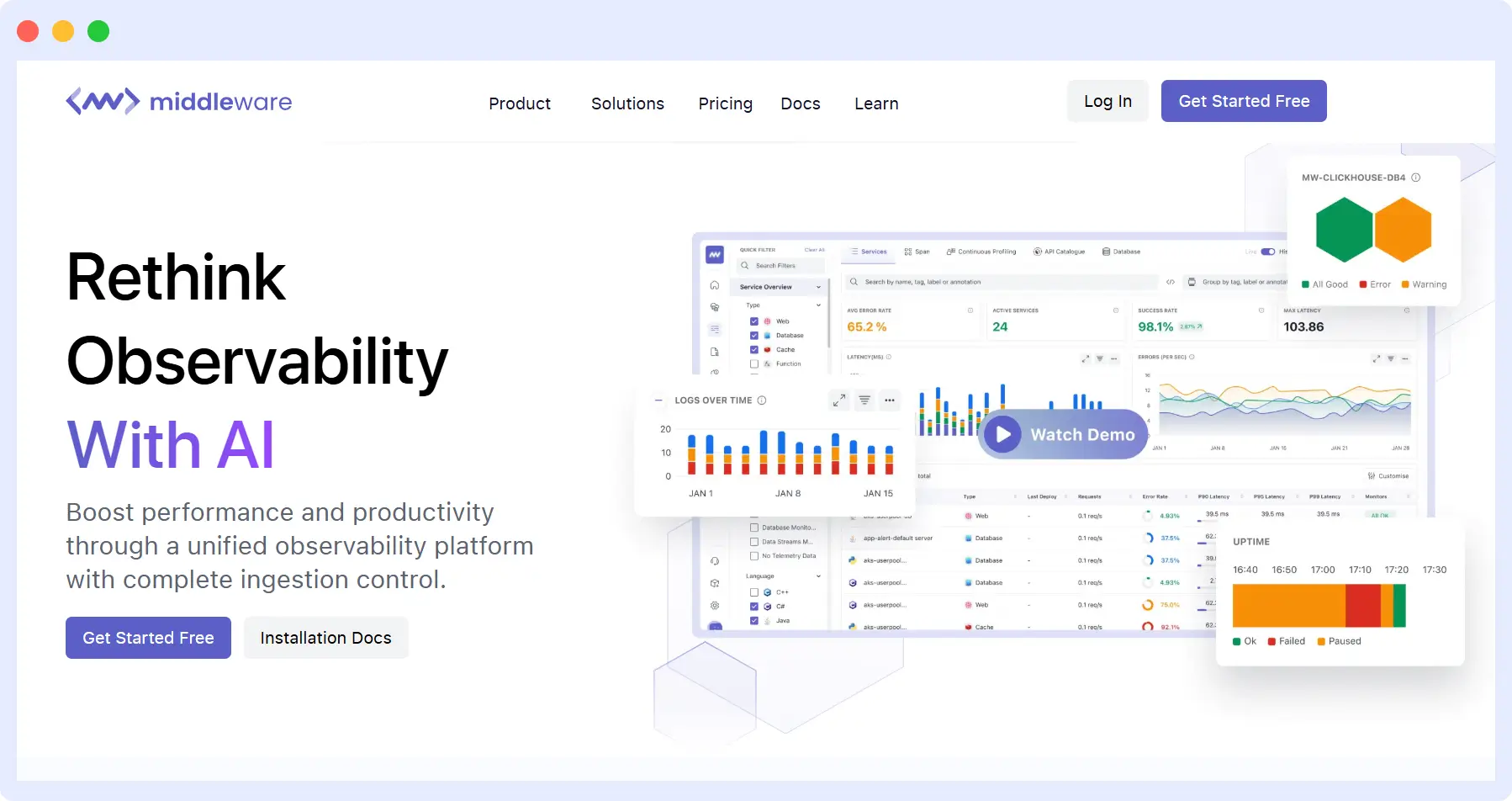

Monitoring and Continuous Improvement

Latency needs to be monitored continuously if you want to maintain peak performance. Even after you apply the latency reduction measures outlined in this guide, changes in traffic, code, or infrastructure can introduce new delays. Continuous infrastructure monitoring catches these problems at an early stage, so corrective action can be taken before they affect users.
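When reviewing latency data, percentiles are often more informative than averages because a few slow requests can hide behind a healthy-looking mean. The sketch below computes percentiles with the nearest-rank method; the sample values are made up, and monitoring tools may use a different percentile definition.

```javascript
// Nearest-rank percentile of a list of latency samples (in milliseconds).
function percentile(samples, p) {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[Math.max(rank, 1) - 1];
}

// Hypothetical response times collected from request logs.
const latenciesMs = [120, 95, 110, 105, 980, 100, 115, 90, 130, 102];

console.log("p50:", percentile(latenciesMs, 50)); // 105
console.log("p95:", percentile(latenciesMs, 95)); // 980
```

Here the median looks fine, while the 95th percentile exposes the single 980 ms outlier that some users actually experienced.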

If you want to monitor latency across your stack, there are various tools that can help. Middleware is one of them. It provides real-time observability and lets you see how your system is performing.

Using Middleware to Monitor Latency

Middleware is different from other monitoring tools because it gives teams end-to-end visibility. This lets them see exactly where a delay is coming from, whether it’s the database, the network, or a third-party service.

Try Middleware’s real-time monitoring with a free trial.

Here’s a breakdown of how Middleware can help with latency reduction:

- Real-time Monitoring: Latency problems are time-sensitive. Middleware monitors your application in real time and gives you instant feedback on its performance.

- Full-Stack Tracing: With Middleware, you can trace requests as they travel from the frontend to the backend to the database. This helps you see the exact point where an issue occurs.

- Easy to Set Up: Unlike other monitoring tools, Middleware is very easy to set up and get started with. There’s no complex configuration involved.

- Smart Alerts: With Middleware, you can customize alerts so that you get notified immediately when something goes wrong. This way, you can identify problems before they become serious.

With continuous monitoring and clear performance insights from Middleware, teams can stop guessing and start making smart, data-driven decisions that make the system more responsive.

Conclusion

Latency directly impacts how responsive and fast your app feels to users. In this post, we discussed the common causes of high latency and shared practical advice on how to reduce it.

You can make sure your application has low latency as it grows by following the steps outlined and using tools like Middleware to check performance on a regular basis.

Get started with Middleware in minutes. Read our quick-start guide.

FAQs

What is latency?

Latency is the delay between when you take an action, like clicking a button, and when you see a response. It’s a way to measure how fast (or slow) your app feels to the user.

What is latency reduction?

Latency reduction means making the system respond faster by shortening the time it takes for different parts of the system to communicate with each other.

What are the types of latency?

There are many types of latency, such as network latency, application latency, database latency, storage latency, and client-side latency.

Why does latency matter for user experience?

Apps may respond slowly or not at all if latency is high, which is especially damaging for apps that must function in real time. Low latency makes interactions smoother and speeds up loading times.

What tools can I use to measure latency?

You can use Middleware, Datadog, or New Relic to check on how quickly your system responds. They show you where things are getting stuck so you can fix them.