Have you ever had to sift through multiple files and folders just to find one vital piece of information? You may have spent an entire day on a search that would have taken just a few minutes with a proper filing system.

Applications and systems constantly generate data, and making sense of all this information is an equally big task. Thankfully, applications and systems also produce logs, which store vital information about system health, performance, and user activity. These logs help us understand what is happening across the environment, such as which system an event came from and what it involved.

However, like sifting through physical files, wading through countless individual log files scattered across your infrastructure can be daunting.

This is where log aggregation comes in. It acts as the missing puzzle piece for efficient log management and unlocks the power of your system’s hidden insights. It consolidates log data from multiple sources, including network nodes, microservices, applications, and systems, into a centralized repository. Centralizing logs this way helps us find the information we need easily and provides additional detail that helps improve system performance, detect anomalies, and support decision-making.

Let’s explore log aggregation in greater detail: what it is, how it works, and how it can make our lives easier.

What is log aggregation?

Log aggregation is an aspect of log management that refers to the process of collecting log data from various sources across your IT infrastructure and centralizing it in a single location. This centralized repository allows for efficient storage, analysis, and visualization of logs, providing a consolidated view of system activity.

How does log aggregation work?

If you are wondering how log management and log aggregation differ, here is a simple distinction. Log aggregation focuses on collecting and storing logs, while log management encompasses the entire lifecycle of logs, from collection and aggregation to analysis, alerting, and archiving. Thus, log aggregation is a crucial building block for effective log management.

By having all your log data stored in a centralized repository, you can easily observe all of your networks and systems. This makes it easier to diagnose issues or find anomalies without having to interpret each log file individually.

The core components of the log aggregation process include:

- Data Collection: The process starts with log collectors, also known as agents, installed on your servers, applications, and devices. These agents continuously gather log data and send it to the central repository.

- Centralized Storage: Next, a central storage solution, like a dedicated log server or a cloud-based platform, houses all the aggregated log data from various sources. This ensures easy access and efficient log management practices.

- Parsing and Indexing: Once collected, logs need to be parsed to extract relevant information like timestamps, severity levels, and message content. Indexing then organizes this data for efficient searching and retrieval.

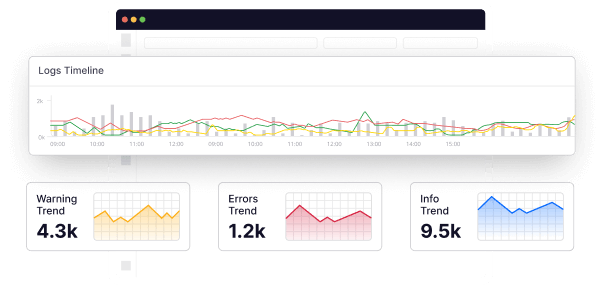

- Visualization: Log aggregation tools typically offer built-in dashboards and visualization capabilities. These allow you to analyze trends, identify patterns, and gain insights from your log data in a user-friendly format.
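To make the parsing and indexing step above more concrete, here is a minimal sketch in Python. It assumes a hypothetical line format of `<ISO timestamp> <LEVEL> <message>` and uses an in-memory class (`LogIndex`, a name invented for this example) as a stand-in for the central repository. Real aggregation tools use their own parsers and storage engines, so treat this as an illustration of the idea rather than how any particular product works.

```python
import re
from collections import defaultdict
from datetime import datetime

# Assumed line format for this sketch: "<ISO timestamp> <LEVEL> <message>"
LINE_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$"
)

def parse_line(line, source):
    """Extract timestamp, severity level, and message from one raw log line."""
    match = LINE_PATTERN.match(line.strip())
    if match is None:
        return None  # unparseable lines are simply skipped in this sketch
    return {
        "source": source,
        "timestamp": datetime.fromisoformat(match["timestamp"]),
        "level": match["level"],
        "message": match["message"],
    }

class LogIndex:
    """In-memory stand-in for a central store with a tiny inverted index."""

    def __init__(self):
        self.records = []
        self.by_level = defaultdict(list)  # level -> record positions
        self.by_token = defaultdict(set)   # lowercase word -> record positions

    def add(self, record):
        position = len(self.records)
        self.records.append(record)
        self.by_level[record["level"]].append(position)
        for token in re.findall(r"\w+", record["message"].lower()):
            self.by_token[token].add(position)

    def search(self, word, level=None):
        """Return records containing `word`, optionally limited to one level."""
        positions = self.by_token.get(word.lower(), set())
        if level is not None:
            positions = positions & set(self.by_level.get(level, []))
        return [self.records[p] for p in sorted(positions)]

# Hypothetical raw lines, as two agents might forward them.
raw_logs = {
    "web-1": ["2024-05-01T10:00:00 INFO request served in 12 ms",
              "2024-05-01T10:00:03 ERROR upstream timeout"],
    "db-1": ["2024-05-01T10:00:02 WARN slow query detected",
             "2024-05-01T10:00:04 ERROR connection timeout"],
}

index = LogIndex()
for source, lines in raw_logs.items():
    for line in lines:
        record = parse_line(line, source)
        if record is not None:
            index.add(record)

timeouts = index.search("timeout", level="ERROR")
print([r["source"] for r in timeouts])  # ['web-1', 'db-1']
```

Because every record is indexed by level and by word, a single query finds timeout errors across both sources without opening either log file.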

Types of logs to aggregate

There are many different types of logs that you can aggregate, but some of the most common include:

- Server logs: These logs record activity on a server, including startup, shutdown, errors, and requests.

- Application logs: These logs track the behavior of an application, including user interactions, errors, and performance metrics.

- Security logs: These logs record security-related events, such as login attempts, access control violations, and suspicious activity.

- Network logs: These logs capture network traffic, including connection attempts, data transfers, and errors.

- System logs: These logs record events related to the operating system, such as hardware failures, software installations, and system restarts.

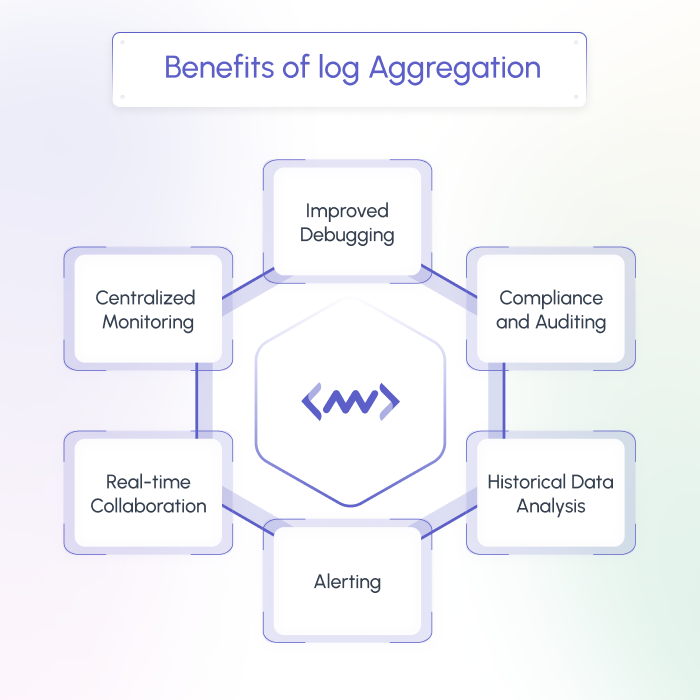

Benefits of log aggregation

Now that we know how log aggregation works, let us have a look at what makes it a crucial part of the overall infrastructure monitoring process. With log aggregation, you can gain significant benefits that can help you enhance your IT operations, security posture, and overall system health.

Some of the key benefits include:

Enhanced operational visibility

The most immediate advantage is the dramatic improvement in operational visibility. You no longer need to spend hours sifting through individual log files scattered throughout your environment.

With centralized logs, you gain a holistic view of system activity, simplifying troubleshooting and pinpointing the root cause of issues much faster. This translates to reduced downtime, improved responsiveness to problems, and a more efficient use of your IT resources.

Performance and reliability improvements

Log aggregation also goes beyond reactive troubleshooting. By correlating logs from different sources, you can identify potential performance bottlenecks and anomalies before they escalate into critical issues.

This proactive approach allows you to take preventative measures, ensuring system stability and optimal performance. Additionally, analyzing resource usage patterns within your logs provides valuable data for capacity planning.

You can effectively scale your infrastructure to meet evolving demands and optimize resource allocation, ensuring your system runs smoothly even under increased pressure.
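As a rough illustration of correlating logs from different sources, the sketch below groups already-parsed records by a shared request ID and looks for the earliest error in each group. The field names (`request_id`, `ts`, `level`) and the sample records are assumptions made for this example. Real systems may correlate on trace IDs, session IDs, or time windows instead.

```python
from collections import defaultdict

# Hypothetical, already-parsed records from three services. The field names
# ("request_id", "ts", "level") are assumptions made for this sketch.
records = [
    {"source": "gateway", "ts": 1.32, "request_id": "r-1", "level": "ERROR", "message": "502 returned"},
    {"source": "orders", "ts": 1.05, "request_id": "r-1", "level": "INFO", "message": "loading order"},
    {"source": "db", "ts": 1.30, "request_id": "r-1", "level": "ERROR", "message": "lock wait timeout"},
    {"source": "gateway", "ts": 1.00, "request_id": "r-1", "level": "INFO", "message": "GET /orders"},
    {"source": "orders", "ts": 1.31, "request_id": "r-1", "level": "ERROR", "message": "query failed"},
    {"source": "gateway", "ts": 2.00, "request_id": "r-2", "level": "INFO", "message": "GET /health"},
]

def correlate(records, key="request_id"):
    """Group records by a shared key and order each group by time."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record[key]].append(record)
    return {k: sorted(v, key=lambda r: r["ts"]) for k, v in grouped.items()}

def first_error(events):
    """Return the earliest ERROR in a time-ordered group, or None."""
    return next((e for e in events if e["level"] == "ERROR"), None)

traces = correlate(records)
for request_id, events in traces.items():
    culprit = first_error(events)
    if culprit is not None:
        print(request_id, "first failed in", culprit["source"], "->", culprit["message"])
```

In this sample the gateway reports the failure to the user, but the time-ordered view shows that the database error came first. That kind of cross-source ordering is hard to see when each log lives in its own file.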

Better security and compliance

Security is another area where log aggregation shines. Centralized logs enable efficient security monitoring. You can identify suspicious activity patterns, detect potential security breaches in their early stages, and ensure the integrity of your systems. Streamlined log management also simplifies the process of complying with industry regulations and internal security policies.

By having all your logs readily accessible and organized, you can easily generate audit reports based on specific criteria and demonstrate adherence to compliance requirements.
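One example of a suspicious activity pattern is a burst of failed logins from the same address. The sketch below flags any IP with at least three failures inside a 60-second sliding window. The event shape (`ts`, `ip`, `action`), the threshold, and the window size are all assumptions chosen for illustration, not recommended values.

```python
from collections import defaultdict, deque

def find_bruteforce_suspects(events, threshold=3, window_seconds=60):
    """Return IPs with at least `threshold` failed logins inside any window."""
    recent = defaultdict(deque)  # ip -> timestamps of recent failures
    suspects = set()
    for event in sorted(events, key=lambda e: e["ts"]):
        if event["action"] != "login_failed":
            continue
        window = recent[event["ip"]]
        window.append(event["ts"])
        # Drop failures that are too old to count toward the current window.
        while window and event["ts"] - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            suspects.add(event["ip"])
    return suspects

# Hypothetical security events gathered from several hosts.
events = [
    {"ts": 0, "ip": "10.0.0.5", "action": "login_failed"},
    {"ts": 0, "ip": "10.0.0.9", "action": "login_failed"},
    {"ts": 10, "ip": "10.0.0.5", "action": "login_failed"},
    {"ts": 15, "ip": "10.0.0.7", "action": "login_ok"},
    {"ts": 20, "ip": "10.0.0.5", "action": "login_failed"},
    {"ts": 100, "ip": "10.0.0.9", "action": "login_failed"},
    {"ts": 200, "ip": "10.0.0.9", "action": "login_failed"},
]

suspects = find_bruteforce_suspects(events)
print(suspects)  # {'10.0.0.5'}
```

The second address also fails three times, but its attempts are spread too far apart to trip the window. The check only works when failures recorded on different hosts land in the same place, which is what aggregation provides.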

In essence, log aggregation empowers you to transform your system’s vast amount of log data from a hidden liability into a valuable asset. By harnessing this data effectively, you gain a deeper understanding of your infrastructure, optimize performance, strengthen your security posture, and make informed decisions to drive your IT operations forward.

Log aggregation tools and technologies

If you want to incorporate log aggregation into your overall process, you are in luck. There are several powerful log aggregation tools on the market, each with its own set of features and functionalities. These help you get the benefits of log management and analysis without needing deep technical expertise. However, picking the right option can still be tricky, and it all comes down to factors like:

- Scalability: Can the tool handle the volume and growth of your log data?

- Ease of Use: How easy is it to set up, configure, and use the tool?

- Integration Capabilities: Does the tool integrate seamlessly with your existing monitoring and security tools?

- Security Features: Does the tool offer robust security features to safeguard your log data?

- Cost: Does the tool fit within your budget? Consider open-source vs. commercial options.

With these factors in mind, here is a look at some of the top log aggregation tools in the market and why you should consider adding them to your tech stack:

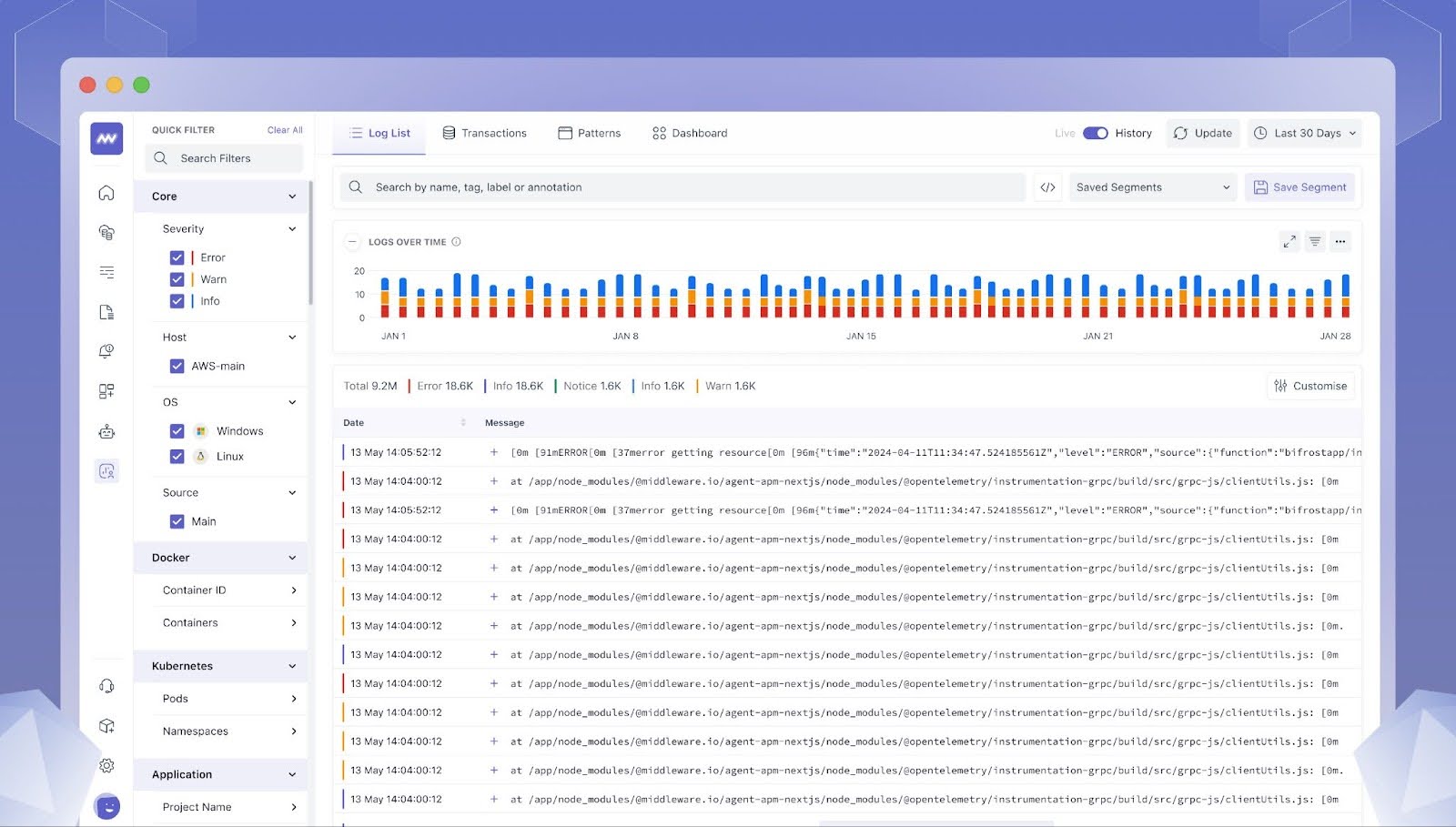

Middleware

While there’s no single “top dog” in log aggregation, Middleware offers a compelling solution within its broader observability platform. Instead of making you juggle separate tools for logs, metrics, and tracing, it unifies everything into a single pane of glass. This consolidated view streamlines analysis: you can correlate issues across different data sources and pinpoint root causes faster.

Furthermore, Middleware goes beyond basic log aggregation. It boasts real-time monitoring, allowing you to react to issues as they unfold. This proactive approach to identifying anomalies can help you address potential problems before they snowball into major outages.

Key features

- Effortless collection: Deploy lightweight agents to gather logs from your servers, applications, and devices with ease.

- Centralized storage and management: Store all your logs in a secure, scalable, cloud-based repository for efficient access and analysis.

- Real-time insights and analytics: Leverage built-in analytics and machine learning to identify trends, anomalies, and potential issues within your log data.

- Seamless integration: Integrate effortlessly with your existing monitoring and security tools for a unified view of your entire IT ecosystem.

Pricing

- Free forever: Free plans for APM, Log, and Infrastructure monitoring, RUM, Synthetic monitoring, Database, and Serverless monitoring.

- Pay-as-you-go: Log monitoring starts at $0.3/GB/month, Infrastructure monitoring at $10/host/month, APM at $20/host/month, Synthetic monitoring at $2 for 10K synthetic checks, Database monitoring at $49 per database host/month, RUM at $1 per 1,000 sessions/month, and Serverless monitoring at $5 per 1M traces/month.

- Enterprise plan: Ideal for large-scale deployments. Custom pricing and contracting are available.

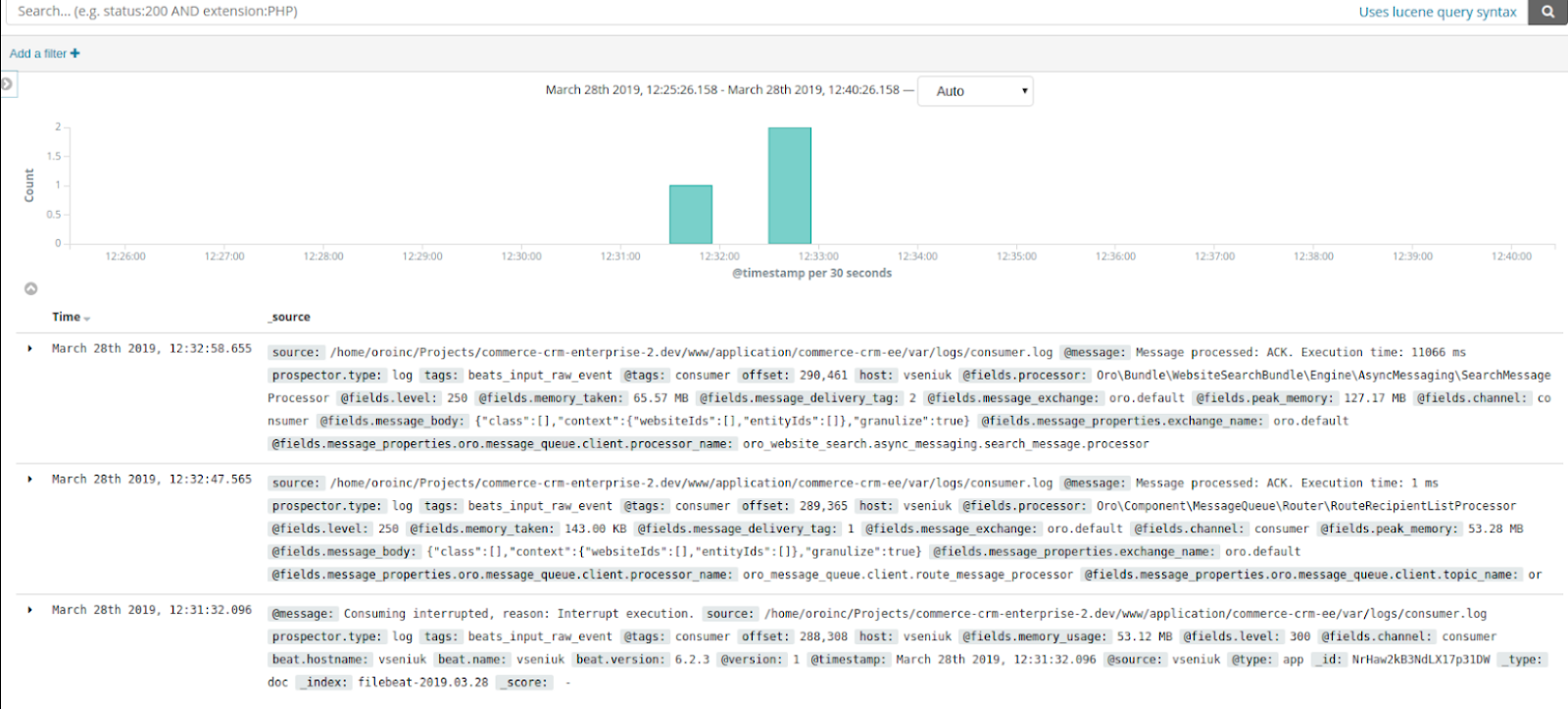

ELK Stack (Elasticsearch, Logstash, Kibana)

ELK Stack is an open-source and highly customizable suite offering powerful search and analytics capabilities. It requires the deployment and configuration of separate components for log collection (Logstash), storage (Elasticsearch), and data visualization (Kibana).

This flexibility allows for deep customization, but installing and configuring the three components separately can be complex for beginners. The stack is therefore best suited to teams with technical expertise and a solid understanding of log data management.

Key features

- Free and open-source platform offering extensive customization options for advanced users.

- Integrates with a vast array of open-source security and log analysis tools.

- Scalable platform that can handle large log volumes, suitable for both small and medium-sized organizations.

- Kibana provides powerful dashboards and visualizations for real-time analysis of log data.

Pricing

- Free and open-source.

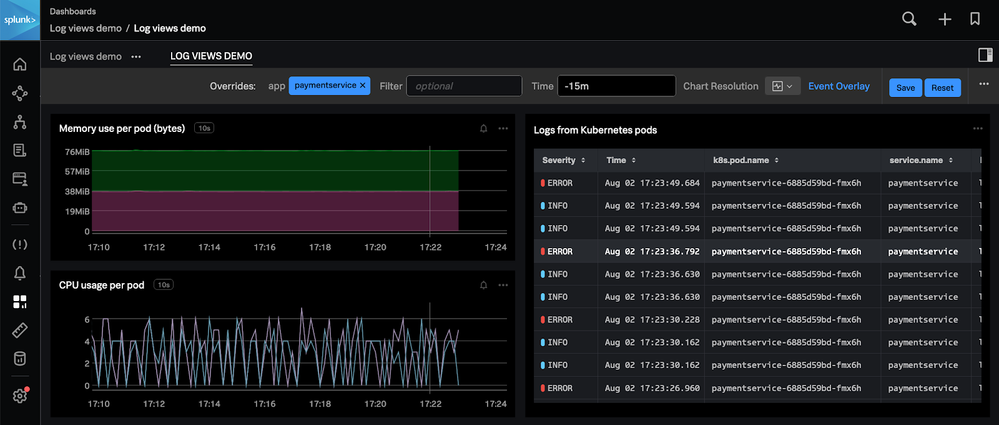

Splunk

Splunk is a comprehensive log management platform with a robust feature set, including advanced capabilities like real-time monitoring and security analytics.

However, it can be complex to set up and manage, and it often requires a significant investment in licensing. Because it is primarily focused on observability and end-to-end visibility into your enterprise performance and metrics, it may not make sense to adopt it for log management or aggregation alone.

Key features

- Offers advanced security features like user behavior analytics (UBA) and entity behavior analytics (EBA) for comprehensive threat detection.

- Integrates with leading security information and event management (SIEM) solutions for a holistic security posture.

- Scalable platform designed to handle massive log volumes from complex IT environments.

- Streamlines compliance reporting with pre-built templates and advanced filtering capabilities.

Pricing

- Flexible pricing based on workload, ingest-based pricing, entity pricing, or activity-based pricing (logs, traces, sessions). Contact the sales team to get an estimate.

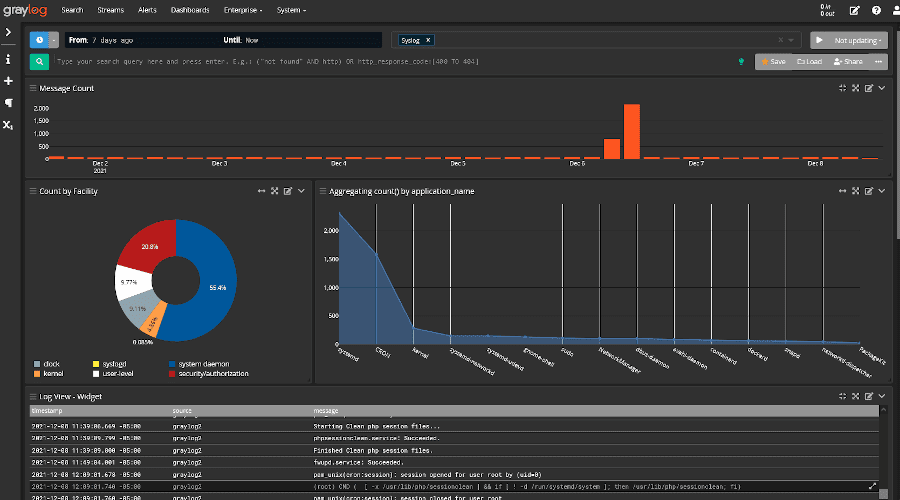

Graylog

Graylog, another open-source option known for its user-friendly interface and scalability, is a powerful log management platform that prioritizes ease of use.

Its primary focus is on being a threat detection and incident response solution that leverages API Security, SIEM, and log management for effective threat intelligence and analysis.

However, it may lack some of the advanced analytics capabilities offered by commercial solutions like Middleware or Splunk.

Key features

- Advanced security mechanisms that allow you to deliver the promise of SIEM without all the complexity or high costs.

- Centralized log management for IT Operations and DevOps teams.

- End-to-end API threat monitoring for early detection and response to any threats.

- Open-source standards and SSPL-licensed centralized log collection for log data aggregation, analysis, and management.

Pricing

- Open-source and completely free.

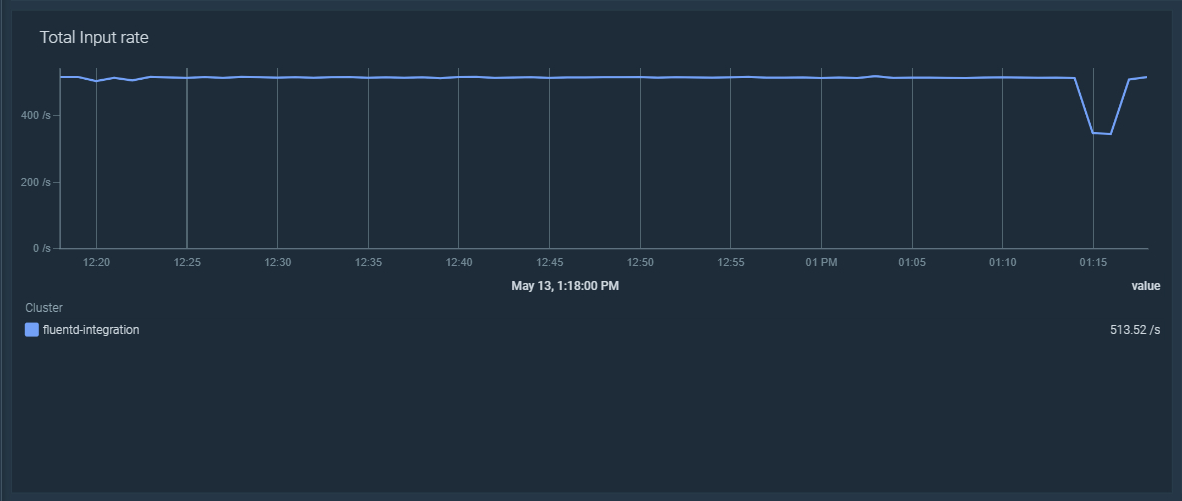

Fluentd

Fluentd is an open-source data collector that efficiently forwards logs to various destinations. Using this tool, you can build a unified logging layer by collecting data from multiple systems and sources, such as Syslog, Apache/Nginx logs, mobile and web app logs, sensors, and IoT devices, and routing it to Elasticsearch, MongoDB, Hadoop, AWS, GCP, Snowflake, and other destinations.

While it is not a complete log management solution, it is a popular free choice for log forwarding within a log aggregation pipeline. However, it often requires integration with other tools for storage, analysis, and visualization, and the support experience is not on par with some of the competing tools covered above.

Key features

- Unified Logging Layer that can capture data sources from backend systems.

- Simple yet flexible, with 500+ plugins connecting it to many data sources and outputs.

- Supported by a community of 5000+ data-driven companies and users who use Fluentd for their specific requirements.

Pricing

- Open-source and completely free.

Why Middleware stands out: Unleashing the power of log aggregation with ease

Middleware stands out as a champion of efficiency, user-friendliness, and powerful features. While other options offer their own strengths, let’s delve into the competitive advantages that empower Middleware to elevate your log management experience:

- Effortless onboarding and scalability: Unlike tools requiring complex deployments and configurations, Middleware boasts a streamlined setup process. With a single script installation, you can begin collecting logs from your entire infrastructure in minutes. Furthermore, Middleware’s cloud-native architecture seamlessly scales to accommodate growing log volumes, ensuring your system remains efficient even as data influx increases.

- Advanced analytics at your fingertips: Our platform integrates powerful analytics capabilities, allowing you to extract valuable insights from your log data. Leverage machine learning algorithms for anomaly detection, identify hidden patterns within your logs, and predict potential issues before they disrupt your operations.

- Seamless integration for a unified view: Middleware understands the importance of working within your existing IT ecosystem. Our platform offers effortless integration with various monitoring and security tools you already use. This eliminates the need for juggling multiple interfaces and provides a unified view of your entire IT infrastructure.

- Cost-effectiveness tailored to your needs: Middleware offers flexible pricing plans for organizations of all sizes. With a generous free tier and transparent pricing structures, you can choose a plan that perfectly aligns with your log volume and specific needs.

- Enterprise-grade security and support: We employ robust security measures to safeguard your log data, ensuring it remains protected and compliant with industry regulations. Additionally, our dedicated support team is always available to assist you with any questions or challenges you may encounter.

Implementing log aggregation

So, want to get started with log aggregation? It all starts with a few simple steps:

- Set up and configure log aggregation tools: Deploy log collection agents on your servers, applications, and devices. These agents will be responsible for gathering and forwarding log data to the central repository.

- Define log sources: Identify all the systems and applications that generate logs you want to collect. Configure log collectors to target these specific sources.

- Configure parsing and filtering: Establish parsing rules to extract relevant information from your logs and define filters to focus on the specific data you need for analysis.

- Plan centralized storage and management: Ensure your chosen central storage solution has adequate capacity to handle the volume of your logs. Implement data retention policies to manage storage efficiently.

By following these guidelines and leveraging the specific documentation of your chosen log aggregation tool, you can configure your log aggregation system for optimal performance and efficiency.
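The steps above can be sketched as a toy pipeline in Python. Everything here is hypothetical: `SourceConfig`, `CentralStore`, and `collect` are names invented for this example, the severity ordering is an assumption, and real tools express the same ideas through their own configuration files and agents. The sketch only shows how a per-source filter and a retention policy fit together.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Assumed severity ordering for this sketch.
LEVELS = {"DEBUG": 10, "INFO": 20, "WARN": 30, "ERROR": 40}

@dataclass
class SourceConfig:
    """One log source and the filter applied to it."""
    name: str
    min_level: str = "INFO"  # drop anything less severe than this

@dataclass
class CentralStore:
    """In-memory stand-in for the central repository."""
    retention: timedelta
    records: list = field(default_factory=list)

    def ingest(self, record):
        self.records.append(record)

    def apply_retention(self, now):
        """Purge records older than the retention period."""
        cutoff = now - self.retention
        self.records = [r for r in self.records if r["timestamp"] >= cutoff]

def collect(source, raw_events, store):
    """Agent step: filter one source's events and forward the rest."""
    threshold = LEVELS[source.min_level]
    forwarded = 0
    for event in raw_events:
        if LEVELS.get(event["level"], 0) >= threshold:
            store.ingest({**event, "source": source.name})
            forwarded += 1
    return forwarded

now = datetime(2024, 5, 8, 12, 0)
store = CentralStore(retention=timedelta(days=7))
api = SourceConfig(name="api", min_level="WARN")

events = [
    {"timestamp": now - timedelta(days=10), "level": "ERROR", "message": "old failure"},
    {"timestamp": now - timedelta(hours=1), "level": "DEBUG", "message": "cache miss"},
    {"timestamp": now - timedelta(hours=1), "level": "ERROR", "message": "payment declined"},
]

forwarded = collect(api, events, store)
store.apply_retention(now)
print(forwarded, len(store.records))  # 2 1
```

The filter drops the DEBUG event before it ever reaches storage, and the retention pass later removes the ten-day-old error, so only one record remains.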

Log aggregation challenges

While log aggregation offers immense benefits, it’s not without its hurdles. Here’s a look at some of the key challenges developers face today:

- Log explosion: The rise of cloud-native applications, microservices architectures, and the Internet of Things (IoT) has led to a massive increase in log data volume. Traditional infrastructure can struggle to keep up, impacting storage costs and real-time analysis capabilities.

- Complexity: Logs come in diverse formats (structured, semi-structured, unstructured) from various sources. This heterogeneity makes it difficult to centralize, parse, and analyze them effectively. Correlating logs across different systems for root cause analysis becomes a significant challenge.

- Alert fatigue and false positives: With overwhelming log volumes, traditional alerting systems can trigger a constant barrage of notifications. Many of these alerts might be false positives, leading to alert fatigue and hindering effective troubleshooting.

- Security and compliance: Logs often contain sensitive data. Striking a balance between retaining valuable information for analysis and adhering to data privacy regulations is crucial. Additionally, ensuring secure log storage and access control becomes paramount.

- Cost optimization: Storing massive log data comes at a cost. Finding the right balance between retaining essential information and purging less critical logs to optimize storage usage is an ongoing challenge.
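One common way to reduce the alert fatigue described above is to suppress repeats of the same alert for a cooldown period. The sketch below is a minimal version of that idea. The `AlertThrottle` class, the fingerprint strings, and the 300-second cooldown are assumptions made for illustration, and production alerting systems typically offer richer grouping and escalation rules.

```python
class AlertThrottle:
    """Suppress repeats of the same alert within a cooldown period."""

    def __init__(self, cooldown_seconds=300):
        self.cooldown_seconds = cooldown_seconds
        self._last_sent = {}  # fingerprint -> timestamp of last notification

    def should_notify(self, fingerprint, ts):
        last = self._last_sent.get(fingerprint)
        if last is not None and ts - last < self.cooldown_seconds:
            return False  # same alert fired recently, stay quiet
        self._last_sent[fingerprint] = ts
        return True

# Hypothetical alert stream: (fingerprint, timestamp in seconds).
alerts = [
    ("db-1:timeout", 0),
    ("db-1:timeout", 30),
    ("web-1:5xx", 40),
    ("db-1:timeout", 400),
]

throttle = AlertThrottle(cooldown_seconds=300)
sent = [(fp, ts) for fp, ts in alerts if throttle.should_notify(fp, ts)]
print(sent)  # [('db-1:timeout', 0), ('web-1:5xx', 40), ('db-1:timeout', 400)]
```

The duplicate at 30 seconds is dropped, while the same alert at 400 seconds goes through because the cooldown has expired. Note that this reduces noise but does nothing about false positives, which need better detection rules.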

Future trends in log aggregation

While log aggregation is increasingly becoming automated, the future will see it become self-sustaining, requiring minimal intervention from data engineers in log management activities. The core principles of log collection and analysis will remain, but the way we interact with and use this data is poised for a transformative shift.

As more data is generated with the rise of digitization, log data processing needs to transcend the limitations of centralized storage. Real-time analysis will become the norm, with insights extracted from logs the very moment they are created. This upstream processing, powered by advancements in cloud and edge computing, will not only optimize costs but also deliver valuable intelligence with unprecedented speed.

Plus, artificial intelligence and machine learning will become even more deeply woven into the fabric of log management. Anomaly detection will evolve into a predictive science, empowering organizations to anticipate and address potential system issues before they snowball into critical problems.
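To give a feel for what anomaly detection on log data can look like at its simplest, here is a sketch that flags minutes whose error count deviates sharply from the preceding history using a z-score. This is a basic statistical baseline, not the machine learning approach any particular vendor uses, and the threshold, warm-up length, and sample counts are assumptions chosen for the example.

```python
from statistics import mean, stdev

def find_anomalies(counts, threshold=3.0, warmup=5):
    """Flag indexes whose value deviates strongly from all earlier values."""
    anomalies = []
    for i in range(warmup, len(counts)):
        history = counts[:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            continue  # history is flat, so a z-score cannot be computed
        if abs(counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical errors-per-minute series derived from aggregated logs.
errors_per_minute = [4, 5, 6, 5, 4, 5, 6, 40, 5]

spikes = find_anomalies(errors_per_minute)
print(spikes)  # [7]
```

The spike at index 7 stands out against a history that hovers around five errors per minute. A predictive system would go further, for example by modeling seasonality, but the input is the same: counts derived from centrally collected logs.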

We will also witness an exponential growth of data originating from Internet of Things (IoT) devices, microservices architectures, and cloud-based applications, which necessitate robust and adaptable aggregation tools. These future solutions will need to handle massive volumes and diverse formats of log data seamlessly, ensuring businesses can effectively manage their digital exhaust regardless of its source or structure.

Thus, the future of log aggregation is dynamic and exciting, promising a paradigm shift in how we extract value from the ever-growing ocean of data surrounding us.

Conclusion

In this comprehensive guide, we’ve explored the world of log aggregation, demystifying its core concepts, highlighting its benefits, and providing insights into implementation best practices. By harnessing the power of log aggregation and monitoring, you can unlock valuable insights from your system’s data, streamline your IT operations, and gain a competitive edge.

As the future unfolds with advancements in AI, machine learning, and cloud technologies, log aggregation is poised to become an even more indispensable tool for organizations striving for complete visibility and control over their IT infrastructure.

FAQs

What is log aggregation?

The process of collecting log data from various sources across your IT infrastructure and centralizing it in a single location.

What types of logs can you aggregate?

Server logs, application logs, security logs, network logs, and system logs.

What are the benefits of log aggregation?

Enhanced operational visibility, performance and reliability improvements, and better security and compliance.

What are the top 3 tools for log aggregation?

Middleware, ELK Stack, and Splunk.