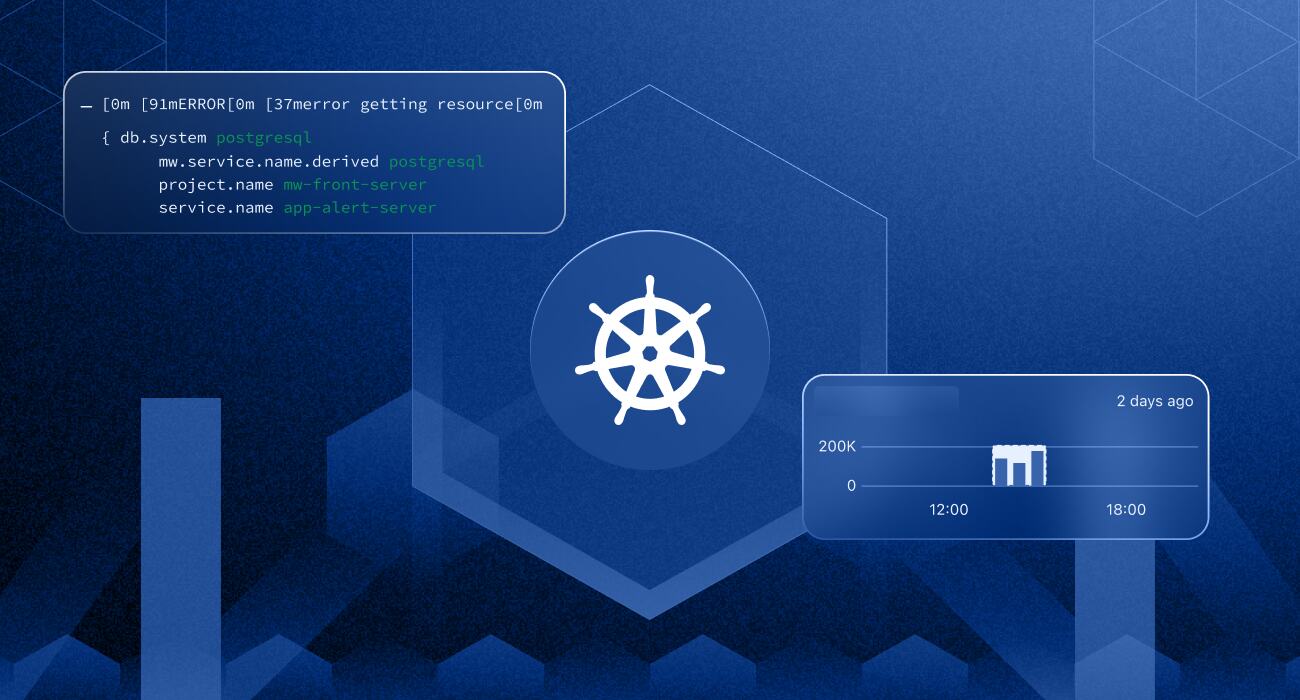

In the world of cloud-native applications, Kubernetes stands as the go-to platform for container orchestration (the automated process of managing, scaling, and maintaining containerized applications across multiple hosts). As applications grow in scale and complexity, effective logging becomes crucial for monitoring, troubleshooting, and maintaining smooth operations.

This guide explores the intricacies of Kubernetes logging, its significance, and the commands you are most likely to use when monitoring a cluster. We’ll also dive into the various logging sources within a Kubernetes environment, accompanied by code examples to illustrate key concepts.

Overview of Kubernetes logging

Kubernetes log monitoring involves collecting and analyzing log data from various sources within a Kubernetes cluster. Logs provide valuable insights into the state of your applications, nodes, and the cluster itself. They help identify issues, understand application behavior, and maintain overall system health.

Importance of logging in Kubernetes

- Troubleshooting: Logs help diagnose and resolve issues by providing a detailed record of events.

- Monitoring: Continuous log monitoring ensures that applications run smoothly and helps detect anomalies.

- Compliance: Logs can be used to maintain compliance with industry standards and regulations.

- Performance Optimization: Analyzing logs can reveal performance bottlenecks and opportunities for optimization.

Common logging challenges in Kubernetes environments

- Volume of Logs: Kubernetes’ dynamic and scalable nature can generate massive amounts of log data, making it difficult to manage and analyze.

- Log Retention: Determining how long to retain logs and ensuring they are stored efficiently.

- Log Aggregation: Collecting logs from multiple sources and aggregating them in a centralized location.

- Log Correlation: Correlating logs from different parts of the system to get a complete picture of events.

Understanding Kubernetes logging

1. Logging architecture

i. How does logging work in Kubernetes?

In Kubernetes, logs are typically generated by applications running in containers, the nodes on which these containers run, and the cluster components. Kubernetes does not handle log storage and analysis directly but allows integration with various logging solutions.

When a container writes to its standard output or standard error stream, the container runtime captures that output, and you can access it using commands like `kubectl logs`. These logs can then be shipped to external logging systems for further analysis.
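As a minimal illustration of the two streams involved, the hypothetical shell function below (the name `emit_demo_logs` is an assumption made for this sketch) writes one line to standard output and one to standard error. A process that behaves like this inside a container would have both lines show up in `kubectl logs`.

```sh
#!/bin/sh
# Hypothetical helper for illustration only: write one line to stdout and
# one to stderr, the two streams a container runtime captures.
emit_demo_logs() {
  echo "info - service started"          # goes to stdout
  echo "error - could not reach db" >&2  # goes to stderr
}
```

Running `emit_demo_logs 2>/dev/null` in a terminal shows only the first line, which makes the separation between the two streams visible.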

ii. Logging drivers and options

A logging driver is a component in containerized environments that manages how and where logs are stored and processed. It defines the mechanism by which logs from containers are collected, formatted, and sent to a specific logging backend or storage system. By configuring logging drivers, you can control the flow of log data, making it easier to monitor, troubleshoot, and analyze the performance and behavior of your applications.

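Logging drivers are a Docker concept rather than a Kubernetes one. Docker offers multiple logging drivers such as json-file, syslog, journald, fluentd, and gelf, and each driver has its own features and configuration options. On clusters that use a CRI runtime such as containerd or CRI-O (the norm since Kubernetes 1.24 removed the built-in Docker integration), the runtime instead writes container logs to files on the node, and the kubelet serves them to `kubectl logs`.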

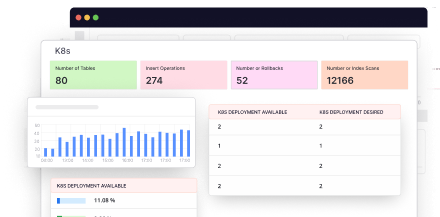


2. Kubernetes logging sources

i. Application logs

Application logs are generated by the applications running inside containers. These logs are essential for understanding application-specific events and behaviors.

To access application logs, you can use the `kubectl logs` command:

```sh
kubectl logs <pod-name>
```

ii. Node Logs

Node logs are generated by the Kubernetes nodes and include logs from the kubelet, container runtime, and other node-level components. These logs help monitor the health and performance of individual nodes.

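To access node logs, you can use SSH to connect to the node and view logs stored in the `/var/log` directory. On nodes where the kubelet runs as a systemd service, its logs go to journald instead, so `journalctl` is the tool to use there:

```sh
ssh user@node-ip
sudo tail -f /var/log/kubelet.log
# On systemd-based nodes, read the kubelet logs from journald instead:
sudo journalctl -u kubelet -f
```

iii. Cluster Logs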

Cluster logs encompass logs from the entire Kubernetes cluster, including logs from the control plane components like the API server, scheduler, and controller manager. These logs are crucial for understanding the cluster’s overall health and performance.

On clusters where the control plane components run as pods (for example, clusters created with kubeadm), you can access their logs with `kubectl logs` by pointing it at the component's pod in the `kube-system` namespace:

```sh
kubectl logs <pod-name> -n kube-system
```

Here, kube-system is a namespace in Kubernetes used to host the core infrastructure components and system services that are essential for running and managing the cluster. It is a predefined namespace that comes with every Kubernetes installation.

Setting the stage

Using a demo microservice to explain logging, monitoring, and debugging

To understand the intricacies of logging in Kubernetes, we’ll use a demo microservice. This simple project will help us explore how to set up logging, monitor logs, and debug issues within a production-like Kubernetes environment.

Basics of the project

The demo microservice is a Node.js application with basic functionalities like user authentication, transactions, and payments. It includes several endpoints to simulate different scenarios and log relevant events.

Here’s the basic structure of the project:

1. app.js: The main application file.

2. Dockerfile: Used to containerize the application.

3. deployment.yaml: Kubernetes deployment configuration.

4. service.yaml: Kubernetes service configuration.

5. simulate_requests.sh: Script to simulate various user interactions.

Setting up the project

1. Create the Application File (`app.js`)

First create the project directory and initialize an empty node project. Then install ExpressJS.

```sh
mkdir kubernetes-logging-demo
cd kubernetes-logging-demo
npm init -y
npm install express
```

The application handles user authentication, transactions, and payments, and includes logging for different events. Here’s the complete code for the `app.js` file.

```javascript
const express = require('express');
const app = express();
const port = 3000;

app.use(express.json());

// Simulated database
const users = {
  "john_doe": { password: "12345", balance: 100 },
  "jane_doe": { password: "67890", balance: 200 }
};

// Helper function for logging
const log = (level, message) => {
  const timestamp = new Date().toLocaleString();
  console.log(`${timestamp} - ${level} - ${message}`);
};

// Middleware to log requests
app.use((req, res, next) => {
  log('info', `Request received: ${req.method} ${req.originalUrl}`);
  next();
});

// Endpoint for user authentication
app.post('/login', (req, res) => {
  const { username, password } = req.body;
  if (users[username] && users[username].password === password) {
    log('info', `User login successful: ${username}`);
    res.status(200).send('Login successful');
  } else {
    log('warn', `User login failed: ${username}`);
    res.status(401).send('Login failed');
  }
});

// Endpoint for logging user transactions
app.post('/transaction', (req, res) => {
  const { username, amount } = req.body;
  if (users[username]) {
    users[username].balance += amount;
    log('info', `Transaction successful: ${username} new balance: ${users[username].balance}`);
    res.status(200).send('Transaction successful');
  } else {
    log('warn', `Transaction failed: User not found - ${username}`);
    res.status(404).send('User not found');
  }
});

// Endpoint for simulating payment processing
app.post('/payment', (req, res) => {
  const { username, amount } = req.body;
  if (users[username]) {
    if (users[username].balance >= amount) {
      users[username].balance -= amount;
      log('info', `Payment successful: ${username} amount: ${amount}`);
      res.status(200).send('Payment successful');
    } else {
      log('warn', `Payment failed: Insufficient funds - ${username}`);
      res.status(400).send('Insufficient funds');
    }
  } else {
    log('warn', `Payment failed: User not found - ${username}`);
    res.status(404).send('User not found');
  }
});

// Endpoint for simulating security incident
app.post('/admin', (req, res) => {
  const { username } = req.body;
  if (username === 'admin') {
    log('error', `Unauthorized access attempt by user: ${username}`);
    res.status(403).send('Unauthorized access');
  } else {
    res.status(200).send('Welcome');
  }
});

app.listen(port, () => {
  log('info', `Dummy microservice listening at http://localhost:${port}`);
});
```

2. Create the Dockerfile

This file is used to build a Docker image of the application.

```Dockerfile
FROM node:18

WORKDIR /usr/src/app

COPY package*.json ./
RUN npm install

COPY . .

EXPOSE 3000

CMD ["node", "app.js"]
```

3. Create the Kubernetes Deployment Configuration (`deployment.yaml`)

This file defines how the application will be deployed on Kubernetes.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logging-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logging-demo
  template:
    metadata:
      labels:
        app: logging-demo
    spec:
      containers:
        - name: logging-demo
          image: kubernetes-logging-demo:latest
          imagePullPolicy: Never
          ports:
            - containerPort: 3000
```

4. Create the Kubernetes Service Configuration (`service.yaml`)

This file defines the service that exposes the application. Because the service type is `NodePort`, the application is reachable on port 30001 of each cluster node, in addition to port 80 inside the cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: logging-demo-service
spec:
  selector:
    app: logging-demo
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
      nodePort: 30001
  type: NodePort
```

5. Simulate User Interactions (`simulate_requests.sh`)

This script sends various requests to the application to simulate different scenarios and trigger logs. Note that it runs in an infinite loop until you terminate it.

```bash
#!/bin/bash

# Define the base URL for the application
BASE_URL="http://localhost:30001"

# Define an array of curl commands to simulate different scenarios
curl_commands=(
  # Successful login
  "curl -i -X POST $BASE_URL/login -H 'Content-Type: application/json' -d '{\"username\":\"john_doe\",\"password\":\"12345\"}'"
  # Failed login due to wrong password
  "curl -i -X POST $BASE_URL/login -H 'Content-Type: application/json' -d '{\"username\":\"john_doe\",\"password\":\"wrong_password\"}'"
  # Successful transaction
  "curl -i -X POST $BASE_URL/transaction -H 'Content-Type: application/json' -d '{\"username\":\"john_doe\",\"amount\":50}'"
  # Failed transaction because the user is not found
  "curl -i -X POST $BASE_URL/transaction -H 'Content-Type: application/json' -d '{\"username\":\"unknown_user\",\"amount\":50}'"
  # Successful payment
  "curl -i -X POST $BASE_URL/payment -H 'Content-Type: application/json' -d '{\"username\":\"jane_doe\",\"amount\":50}'"
  # Failed payment due to insufficient funds
  "curl -i -X POST $BASE_URL/payment -H 'Content-Type: application/json' -d '{\"username\":\"john_doe\",\"amount\":200}'"
  # Unauthorized access attempt
  "curl -i -X POST $BASE_URL/admin -H 'Content-Type: application/json' -d '{\"username\":\"admin\"}'"
)

# Infinite loop to continuously execute curl requests
while true; do
  # Select a random curl command from the array
  cmd=${curl_commands[$RANDOM % ${#curl_commands[@]}]}

  # Print the command being executed (for debugging/visibility)
  echo " Executing: $cmd"

  # Execute the selected curl command
  eval $cmd

  # Optional: Add a short sleep to mimic real user behavior
  # This adds a delay of 1 second between requests
  sleep 1
done
```

Running the project as a Kubernetes pod locally

1. Build the Docker image

```sh
docker build -t kubernetes-logging-demo:latest .
```

2. Deploy to Kubernetes

```sh
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml
```

3. Simulate User Interactions (this will make our app generate the logs)

Use a dedicated bash terminal to keep this simulation running as required. The script must be executable before you can run it directly.

```sh
chmod +x simulate_requests.sh
./simulate_requests.sh
```

With this, your local setup is ready, and you can test out different `kubectl` commands in real-time. This setup helps us understand how to collect, monitor, and analyze logs, which is crucial for maintaining the health and performance of our applications.

Scenarios where logging is crucial

1. Tracking user activity

Monitoring user activity is essential for understanding user behavior, identifying trends, and detecting unusual patterns that might indicate security issues.

2. Debugging payment issues

Payment-related logs are critical for resolving transaction failures, identifying bugs in the payment processing logic, and ensuring that financial operations are secure and reliable.

Basics of kubectl logs Command

The `kubectl logs` command is a powerful tool for accessing the logs of containers running in your Kubernetes cluster.

Syntax and Basic Usage

```sh
kubectl logs [OPTIONS] POD_NAME [-c CONTAINER_NAME]
```

- `POD_NAME`: The name of the pod whose logs you want to view.

- `-c CONTAINER_NAME`: (Optional) Specifies the container within the pod. Useful if the pod has multiple containers.

Here’s a simple example to get logs from a pod:

```sh
kubectl logs my-pod
```

If the pod contains multiple containers, specify the container name:

```sh
kubectl logs my-pod -c my-container
```

If you have set up the demo project properly, you can find your pod name by using this command:

```sh
kubectl get pods
```

Your terminal might output something like this:

```sh
NAME                            READY   STATUS    RESTARTS   AGE
logging-demo-6cf76dcb4c-mz7bv   1/1     Running   0          69m
```

Now, to access the logs of this pod, you just have to run this command:

```sh
kubectl logs logging-demo-6cf76dcb4c-mz7bv
```

The corresponding output will look something like this:

```sh
6/29/2024, 11:24:52 AM - info - Dummy microservice listening at http://localhost:3000
```

Let’s use our `simulate_requests.sh` script to send requests to our pod and monitor the logs it generates. To do this, simply keep the script running in a dedicated terminal.

Filtering and Viewing Logs by Pod and Container

You can filter and view logs for specific pods and containers to narrow down your troubleshooting efforts.

Viewing Logs for a Specific Pod

To view logs for a specific pod:

```sh
kubectl logs my-pod
```

Viewing Logs for a Specific Container in a Pod

As explained above, if the pod has multiple containers, specify the container name:

```sh
kubectl logs my-pod -c my-container
```

Viewing Logs with a Label Selector

You can also use label selectors to filter logs from pods that match specific labels:

```sh
kubectl logs -l app=my-app
```

For our example project, this would look something like this:

```sh
kubectl logs -l app=logging-demo
```

Note that the app name label is configured in the `deployment.yaml` file.

Analyzing Startup and Runtime Logs

Startup logs are crucial for identifying issues that occur during the initialization phase of your containers, while runtime logs help monitor the ongoing operations.

Viewing Startup Logs

To analyze logs from the startup phase of a recently started pod, use the `--since` flag with the `kubectl logs` command. This flag returns only logs newer than a relative duration (such as `5m` or `1h`), so when the pod started within that window, the output includes its startup messages.

```sh
kubectl logs --since=5m my-pod
```

Viewing Runtime Logs

For continuous monitoring of runtime logs, you can use this command:

```sh
kubectl logs my-pod --follow
```

The `--follow` option streams the logs in real-time, allowing you to monitor the container’s activities as they happen.

Deep Dive into kubectl logs --tail Command

The `kubectl logs --tail` command is particularly useful for debugging. It lets you view the most recent log entries without having to go through the entire log history, and it can be combined with `--follow` for real-time monitoring.

How Does It Work, and How Does It Differ from Other Log Options?

The `--tail` option with `kubectl logs` fetches the last few lines of logs from a pod or container.

Basic syntax:

```sh
kubectl logs my-pod --tail=50
```

This command retrieves the last 50 lines of logs from `my-pod`.

Differences from Other Log Options

- `--since`: Retrieves logs from a specific time period.

- `--follow`: Streams logs in real-time.

- `--tail`: Fetches a specified number of recent log lines.

Practical Use Cases

How to Tail Logs for Real-Time Monitoring?

To tail logs for real-time monitoring of a pod, use this command:

```sh
kubectl logs my-pod --follow --tail=50
```

This streams the last 50 lines of logs and continues to stream new log entries in real-time.

How to Tail Logs for a Specific Component in the Project

For tailing logs of a specific component, specify the container name:

```sh
kubectl logs my-pod -c my-container --tail=50
```

This command fetches the last 50 lines of logs from the `my-container` container within `my-pod`.

For our demo project, you can use the command below to get the container details within a pod.

```sh
kubectl describe pod logging-demo-6cf76dcb4c-mz7bv -n default
```

Advanced Techniques

Combining --tail with Other Flags

Combining the `--tail` option with other flags in `kubectl logs` can enhance your logging capabilities and provide more detailed insights.

Examples: -f (follow), --since, --timestamps

Follow (`-f`): Combines real-time streaming with tailing the logs.

```sh
kubectl logs my-pod --tail=50 -f
```

This command fetches the last 50 lines and streams new logs in real-time.

Since (`--since`): Retrieves logs from a specific time period.

```sh
kubectl logs my-pod --tail=50 --since=1h
```

This command fetches at most the last 50 lines logged within the past hour.

Timestamps (`--timestamps`): Adds timestamps to each log entry.

```sh
kubectl logs my-pod --tail=50 --timestamps
```

This command includes timestamps for the last 50 log lines, which is useful for chronological analysis if the component logs do not have timestamps of their own.
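The timestamp prefix is also convenient for slicing logs by time. `kubectl logs` offers `--since` and `--since-time` for a lower bound; the sketch below adds an upper bound with a small `awk` filter. The function name `lines_between` is hypothetical, and the filter assumes each line starts with the UTC timestamp that `--timestamps` adds (for example `2024-06-29T12:45:43.695529512Z`).

```sh
#!/bin/sh
# Hypothetical helper: keep only lines whose leading timestamp falls within
# [start, end], both given as YYYY-MM-DDTHH:MM:SS.
# Only the first 19 characters of the first field are compared, as strings;
# that layout sorts chronologically as long as all stamps share a time zone.
lines_between() {
  awk -v start="$1" -v end="$2" '
    { ts = substr($1, 1, 19) }
    ts >= start && ts <= end
  '
}
```

A possible invocation is `kubectl logs my-pod --timestamps | lines_between 2024-06-29T12:45:43 2024-06-29T12:45:44`, which would keep only the entries logged during those two seconds.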

Real-World Examples Where These Combinations Are Useful

1. Debugging a Deployment Issue:

```sh
kubectl logs my-pod --tail=100 -f --since=10m
```

This command is useful to debug recent issues by streaming the last 100 lines of logs from the past 10 minutes.

2. Performance Monitoring:

```sh
kubectl logs my-pod --tail=200 --timestamps -f
```

Use this command to monitor performance metrics in real-time with precise timestamps.

If you use it with our demo project, here’s the output that you may see. Note that the logs generated are due to the simulated requests we are sending.

```sh
kubectl logs logging-demo-6cf76dcb4c-mz7bv --tail=200 --timestamps -f
2024-06-29T12:45:42.618459492Z 6/29/2024, 12:45:42 PM - info - Request received: POST /payment
2024-06-29T12:45:42.618497775Z 6/29/2024, 12:45:42 PM - warn - Payment failed: Insufficient funds - jane_doe
2024-06-29T12:45:43.695529512Z 6/29/2024, 12:45:43 PM - info - Request received: POST /transaction
2024-06-29T12:45:43.695560010Z 6/29/2024, 12:45:43 PM - info - Transaction successful: john_doe new balance: 200
2024-06-29T12:45:44.766836119Z 6/29/2024, 12:45:44 PM - info - Request received: POST /login
2024-06-29T12:45:44.766874021Z 6/29/2024, 12:45:44 PM - warn - User login failed: john_doe
.
.
.
```

Using Labels and Selectors

Filtering logs using labels and selectors helps you focus on specific parts of your application, especially in large clusters.

Filtering Logs Using Labels and Selectors

To filter logs by labels:

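```sh
kubectl logs -l app=my-app --tail=50
```

This command fetches the last 50 lines of logs from all pods labeled `app=my-app`.

Examples to Illustrate the Usage

1. Filtering Logs for the `frontend` Component:

```sh
kubectl logs -l component=frontend --tail=100 -f
```

This command fetches and streams the last 100 lines of logs from all frontend components.

2. Filtering Logs for Pods Running on a Specific Node:

```sh
kubectl logs -l node=worker-node1 --tail=50
```

Use this command to fetch logs from pods that carry the label `node=worker-node1`. Label selectors match labels on the pods themselves, not the node a pod is scheduled on, so this only works if you add such a label to your pods. To find the pods that are actually running on a node, you can list them with `kubectl get pods --field-selector spec.nodeName=worker-node1` and then fetch their logs by name.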

You can fetch the list of nodes in your environment using the `kubectl get nodes` command.

Component names are generally configured in your deployment or pod configs, similar to app labels, for better identification.
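When a label selector matches several pods, their log lines arrive in one combined stream. One way to see how much each pod contributes is to ask `kubectl logs` to tag every line with its source using `--prefix` and then count the tags. The sketch below assumes the `[pod/<pod-name>/<container-name>]` tag format at the start of each line; the function name `lines_per_source` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: count log lines per source, assuming each line starts
# with the "[pod/<pod-name>/<container-name>]" tag added by --prefix.
# Lines without such a tag are ignored.
lines_per_source() {
  awk 'index($1, "[pod/") == 1 { n[$1]++ }
       END { for (src in n) print n[src], src }' | sort -k2
}
```

For the demo project, this could be used as `kubectl logs -l app=logging-demo --prefix --tail=100 | lines_per_source`.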

Scripting and automation

Automating log tailing with scripts and integrating with CI/CD pipelines can enhance continuous monitoring and streamline troubleshooting.

Automating log tailing with scripts

You can create scripts to automate the process of tailing logs. Here’s an example script:

```sh
#!/bin/bash
# Script to tail logs from all pods with label app=logging-demo
kubectl logs -l app=logging-demo --tail=100 -f
```

Save this script as `tail_logs.sh` and run it to automate log tailing.

Integration with CI/CD pipelines for continuous monitoring

Here’s how you can integrate log tailing in your CI/CD pipelines to monitor deployments and application health continuously.

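```groovy
// Example Jenkinsfile
pipeline {
    agent any
    stages {
        stage('Deploy') {
            steps {
                sh 'kubectl apply -f deployment.yaml'
            }
        }
        stage('Monitor Logs') {
            steps {
                sh './tail_logs.sh'
            }
        }
    }
}
```

This Jenkins pipeline deploys your application and then tails the logs. Because `tail_logs.sh` uses `-f`, the `Monitor Logs` stage keeps running until the build is aborted or times out; remove `-f` from the script if the stage should finish on its own.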
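For a pipeline stage that should end with a pass or fail result, one option is to pipe a bounded `kubectl logs` call (without `-f`) through a small filter that returns a non-zero exit code when error-level lines appear. The sketch below assumes the demo application's `timestamp - level - message` log format; the function name `fail_on_error_logs` is hypothetical.

```sh
#!/bin/sh
# Hypothetical CI helper: read log lines on stdin, pass them through
# unchanged, and return a non-zero exit code if any line has the level
# "error" in the demo format "timestamp - level - message".
fail_on_error_logs() {
  awk -F ' - ' '
    BEGIN { found = 0 }
    { print }
    NF >= 3 && $2 == "error" { found = 1 }
    END { exit found }
  '
}
```

A stage could then run something like `kubectl logs -l app=logging-demo --since=5m | fail_on_error_logs`, which finishes once the existing logs have been read.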

Scenario-Based Exploration

Once we have run the `simulate_requests.sh` script for some time, our pod will have generated a lot of logs covering many different successful and failed requests. How do we actually analyze these logs in a production environment? Let’s explore some operations with our project.

Scenario 1: Monitoring Application Performance

Using tail logs to monitor key performance metrics helps understand your application’s behavior and performance under load. Here’s how you can effectively monitor and compute these metrics in real-time. You can run these commands in a bash terminal.

Using Tail Logs to Monitor Key Performance Metrics

You can create custom scripts to store your logic to measure specific things based on your logs.

```sh
kubectl logs -l app=logging-demo --tail=100 | awk '
# Convert a timestamp such as "6/29/2024, 12:45:42 PM" to seconds since midnight.
function parseTime(ts,    parts, time_parts, ampm, h, m, s) {
  # Splitting on spaces gives: parts[1] = date, parts[2] = time, parts[3] = AM/PM
  split(ts, parts, " ")
  ampm = parts[3]
  split(parts[2], time_parts, ":")
  h = time_parts[1] + 0
  m = time_parts[2] + 0
  s = time_parts[3] + 0
  if (ampm == "PM" && h < 12) h += 12
  if (ampm == "AM" && h == 12) h = 0
  return (h * 3600) + (m * 60) + s
}
/-/ {
  curr_time = parseTime($1 " " $2 " " $3)
  if (prev_time > 0) {
    response_time = curr_time - prev_time
    sum += response_time
    count += 1
    if (response_time > max || count == 1) max = response_time
    if (response_time < min || count == 1) min = response_time
  }
  prev_time = curr_time
}
END {
  if (count > 0) {
    printf "Average Response Time: %.2f seconds\nMax Response Time: %d seconds\nMin Response Time: %d seconds\n", sum/count, max, min
  } else {
    print "No response times calculated."
  }
}'
```

Output:

```sh
Average Response Time: 0.54 seconds
Max Response Time: 2 seconds
Min Response Time: 0 seconds
```

This command fetches the last 100 log lines from all pods labeled `app=logging-demo` and uses `awk` to measure the gap between consecutive log entries, which serves here as a rough proxy for response time. Because the demo logs carry only second-level timestamps and no request identifiers, treat the numbers as approximate. The script reports these key metrics:

- Average Response Time

- Max Response Time

- Min Response Time

Scenario 2: Investigating Security Incidents

Tail logs are essential for identifying and analyzing suspicious activities, enabling a quick response to security incidents. Here’s how you can filter logs specific to such activity.

Tail Logs for Identifying and Analyzing Suspicious Activities

```sh
kubectl logs -l app=logging-demo --tail=200 | grep -iE 'error|failed|unauthorized' | awk '{count += 1} END {print "Total Security Incidents Detected:", count + 0}'
```

Output:

```sh
Total Security Incidents Detected: 63
```

This command retrieves the last 200 log lines and filters for keywords such as “error,” “failed,” and “unauthorized,” which can help in identifying potential security breach attempts. Note that “failed” also matches ordinary failures such as a payment with insufficient funds, so the count is an upper bound rather than an exact number of incidents. It uses `awk` to count the number of matching lines. The `awk` command is a versatile tool for text processing in Unix-like systems. It excels in pattern matching, field manipulation, and generating reports using custom logic as per your application needs.
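A per-level breakdown is a slightly finer-grained variant of the single count above. The sketch below assumes the demo application's `timestamp - level - message` format and splits each line on the ` - ` separator; the function name `count_by_level` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: count log lines per level, assuming the demo format
# "timestamp - level - message". The level is the second " - " separated
# field; lines with fewer than three fields are skipped.
count_by_level() {
  awk -F ' - ' 'NF >= 3 { n[$2]++ }
                END { for (level in n) print level, n[level] }' | sort
}
```

With the demo project running, `kubectl logs -l app=logging-demo --tail=200 | count_by_level` would print one line per level, such as `info`, `warn`, and `error`, each followed by its count.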

By running such commands and scripts, you can directly compute and track essential metrics, enabling real-time monitoring and swift response to any issues.

Conclusion

By mastering these logging techniques, you can significantly enhance your ability to monitor, troubleshoot, and secure your Kubernetes applications. Start implementing these strategies today to maintain robust and reliable cloud-native applications.
